When people speak of the mathematization of science, they most often mean only the purely pragmatic use of computational methods, forgetting A. A. Lyubishchev's apt remark that mathematics is not so much the servant as the queen of all sciences. It is the level of mathematization that places a science among the exact ones, if by this we mean not the use of precise quantitative estimates but a high level of abstraction and the freedom to operate with concepts belonging to non-numerical mathematics.

Among the methods of such qualitative mathematics that have found effective application in chemistry, the main role belongs to sets, groups, algebras, topological constructions and, first of all, graphs - the most general method of representing chemical structures.

Let's take, for example, four points arbitrarily located on a plane or in space, and connect them with three lines so that all four points are joined into one connected figure. No matter how these points (called vertices) are located and no matter how they are connected to each other by lines (called edges), we will get only two possible graph structures, differing from each other in the mutual arrangement of connections: one graph resembling the letters "P" or "I", and another resembling the letters "T", "E" or "U". If instead of four abstract points we take four carbon atoms, and instead of lines we take chemical bonds between them, then the two graphs will correspond to the two possible isomers of butane, the normal and the iso structure.
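The claim about four points and three lines can be checked by brute force. The sketch below is only an illustration, not part of the original text: it enumerates every way of choosing three edges among four labelled vertices, keeps the connected choices, and groups them by sorted degree sequence. The function names are hypothetical, and the degree sequence is used as a classifier only because it happens to separate the two structures for four vertices; it is not a complete graph invariant in general.

```python
from itertools import combinations

def is_connected(n, edges):
    """Depth-first search from vertex 0; True if every vertex is reached."""
    adj = {v: set() for v in range(n)}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    seen, stack = {0}, [0]
    while stack:
        for w in adj[stack.pop()]:
            if w not in seen:
                seen.add(w)
                stack.append(w)
    return len(seen) == n

def skeleton_types(n=4, m=3):
    """Group connected n-vertex, m-edge graphs by sorted degree sequence."""
    types = {}
    all_pairs = list(combinations(range(n), 2))
    for edges in combinations(all_pairs, m):
        if not is_connected(n, edges):
            continue
        deg = [0] * n
        for u, v in edges:
            deg[u] += 1
            deg[v] += 1
        key = tuple(sorted(deg))
        types[key] = types.get(key, 0) + 1
    return types

types = skeleton_types()
# (1, 1, 2, 2) is the chain (normal butane); (1, 1, 1, 3) is the star (isobutane).
print(types)
```

Only two degree sequences appear, one for the unbranched chain and one for the branched star, whatever the numbering of the vertices.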

What is the reason for the growing interest of chemists in graph theory, this bizarre but very simple language of dots and lines?

A graph has the remarkable property that it remains unchanged under any deformation of the structure that does not break the connections between its elements. The structure of a graph can be distorted until it loses all symmetry in the usual sense; nevertheless, the graph retains symmetry in the topological sense, determined by the equivalence and interchangeability of its end vertices. Given this hidden symmetry, one can, for example, predict the number of different isomeric amines obtained from the structures of butane and isobutane by replacing carbon atoms with nitrogen atoms; graphs also make it possible to use simple physical considerations to understand patterns of the "structure-property" type.
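The amine count mentioned above can be sketched as a count of vertex equivalence classes: two carbon positions give the same amine when some symmetry of the graph maps one onto the other. The code below is a minimal illustration under that assumption; it finds all edge-preserving renumberings by brute force, which is practical only for very small graphs, and the function name is hypothetical.

```python
from itertools import permutations

def vertex_orbits(n, edges):
    """Classes of vertices that are interchangeable under graph symmetries."""
    edge_set = {frozenset(e) for e in edges}
    parent = list(range(n))

    def find(v):
        while parent[v] != v:
            v = parent[v]
        return v

    for perm in permutations(range(n)):
        image = {frozenset((perm[u], perm[v])) for u, v in edges}
        if image != edge_set:
            continue  # this renumbering does not preserve the bonds
        for v in range(n):
            a, b = find(v), find(perm[v])
            if a != b:
                parent[a] = b

    orbits = {}
    for v in range(n):
        orbits.setdefault(find(v), []).append(v)
    return sorted(orbits.values())

butane = [(0, 1), (1, 2), (2, 3)]      # chain C-C-C-C
isobutane = [(0, 1), (0, 2), (0, 3)]   # central atom 0 with three neighbours

print(vertex_orbits(4, butane))
print(vertex_orbits(4, isobutane))
```

Each skeleton has two classes of positions (end and inner atoms in butane, central and outer atoms in isobutane), so replacing one carbon by nitrogen gives two distinct amines from each skeleton, four in total.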

Another, somewhat unexpected idea is to express the structural qualities of graphs (for example, the degree of their branching) in numbers. Intuitively, we feel that isobutane is more branched than normal butane; this can be expressed quantitatively, say, by the fact that the structural fragment of propane occurs three times in the isobutane molecule and only twice in normal butane. Structural numbers of this kind (the best known is the Wiener topological index) correlate surprisingly well with characteristics of saturated hydrocarbons such as boiling point or heat of combustion. Recently a peculiar fashion for inventing topological indices has appeared, and there are already more than twenty of them; its alluring simplicity makes this Pythagorean method increasingly popular *.
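The propane-fragment count for the two butanes is easy to reproduce. A propane fragment is a path of two bonds, and every such path is fixed by its middle atom and a pair of that atom's neighbours, so the count is the sum of C(d, 2) over the vertex degrees d. The sketch below uses this standard identity; the function name is chosen only for illustration.

```python
from math import comb

def propane_fragments(n, edges):
    """Number of two-bond paths: sum of C(degree, 2) over all vertices."""
    deg = [0] * n
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return sum(comb(d, 2) for d in deg)

butane = [(0, 1), (1, 2), (2, 3)]
isobutane = [(0, 1), (0, 2), (0, 3)]

print(propane_fragments(4, butane))
print(propane_fragments(4, isobutane))
```

The two values are 2 and 3, matching the counts quoted in the text.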

The use of graph theory in chemistry is not limited to the structure of molecules. Back in the thirties, A. A. Balandin, one of the predecessors of modern mathematical chemistry, proclaimed the principle of isomorphic substitution, according to which the same graph carries uniform information about the properties of the most diverse structured objects; it is only important to clearly define which elements are selected as vertices and what kind of relationships between them will be expressed by edges. So, in addition to atoms and bonds, you can select phases and components, isomers and reactions, macromolecules and interactions between them as vertices and edges. One can notice a deep topological relationship between the Gibbs phase rule, the stoichiometric Horiuchi rule and the rational classification of organic compounds according to the degree of their unsaturation. With the help of graphs, interactions between elementary particles, crystal fusion, cell division are successfully described... In this sense, graph theory serves as a visual, almost universal language of interdisciplinary communication.

The development of each scientific idea traditionally goes through the following stages: article, review, monograph, textbook. The cluster of ideas called mathematical chemistry has already passed the stage of reviews, although it has not yet reached the status of an academic discipline. Because of the diversity of directions, the main form of publication in this area is now the collection; several such collections were published in 1987-1988.

The first collection edited by R. King “Chemical applications of topology and graph theory” (M., “Mir”, 1987) contains a translation of reports from an international symposium with the participation of chemists and mathematicians from different countries. The book gives a complete picture of the motley palette of approaches that emerged at the intersection of graph theory and chemistry. It touches on a very wide range of issues, starting from the algebraic structure of quantum chemistry and stereochemistry, the magic rules of electronic counting, and ending with the structure of polymers and the theory of solutions. Organic chemists will undoubtedly be attracted by the new strategy for the synthesis of trefoil-type molecular knots, an experimental implementation of the idea of a molecular Möbius strip. Of particular interest will be review articles on the use of the topological indices already mentioned above to assess and predict a wide variety of properties, including the biological activity of molecules.

The translation of this book is also useful because the issues raised in it may help resolve a number of debatable problems in the field of methodology of chemical science. Thus, the rejection by some chemists in the 50s of the mathematical symbolism of resonance formulas gave way in the 70s to the denial by some physicists of the very concept of chemical structure. Within the framework of mathematical chemistry, such contradictions can be eliminated, for example, using a combinatorial-topological description of both classical and quantum chemical systems.

Although the works of Soviet scientists are not presented in this collection, it is gratifying to note the increased interest in the problems of mathematical chemistry in domestic science. An example is the first workshop “Molecular graphs in chemical research” (Odessa, 1987), which brought together about a hundred specialists from all over the country. Compared to foreign research, domestic work is distinguished by a more pronounced applied nature, focus on solving problems of computer synthesis, and creating various data banks. Despite the high level of reports, the meeting noted an unacceptable lag in the training of specialists in mathematical chemistry. Only at Moscow and Novosibirsk universities are occasional courses given on individual issues. At the same time, it is time to seriously raise the question: what kind of mathematics should chemistry students study? Indeed, even in university mathematical programs of chemical departments such sections as the theory of groups, combinatorial methods, theory of graphs, and topology are practically not represented; in turn, university mathematicians do not study chemistry at all. In addition to the problem of training, the issue of scientific communications is urgent: an all-Union journal on mathematical chemistry is needed, published at least once a year. The journal "MATCH" (Mathematical Chemistry) has been published abroad for many years, and our publications are scattered across collections and a wide variety of periodicals.

Until recently, the Soviet reader could get acquainted with mathematical chemistry only from the book by V. I. Sokolov “Introduction to Theoretical Stereochemistry” (M.: Nauka, 1979) and the brochure by I. S. Dmitriev “Molecules without Chemical Bonds” (L.: Khimiya, 1977). Partially filling this gap, the Siberian branch of the Nauka publishing house last year published the book “Application of Graph Theory in Chemistry” (edited by N. S. Zefirov and S. I. Kuchanov). The book consists of three sections: the first is devoted to the use of graph theory in structural chemistry; the second examines reaction graphs; the third shows how graphs can be used to facilitate the solution of many traditional problems in the chemical physics of polymers. Of course, this book is not yet a textbook (a significant part of the ideas discussed are original results of the authors); nevertheless, the first part of the collection can be fully recommended for an initial acquaintance with the subject.

Another collection, the proceedings of the seminar of the Faculty of Chemistry of Moscow State University “Principles of symmetry and systematicity in chemistry” (edited by N. F. Stepanov), was published in 1987. The main topic of the collection is group-theoretic, graph-theoretic and system-theoretic methods in chemistry. The range of issues discussed is unconventional, and the answers to them are even less standard. The reader will learn, for example, about the reasons for the three-dimensionality of space, about a possible mechanism for the origin of dissymmetry in living nature, about the principles of designing a periodic system of molecules, about the planes of symmetry of chemical reactions, about the description of molecular shapes without the use of geometric parameters, and much more. Unfortunately, the book can only be found in scientific libraries, since it has not gone on general sale.

Since we are talking about the principles of symmetry and systematicity in science, it is impossible not to mention another unusual book, “System. Symmetry. Harmony” (M.: Mysl, 1988). This book is dedicated to one of the variants of the so-called general theory of systems (GTS), the one proposed and developed by Yu. A. Urmantsev, which today has found the largest number of supporters among scientists of various specialties, in both the natural sciences and the humanities. The initial principles of Urmantsev's GTS are the concepts of system and chaos, polymorphism and isomorphism, symmetry and asymmetry, as well as harmony and disharmony.

It seems that Urmantsev’s theory should attract the closest attention of chemists, if only because it elevates the traditionally chemical concepts of composition, isomerism, and dissymmetry to the rank of system-wide ones. In the book you can find striking symmetry analogues, for example between isomers of leaves and molecular structures **. Of course, in some places the book has to be read with a certain professional detachment, for example when it comes to chemical-musical parallels or the rationale for a mirror-symmetrical system of elements. Nevertheless, the book is permeated by the central idea of finding a universal language expressing the unity of the universe, akin perhaps to the Castalian language of the “bead game” of Hermann Hesse.

Speaking about the mathematical structures of modern chemistry, one cannot ignore the wonderful book by A. F. Bochkov and V. A. Smith “Organic Synthesis” (M.: Nauka, 1987). Although its authors are “pure” chemists, a number of ideas discussed in the book are very close to the problems raised above. Without dwelling on the brilliant form of presentation and depth of content of this book, after reading which you want to take up organic synthesis, we will emphasize only two points. Firstly, considering organic chemistry through the prism of its contribution to world science and culture, the authors draw a clear parallel between chemistry and mathematics as universal sciences that draw the objects and problems of their research from within themselves. In other words, to the traditional status of mathematics as the queen and servant of chemistry, we can add the peculiar hypostasis of its sister. Secondly, convincing the reader that organic synthesis is an exact science, the authors appeal to the accuracy and rigor of both structural chemistry itself and to the perfection of the logic of chemical ideas.

If experimenters say so, is there any doubt that the hour of mathematical chemistry has come?

________________________

* See "Chemistry and Life", 1988, No. 7, p. 22.

** See "Chemistry and Life", 1989, No. 2.

Graphs serve primarily as a means of representing molecules. When describing a molecule topologically, it is depicted in the form of a molecular graph (MG), where vertices correspond to atoms and edges correspond to chemical bonds (graph-theoretic model of a molecule). Typically, only skeletal atoms are considered in this representation, for example, hydrocarbons with “erased” hydrogen atoms.

The valency of chemical elements imposes certain restrictions on the degrees of vertices. For alkane trees (connected graphs that do not have cycles), the degrees of vertices (r) cannot exceed four (r = 1, 2, 3, 4).

Graphs can be specified in matrix form, which is convenient when working with them on a computer.

The adjacency matrix of the vertices of a simple graph is a square matrix $A = [a_{\sigma\chi}]$ with elements $a_{\sigma\chi} = 1$ if the vertices $\sigma$ and $\chi$ are connected by an edge, and $a_{\sigma\chi} = 0$ otherwise. The distance matrix is a square matrix $D = [d_{\sigma\chi}]$ with elements $d_{\sigma\chi}$ defined as the minimum number of edges (the shortest distance) between vertices $\sigma$ and $\chi$. Sometimes adjacency and distance matrices for edges ($A_e$ and $D_e$) are also used.
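As an illustration of these two definitions, the sketch below builds the adjacency and distance matrices for the carbon skeleton of 2-methylbutane. The choice of molecule, the vertex numbering and the function names are assumptions made for the example; distances are found by breadth-first search, which gives the minimum number of edges in an unweighted graph.

```python
from collections import deque

def adjacency_matrix(n, edges):
    """Square 0/1 matrix: entry is 1 when the two vertices share an edge."""
    A = [[0] * n for _ in range(n)]
    for u, v in edges:
        A[u][v] = A[v][u] = 1
    return A

def distance_matrix(A):
    """Minimum number of edges between every pair of vertices (BFS)."""
    n = len(A)
    D = [[0] * n for _ in range(n)]
    for start in range(n):
        dist = {start: 0}
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for w in range(n):
                if A[u][w] and w not in dist:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        for target, d in dist.items():
            D[start][target] = d
    return D

# Skeleton of 2-methylbutane: chain 0-1-2-3 with a methyl group 4 on atom 1.
edges = [(0, 1), (1, 2), (2, 3), (1, 4)]
A = adjacency_matrix(5, edges)
D = distance_matrix(A)
for row in D:
    print(row)
```

The row sums of A are the vertex degrees, so the valence restriction on alkane skeletons can be checked as `max(sum(row) for row in A) <= 4`.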

The form of the matrices $A$ and $D$ ($A_e$ and $D_e$) depends on the method of numbering the vertices (or edges), which causes inconvenience when handling them. To characterize the graph, graph invariants are used: topological indices (TI).

Among them are:

- the number of paths of length one, $p_1 = m = n - 1$ (for a tree with $n$ vertices and $m$ edges);

- the number of paths of length two;

- the number of triplets of adjacent edges (with a common vertex);

- the Wiener number $W$, defined as half the sum of the elements of the distance matrix of the graph under consideration:

$$W = \frac{1}{2} \sum_{\sigma,\chi} d_{\sigma\chi},$$

etc.
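The indices listed above can be computed directly from an edge list. The sketch below is an illustration under stated assumptions rather than the author's program: the counts of two-edge paths and of edge triplets at a common vertex are taken from the standard identities, sums of C(d, 2) and C(d, 3) over the vertex degrees d, and the dictionary keys, the test molecule (2-methylbutane) and the renumbering are chosen for the example. The graph is assumed to be connected.

```python
from collections import deque
from math import comb

def wiener_number(n, edges):
    """Half the sum of all distance-matrix elements; distances by BFS."""
    adj = [[] for _ in range(n)]
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    total = 0
    for start in range(n):
        dist = {start: 0}
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for w in adj[u]:
                if w not in dist:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        total += sum(dist.values())
    return total // 2

def indices(n, edges):
    """A few topological indices of a connected molecular graph."""
    deg = [0] * n
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return {
        "p1": len(edges),                           # paths of length one
        "p2": sum(comb(d, 2) for d in deg),         # paths of length two
        "triplets": sum(comb(d, 3) for d in deg),   # three edges at one vertex
        "W": wiener_number(n, edges),               # Wiener number
    }

isopentane = [(0, 1), (1, 2), (2, 3), (1, 4)]
relabel = [3, 0, 4, 1, 2]  # an arbitrary renumbering of the same skeleton
renumbered = [(relabel[u], relabel[v]) for u, v in isopentane]

print(indices(5, isopentane))
print(indices(5, renumbered))
```

Both calls print the same values, which is the point of using invariants: the matrices change with the numbering of the vertices, while the indices do not.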

The methodology for studying the structure-property relationship through topological indices in the graph-theoretic approach includes the following steps.

Selection of research objects (training sample) and analysis of the state of numerical data on property P for a given range of compounds.

Selection of TIs taking into account their discriminatory ability, correlation ability with properties, etc.

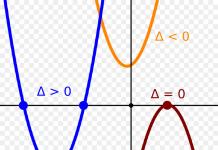

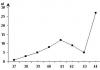

Study of graphical dependencies “Property P - TI of a molecule graph”, for example, P on n - the number of skeletal atoms, P on W - Wiener number, etc.

Establishing a functional (analytical) relationship $P = f(\mathrm{TI})$, for example,

$$P = a(\mathrm{TI}) + b,$$

$$P = a\ln(\mathrm{TI}) + b,$$

$$P = a(\mathrm{TI})_1 + b(\mathrm{TI})_2 + \dots + n(\mathrm{TI})_n + c,$$

etc. Here a, b, c, ... are parameters to be determined (they should not be confused with the parameters of additive schemes).

Numerical calculations of P, comparison of calculated values with experimental ones.

Prediction of the properties of compounds that have not yet been studied or even obtained (outside this sample).
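The fitting and prediction steps can be sketched with ordinary least squares. Everything numerical here is an assumption for illustration: the TI values are the Wiener numbers of the unbranched alkanes from ethane to hexane, while the "property" values are synthetic, generated from P = 3 ln(TI) + 2, and are not experimental data. The helper name `fit_line` is hypothetical.

```python
from math import log

def fit_line(x, y):
    """Least-squares estimates of a and b in y = a * x + b."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b

# Wiener numbers of the chains C2..C6 (training sample).
ti = [1, 4, 10, 20, 35]
# SYNTHETIC property values, made up for the demonstration only.
prop = [3.0 * log(t) + 2.0 for t in ti]

# Model P = a * ln(TI) + b is linear in ln(TI), so the same routine applies.
a, b = fit_line([log(t) for t in ti], prop)

# Prediction for a compound outside the sample (W = 56 for the C7 chain).
predicted = a * log(56) + b
print(a, b, predicted)
```

With real data the fitted values would be compared with experiment before any prediction is trusted; for the linear model P = a(TI) + b the routine is called with the TI values themselves instead of their logarithms.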

Topological indices are also used in the construction of additive calculation and forecasting schemes. They can be used in the development of new drugs, in assessing the carcinogenic activity of certain chemicals, in predicting the relative stability of new (not yet synthesized) compounds, etc.

However, it should be remembered that the choice of TI is often arbitrary; the indices may fail to reflect important structural features of molecules or may duplicate information obtained from other indices, and the calculation schemes may lack a solid theoretical foundation and be difficult to interpret physicochemically.

The team of the Department of Physical Chemistry of Tver State University has for many years been conducting computational and theoretical research on the problem “Relationship of the properties of substances with the structure of molecules: mathematical (computer) modeling”. The focus is on the targeted search for new structures, on algorithms for solving a number of graph-theoretic and combinatorial problems that arise during the collection and processing of information about the structure and properties of substances, on the creation of expert information retrieval systems and databases, and on the development of quantitative methods of calculation and forecasting.

We constructed additive schemes and found analytical dependences of the form $P = f(\mathrm{TI})$ for a number of organic and other molecules. Using the obtained formulas, numerical calculations of the physicochemical properties of the compounds under consideration were performed.

Bibliographic link

Vinogradova M.G. GRAPH THEORY IN CHEMISTRY // International Journal of Applied and Fundamental Research. – 2010. – No. 12. – P. 140-142; URL: http://dev.applied-research.ru/ru/article/view?id=1031 (access date: 12/17/2019).

Over the past decades, the concepts of topology and graph theory have become widespread in theoretical chemistry. They are useful in searching for quantitative “structure-property” and “structure-activity” relationships, as well as in solving graph-theoretic and combinatorial-algebraic problems that arise during the collection, storage and processing of information on the structure and properties of substances.

To create automated software systems for the synthesis of optimal, highly reliable (including resource-saving) production facilities, oriented semantic graphs of the solution options for chemical-technological systems (CTS) are used along with the principles of artificial intelligence. These graphs, which in a particular case are trees, depict the procedures for generating a set of rational alternative CTS schemes (for example, 14 possible schemes when a five-component mixture of target products is separated by rectification) and the procedures for the ordered selection among them of the scheme that is optimal according to a certain criterion of system efficiency (see Optimization).
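The figure of 14 schemes for a five-component mixture can be reproduced with a short recursion. The sketch rests on assumptions the text does not spell out: the components are ordered by volatility, every column makes one sharp split into a lighter and a heavier group, and separation continues until each component is isolated. Under these assumptions a mixture of n components can first be cut at any of n - 1 points, and the two resulting groups are then separated independently. The function name is hypothetical.

```python
from functools import lru_cache
from math import comb

@lru_cache(maxsize=None)
def separation_schemes(n):
    """Number of sharp-split sequences isolating all n ordered components."""
    if n == 1:
        return 1  # a pure component needs no further separation
    # First cut leaves k light components and n - k heavy ones.
    return sum(separation_schemes(k) * separation_schemes(n - k)
               for k in range(1, n))

for n in range(2, 7):
    print(n, separation_schemes(n))
```

For n = 5 the recursion gives 14, in agreement with the text, and the values coincide with the closed form `comb(2 * (n - 1), n - 1) // n`. Each scheme corresponds to one tree in the graph of solution options.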

Graph theory is also used to develop algorithms for optimizing the operating schedules of multi-product flexible equipment, algorithms for optimizing the placement of equipment and the routing of pipeline systems, algorithms for the optimal control of chemical-technological processes and production facilities, for the network planning of their operation, etc.

V. V. Kafarov, V. P. Meshalkin.

- Specialty of the Higher Attestation Commission of the Russian Federation: 02.00.03

- Number of pages 410

Contents of the dissertation Doctor of Chemical Sciences Vonchev, Danail Georgiev

Half a century ago, Paul Dirac expressed the opinion that, in principle, all of chemistry is contained in the laws of quantum mechanics, but that in practical calculations insurmountable mathematical difficulties arise. Electronic computers have helped to narrow the gap between the possibilities of the quantum mechanical approach and its implementation. However, calculations for molecules with a large number of electrons are complex and insufficiently precise, and so far few molecular properties can be calculated in this way. On the other hand, organic chemistry has important structural problems that have not been fully resolved, above all the problem of the relationship between the structure and properties of molecules. In theoretical chemistry there is the question of a quantitative assessment of the main structural characteristics of molecules, their branching and cyclicity. This question is significant, since a quantitative analysis of the general patterns in the structure of branched and cyclic molecules can, to a large extent, be carried over to their properties. In this way it would be possible to predict the ordering of a group of isomeric compounds according to the values of properties such as stability, reactivity, and spectral and thermodynamic properties. This could facilitate the prediction of the properties of classes of compounds not yet synthesized and the search for structures with predetermined properties. Despite significant efforts, the question of the rational coding of chemical information for efficient storage and use by computer also remains open. An optimal solution to this issue would also help to improve the classification and nomenclature of both organic compounds and the mechanisms of chemical reactions. The theory of the periodic system of chemical elements likewise faces the question of a holistic and quantitative interpretation of the periodicity of the properties of chemical elements, based on quantities that reflect the electronic structure better than the atomic number of the element.

As a result, over the past decades, the development of new theoretical methods in chemistry, united under the name of mathematical chemistry, has been stimulated. The main place in it is occupied by topological methods, which reflect the most general structural and geometric properties of molecules. One of the branches of topology, graph theory, offers a mathematical language convenient for a chemist to describe a molecule, since structural formulas are essentially chemical graphs. The advantages that graph theory offers in chemical research are based on the possibility of direct application of its mathematical apparatus without the use of a computer, which is important for experimental chemists. Graph theory allows you to understand quite simply the structural characteristics of molecules. The results obtained are general and can be formulated in the form of theorems or rules and thus can find application for any similar chemical (and non-chemical) objects.

After the publication of the fundamental works of Shannon and Wiener on information theory and cybernetics, interest in information-theoretic research methods has grown continuously. The original meaning of the term “information” is associated with news, messages and their transmission. This concept quickly went beyond the limits of communication theory and cybernetics and penetrated into various sciences about living and inanimate nature, society and cognition. The development of an information-theoretic approach in science is a more complex process than the formal transfer of the cybernetic category of information to other areas of knowledge. The information approach is not simply a translation from less common languages into a metalanguage. It offers a different perspective on systems and phenomena and allows one to obtain new results. By expanding the connections between different scientific disciplines, this method makes it possible to find useful analogies and general patterns between them. As it develops, modern science strives for an ever greater degree of generalization, for unity. In this regard, information theory is one of the most promising areas.

An important place in this process is occupied by the application of information theory in chemistry and other natural sciences such as physics and biology. In these sciences, the methods of information theory are used to study and describe those properties of objects and processes that are associated with the structure, orderliness, and organization of systems. The usefulness of the information approach in chemistry lies primarily in the fact that it offers new possibilities for the quantitative analysis of various aspects of chemical structures: atoms, molecules, crystals, etc. In these cases, ideas about the “structural” information and “information content” of atoms and molecules are used.

In connection with the above, the main goal of the dissertation is to show the fruitfulness of the graph-theoretic and information-theoretic approach to structural problems in chemistry, from atoms and molecules to polymers and crystals. Achieving this goal involves the following separate stages:

1. Determination of a system of quantities (information and topological indices) for the quantitative characterization of atoms, molecules, polymers and crystals.

2. Development on this basis of a new, more general approach to the question of the correlation between their properties and their geometric and electronic structure. Prediction of the properties of some organic compounds, polymers and not yet synthesized transactinide elements.

Creation of methods for modeling crystal growth and crystal vacancies.

3. Generalized topological characterization of molecules by expressing the essence of their branching and cyclicity in a series of mathematically proven structural rules, and the study of the mapping of these rules to various molecular properties.

4. Creation of new, effective methods for coding chemical compounds and mechanisms of chemical reactions in connection with the improvement of their classification and nomenclature and especially in connection with the use of computers for processing chemical information.

CHAPTER 2. RESEARCH METHOD

2.1. INFORMATION-THEORETIC METHOD

2.1.1. Introduction

Information is one of the most fundamental concepts in modern science, a concept no less general than the concepts of matter and energy. This view finds justification in the very definitions of information. According to Wiener, “information is neither matter nor energy.”

Ashby views information as "a measure of the variety in a given system." According to Glushkov, “information is a measure of inhomogeneity in the distribution of space and time.” On this basis, there is a growing awareness that, in addition to their material and energetic nature, objects and phenomena in nature and technology also have informational properties. Some forecasts go further, predicting that the focus of scientific research will increasingly shift towards the informational nature of processes, which will constitute the main object of research in the 21st century. These forecasts are essentially based on the possibility of optimal control of systems and processes through information, which is precisely the main function of information in cybernetics. In the future, these ideas may lead to the creation of technologies in which every atom and molecule is controlled by information, a possibility that has so far been realized only in living nature.

The emergence of information theory is usually dated to 1948, when Claude Shannon published his seminal work. The idea of information as a quantity related to entropy, however, is much older. As early as 1894, Boltzmann established that any information obtained about a given system is associated with a decrease in the number of its possible states, and therefore an increase in entropy means a “loss of information.” In 1929 Szilard extended this idea to the general case of information in physics. Later, Brillouin generalized the ideas about the connection between entropy and information in his negentropy principle, in a form that also covers the informational side of phenomena. Questions about the connection between information theory and thermodynamics, and in particular about the relationship between entropy and information, still attract much attention (a detailed list of publications in this area is given in the review 58). Among the latest developments of the issue, Kobozev's work on the thermodynamics of thinking should be especially noted, in which the thesis about the anti-entropic nature of thinking processes is substantiated.

Having emerged as a “special theory of communication,” information theory quickly outgrew its original limits and found application in various fields of science and technology: chemistry, biology, medicine, linguistics, psychology, aesthetics, etc. The role of information was first recognized in biology. Important issues have been addressed there concerning the storage, processing and transmission of information in living organisms, including the coding of genetic information, assessment of the possibility of spontaneous generation of life on Earth, formulation of the basic laws of biological thermodynamics, analysis of bioenergetics issues, etc. The information content of objects was used as a quantitative criterion of evolution. The question of the informational nature of nutrition processes was also raised.

Information theory is still penetrating chemistry and physics only slowly, although in recent years certain progress has been made in this area. The question has been raised about the possible existence of an information balance of chemical reactions. The information capacity of bioorganic molecules has been assessed, a new classification of these compounds has been proposed on this basis, and the specificity of chemical reactions has been evaluated.

Levine, Bernstein and others applied information theory to molecular dynamics to describe the behavior of molecular systems that are far from equilibrium. The essence of this approach is the concept of "surprisal", a deviation from what is expected on the basis of the microcanonical distribution. Various applications have been proposed, including the study of the performance characteristics of lasers, the determination of the branching ratio of competing reaction paths (taking as the most probable the path that corresponds to the maximum of the Shannon function), etc.

Daudel and his colleagues proposed dividing the space occupied by a molecular system into a certain number of mutually exclusive subspaces called loges. The best loges, containing localized groups of electrons, are found by minimizing the information function. Sears et al. found a connection between quantum mechanical kinetic energies and information quantities. As a consequence of this result, the variational principle of quantum mechanics can be formulated as a principle of minimal information.

Kobozev and his colleagues linked the selectivity and activity of catalysts with their information content. They also formulated optimal information conditions for the characterization and prediction of catalytic properties. The formation and growth of crystals were considered as an information process. Rakov applied information analysis to the treatment of catalyst surfaces with various chemical agents.

In modern analytical chemistry there is a growing tendency to design experiments optimally, so as to obtain maximum information from a minimum number of experiments.

These new ideas are based on information theory, game theory and systems theory. Other authors have applied information theory to minimize error and analysis time, to achieve higher selectivity, to evaluate the performance of analytical methods, etc. Research of this kind also covers physical methods in analytical chemistry, including gas chromatography, atomic emission spectral analysis, etc.

Information-theoretical methods have also proven useful in geochemistry for characterizing the distribution of chemical compounds in geochemical systems170, for assessing the degree of complexity and for classifying these systems.

In engineering chemistry, through information analysis, problems of chemical technological systems can be solved, such as choosing optimal operating conditions, establishing control requirements, etc.101.

Examples of the successful application of information theory in chemistry indicate once again that systems in nature and technology also have an informational nature. They also show that the information approach acts as a universal language for describing systems and, in particular, chemical structures of any type, with which it associates a certain information function and a numerical measure. This expands the field of possible applications of information theory in chemistry.

The usefulness of the information approach in chemistry is primarily that it offers the possibility of quantitative analysis of various aspects of chemical structures. The degree of complexity of these structures, their organization and specificity can be compared on a single quantitative scale. This allows us to study some of the most general properties of chemical structures, such as their branching and cyclicity, to study and compare the degree of organization in various classes of chemical compounds, the specificity of biologically active substances and catalysts, and allows us to approach the question of the degree of similarity and difference between two chemical objects.

The information approach is well suited to solving particular classification problems. In these cases, it is possible to derive general information equations for the main groupings of classification objects (groups and periods in the Periodic Table of chemical elements, homologous series of chemical compounds, series of isomeric compounds, etc.).

The great discriminating ability of information methods in relation to complex structures (isomers, isotopes, etc.) can be used in the computational processing and storage of chemical information. These methods are useful not only for choosing between different structures, but also between alternative hypotheses and approximations, which is interesting for quantum chemistry. The ability to develop new hypotheses based on information theory, however, is more limited, since this theory describes the mutual relationship between variables, but does not describe the behavior of any of them.

The problem of the relationship between structure and properties is another area of successful application of the information-theoretic approach in chemistry. The effectiveness of this approach will be shown in the dissertation for qualitatively different structural levels in chemistry: electronic shells of atoms, molecules, polymers, crystals and even atomic nuclei. It can be implemented in both qualitative and quantitative aspects. In the first case, various structural rules can be defined on an information basis, reflecting the mutual influence of two or more structural factors. It is also possible to obtain quantitative correlations between information indices and properties. In principle, information indices provide better correlations than other indices, since they reflect the features of chemical structures more fully. Successful correlations are possible not only with quantities directly related to entropy, but also with quantities such as binding energy, whose connection with information is far from obvious. This includes properties not only of an individual molecule or atom, but also of their large aggregates, i.e. properties that depend on the interaction between molecules and atoms, and not just on their internal structure. In addition, processes in chemistry can also be the subject of information analysis based on changes in information indices during interactions.

Some limitations of the information approach should also be kept in mind. Although they are precise, quantitative measures of information are relative, not absolute. They are also statistical characteristics and relate to aggregates, not to their individual elements. Information indices can be defined for various properties of atoms and molecules, but the relationship between them is often complex and expressed in implicit form.

On the other hand, having multiple information indices for one structure can cause confusion. It should be remembered, however, that each of these indices is legitimate. The real question is which of these quantities are useful and to what extent.

In this chapter, information-theoretic indices characterizing the electronic structure of atoms are introduced for the first time, as well as new information indices for the symmetry, topology and electronic structure of molecules. The application of these structural characteristics is discussed in Chapter III, Sections IV.2 and V.1.

2.1.2. Necessary information from information theory

Information theory offers quantitative methods for studying the acquisition, storage, transmission, transformation and use of information. The main place in these methods is occupied by the quantitative measurement of information. The definition of the concept of quantity of information requires the rejection of widespread, but unclear ideas about information as a collection of facts, information, knowledge.

The concept of quantity of information is closely related to the concept of entropy as a measure of uncertainty. In 1928, Hartley characterized the uncertainty of an experiment with $n$ different outcomes by the number $\log n$. In Shannon's statistical information theory, published in 1948, the amount of information is defined through the concept of probability. This concept is used to describe a situation in which there is uncertainty associated with the choice of one or more elements (outcomes) from a certain set. Following Shannon, the measure of the uncertainty of outcome $X_i$ of experiment $X$, which has probability $p(X_i)$, is $-\log p(X_i)$. The measure of the average uncertainty of the complete experiment $X$ with possible outcomes $X_1, X_2, \ldots, X_n$, having probabilities $p(X_1), p(X_2), \ldots, p(X_n)$ respectively, is the quantity

$$H(X) = -\sum_{i=1}^{n} p(X_i) \log p(X_i) \tag{2.1}$$

In statistical information theory, $H(X)$ is called the entropy of the probability distribution. The probabilities, in the case of $n$ different outcomes, form a finite probabilistic scheme, i.e. they are non-negative and sum to one: $\sum_{i=1}^{n} p(X_i) = 1$.

The concept of probability can be defined in a more general way from the point of view of set theory. Let a finite set $A$ be partitioned into $m$ classes $X_1, X_2, \ldots, X_m$, which are disjoint sets, by some equivalence relation $X$. The set of equivalence classes

$$A/X = \{X_1, X_2, \ldots, X_m\} \tag{2.2}$$

is called the factor set of $A$ by $X$.

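The Kolmogorov probability function (probabilistic correspondence) $p$ is subject to three conditions: non-negativity, $p(X_i) \ge 0$; normalization, $p(A) = 1$; and additivity, $p(X_i \cup X_j) = p(X_i) + p(X_j)$ for disjoint $X_i$ and $X_j$.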

The sequence of numbers $p(X_1), p(X_2), \ldots, p(X_m)$ is called the distribution of the partition of $A$, and the Shannon function $H(X)$ from equation (2.1) expresses the entropy of the partition $X$.
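The entropy of a partition can be illustrated with a short sketch. It assumes base-two logarithms (equation (2.1) leaves the base open), and the function name `partition_entropy` and the example of ethanol atoms grouped by chemical element are hypothetical choices made only for illustration.

```python
import math
from collections import Counter

def partition_entropy(elements, key):
    """Entropy (in bits) of the partition of `elements` induced by `key`.

    Two elements are treated as equivalent when `key` maps them to the
    same value, so each distinct key value labels one equivalence class.
    """
    class_sizes = Counter(key(e) for e in elements)
    total = sum(class_sizes.values())
    # p(X_i) = |X_i| / |A|;  H(X) = -sum p(X_i) log2 p(X_i), cf. equation (2.1)
    return -sum((n / total) * math.log2(n / total) for n in class_sizes.values())

# Hypothetical example: the nine atoms of ethanol, C2H6O, partitioned by element.
ethanol_atoms = ["C"] * 2 + ["H"] * 6 + ["O"]
h = partition_entropy(ethanol_atoms, key=lambda atom: atom)
print(f"H = {h:.3f} bits per atom")
```

Any other equivalence relation (for example, topological equivalence of graph vertices) can be plugged in through `key` without changing the rest of the calculation.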

It should be borne in mind that the concept of entropy in information theory is more general than thermodynamic entropy. The latter, considered as a measure of disorder in atomic-molecular motion, is a special case of the more general concept of entropy as a measure of any disorder, uncertainty or diversity.

The amount of information about $X$ is expressed by the amount of uncertainty removed. The average entropy of a given event with many possible outcomes is then equal to the average amount of information required to select any outcome $X_i$ from the set $X_1, X_2, \ldots, X_n$, and is determined by Shannon's formula (equation 2.1):

$$I(X) = -K \sum_{i=1}^{n} p(X_i) \log p(X_i) = H(X) \tag{2.4}$$

Here $K$ is a positive constant that defines the units in which information is measured. Assuming $K = 1$ with decimal logarithms, entropy (and, correspondingly, information) is measured in decimal units. The most convenient system of measurement is based on binary units (bits). In this case $K = 1/\lg 2$, i.e. the logarithm in equation (2.4) is taken to base two, denoted for brevity by lb. One binary unit of information (1 bit) is the amount of information obtained when the result of a choice between two equally probable possibilities becomes known. When converting to units of entropy, the conversion factor is the Boltzmann constant ($1.38 \cdot 10^{-23}$ J/K) divided by lb e.
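The choice of units can be made concrete with a minimal sketch. It assumes the SI value of the Boltzmann constant and the standard relation $S = k \ln 2$ per bit; the function names are illustrative and do not come from the original text.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # SI value of the Boltzmann constant, J/K

def information(probabilities, base=2.0):
    """Shannon information -sum p log_base p; terms with p == 0 are skipped."""
    return -sum(p * math.log(p, base) for p in probabilities if p > 0)

def bits_to_entropy_units(bits):
    """Convert bits to thermodynamic entropy units (J/K): S = k * ln 2 * I."""
    return BOLTZMANN_J_PER_K * math.log(2) * bits

coin = [0.5, 0.5]  # a choice between two equally probable possibilities
print(information(coin, base=2))    # amount of information in binary units
print(information(coin, base=10))   # the same choice measured in decimal units
print(bits_to_entropy_units(1.0))   # entropy equivalent of one bit, in J/K
```

Changing `base` only rescales the result by a constant factor, which is exactly the role played by the constant K in the formula.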

It has been proven that the choice of a logarithmic function for the amount of information is not arbitrary: it is the only function that satisfies the conditions of additivity and non-negativity of information.

Both individual and average information are always non-negative. This property follows from the fact that a probability never exceeds one and the constant in equation (2.4) is always taken to be positive. Since $p(X_i) \le 1$, then

$$-\mathrm{lb}\, p(X_i) = H(X_i) \ge 0 \tag{2.5}$$

and this inequality remains valid after averaging.

The average amount of information for a given event (experiment) $X$ reaches a maximum for a uniform probability distribution $p(X_1) = p(X_2) = \ldots = p(X_n)$, i.e. at $p(X_i) = 1/n$ for any $i$:

$$I_{\max}(X) = \mathrm{lb}\, n \tag{2.6}$$
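The non-negativity and the maximum property can be checked numerically. The following is a minimal sketch with three hypothetical distributions over four outcomes; the helper name `shannon_bits` is an assumption made for the example.

```python
import math

def shannon_bits(probabilities):
    """Average information in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

n = 4
uniform = [1 / n] * n           # all outcomes equally probable
skewed = [0.7, 0.1, 0.1, 0.1]   # one outcome dominates
certain = [1.0, 0.0, 0.0, 0.0]  # no uncertainty at all

for name, dist in [("uniform", uniform), ("skewed", skewed), ("certain", certain)]:
    print(f"{name:8s} I = {shannon_bits(dist):.4f} bits "
          f"(upper bound lb n = {math.log2(n):.4f})")
```

Only the uniform distribution attains the bound lb n, which coincides with Hartley's measure for n equally probable outcomes.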

For a pair of dependent random events $X$ and $Y$, the average amount of information is also expressed by Shannon's formula:

$$I(XY) = -\sum_{i}\sum_{j} p(X_i, Y_j)\, \mathrm{lb}\, p(X_i, Y_j) \tag{2.7}$$

Equation (2.7) can be generalized to any finite set, regardless of the nature of its elements:

$$I(XY) = -\sum_{i}\sum_{j} p(X_i \cap Y_j)\, \mathrm{lb}\, p(X_i \cap Y_j) \tag{2.8}$$

where $A/X$ and $A/Y$ are two factor sets of $A$ with respect to two different equivalence relations $X$ and $Y$, and $A/XY$ is the factor set of the intersections of $X_i$ and $Y_j$.

Writing the joint probability in equation (2.7) as the product of the unconditional and conditional probabilities, $p(X_i, Y_j) = p(X_i)\, p(Y_j/X_i)$, and expressing the logarithm as a sum, one obtains the equation

$$I(XY) = I(X) + I(Y/X) \tag{2.9}$$

in which $I(Y/X)$ is the average amount of conditional information contained in $Y$ relative to $X$, given by the expression

$$I(Y/X) = -\sum_{i}\sum_{j} p(X_i, Y_j)\, \mathrm{lb}\, p(Y_j/X_i) \tag{2.10}$$

Defining the function

$$I(X, Y) = I(Y) - I(Y/X) \tag{2.11}$$

and substituting it into equation (2.9),

$$I(XY) = I(X) + I(Y) - I(X, Y) \tag{2.12}$$

it becomes clear that $I(X, Y)$ expresses the deviation of the information about the compound event $(X, Y)$ from the additivity of the information about the individual events (outcomes) $X$ and $Y$. Therefore, $I(X, Y)$ is a measure of the degree of statistical dependence (connection) between $X$ and $Y$. The equality

$$I(X, Y) = I(Y, X) \tag{2.13}$$

is also valid, which shows that the connection between $X$ and $Y$ is symmetric.

In the general case, the statistical connection between $X$ and $Y$ and the average amounts of unconditional information about $X$ or $Y$ satisfy the inequalities

$$I(X, Y) \le I(X), \qquad I(X, Y) \le I(Y) \tag{2.14}$$

Equality holds when the second term in equation (2.11) is zero, i.e. when to each $i$ there corresponds a $j$ for which $p(Y_j/X_i) = 1$.

If the quantities $X$ and $Y$ are independent, i.e. if $I(X, Y) = 0$ in equation (2.12), then

$$I(XY) = I(X) + I(Y) \tag{2.15}$$

This equation expresses the additivity of the amount of information and generalizes to $n$ independent random variables:

$$I(X_1, X_2, \ldots, X_n) = \sum_{i=1}^{n} I(X_i) \tag{2.16}$$
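The relations between joint, conditional and mutual information in equations (2.7) to (2.16) can be verified on a small numerical example. The sketch below uses a hypothetical 2 x 2 joint distribution; all variable names are illustrative assumptions.

```python
import math

def lb(x):
    """Binary logarithm, written lb as in the text."""
    return math.log2(x)

def entropy(ps):
    """Average information -sum p lb p over the non-zero probabilities."""
    return -sum(p * lb(p) for p in ps if p > 0)

# Hypothetical joint distribution p(X_i, Y_j): rows index X, columns index Y.
joint = [
    [0.30, 0.10],
    [0.05, 0.55],
]

p_x = [sum(row) for row in joint]        # marginal distribution of X
p_y = [sum(col) for col in zip(*joint)]  # marginal distribution of Y

i_x = entropy(p_x)
i_y = entropy(p_y)
i_xy = entropy([p for row in joint for p in row])  # joint information, (2.7)

# Conditional information (2.10), using p(Y_j/X_i) = p(X_i, Y_j) / p(X_i)
i_y_given_x = -sum(
    p * lb(p / p_x[i]) for i, row in enumerate(joint) for p in row if p > 0
)
i_x_given_y = -sum(
    p * lb(p / p_y[j]) for row in joint for j, p in enumerate(row) if p > 0
)

mutual_from_y = i_y - i_y_given_x  # definition (2.11)
mutual_from_x = i_x - i_x_given_y  # the same quantity with X and Y swapped, (2.13)

# For an independent pair built from the same marginals, information is additive.
independent = [[px * py for py in p_y] for px in p_x]
i_indep = entropy([p for row in independent for p in row])

print(f"I(XY) = {i_xy:.4f}, I(X) + I(Y/X) = {i_x + i_y_given_x:.4f}")
print(f"I(X,Y) = {mutual_from_y:.4f}, I(Y,X) = {mutual_from_x:.4f}")
print(f"independent case: I(XY) = {i_indep:.4f}, I(X) + I(Y) = {i_x + i_y:.4f}")
```

The printed pairs agree up to floating-point rounding, which is a numerical check of equations (2.9), (2.13) and (2.15) for this particular distribution, not a proof.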

Non-probabilistic approaches to the quantitative definition of information are also known. Ingarden and Urbanik proposed an axiomatic definition of Shannon information without probabilities, in the form of a function on finite Boolean rings. Of considerable interest are the epsilon-entropy proposed by Kolmogorov (the combinatorial approach) and especially the algorithmic definition of the amount of information. According to Kolmogorov, the amount of information contained in one object (set) relative to another object (set) is considered as the minimum length of a program, written as a sequence of zeros and ones, that allows a one-to-one transformation of the first object into the second:

$$H(X/Y) = \min l(P) \tag{2.17}$$

Kolmogorov's algorithmic approach offers new logical foundations of information theory based on the concepts of complexity and sequence, and the concepts of “entropy” and “amount of information” become applicable to individual objects.
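The minimal program length is not computable in general, so it cannot be evaluated directly. As a rough illustration only, the sketch below substitutes a different technique: the length of a zlib-compressed byte string, which is a crude, compressor-dependent stand-in for algorithmic information. The function name and the two test sequences are assumptions made for the example.

```python
import random
import zlib

def compressed_length_bits(data: bytes) -> int:
    """Length in bits of the zlib-compressed form of `data`.

    This is only a compressor-dependent proxy; it is not the minimal
    program length of the algorithmic definition.
    """
    return 8 * len(zlib.compress(data, 9))

regular = b"CH2" * 400      # highly repetitive sequence, 1200 bytes
rng = random.Random(0)      # fixed seed so the example is reproducible
irregular = bytes(rng.getrandbits(8) for _ in range(1200))

print("regular:  ", compressed_length_bits(regular), "bits")
print("irregular:", compressed_length_bits(irregular), "bits")
```

The repetitive sequence admits a much shorter description than the irregular one of the same length, which is the qualitative point of assigning an amount of information to an individual object.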

Non-probabilistic methods in information theory extend the content of the concept of the amount of information from the amount of reduced uncertainty to the amount of reduced uniformity, or to the amount of diversity in accordance with Ashby's interpretation. Any set of probabilities normalized to unity can be regarded as corresponding to a certain set of elements possessing diversity. Diversity is understood as a characteristic of the elements of a set that consists in their difference or non-coincidence with respect to some equivalence relation. This can be a collection of various elements, connections, relationships or properties of objects. With this approach the smallest unit of information, the bit, expresses the minimum difference, i.e. the difference between two objects that are not identical and differ in some property.

In this respect, information-theoretic methods are applicable to the determination of the so-called structural information, i.e. the amount of information contained in the structure of a given system. Here, structure means any finite set whose elements are distributed among subsets (equivalence classes) according to a certain equivalence relation.

Let this structure contain $N$ elements, distributed according to some equivalence criterion into subsets of equivalent elements with $N_1, N_2, \ldots, N_n$ elements. This distribution corresponds to a finite probabilistic scheme with probabilities

$$p_1, p_2, \ldots, p_n, \qquad p_i = N_i/N \tag{2.18}$$

where $\sum_i N_i = N$ and $p_i$ is the probability that one (randomly) selected element falls into the $i$-th subset, which has $N_i$ elements. The entropy $H$ of the probability distribution of the elements of this structure, determined by equation (2.4), can be considered as a measure of the average amount of information, $\bar{I}$, contained in one element of the structure:

$$\bar{I} = -\sum_{i=1}^{n} p_i\, \mathrm{lb}\, p_i, \quad \text{bits per element} \tag{2.19}$$

The total information content of the structure is given by the equation derived from (2.19):

$$I = N\bar{I} = N\, \mathrm{lb}\, N - \sum_{i=1}^{n} N_i\, \mathrm{lb}\, N_i \tag{2.20}$$

There is no consensus in the literature on how to name the quantities defined by equations (2.19) and (2.20). Some authors prefer to call them the mean and total information content, respectively. Thus, according to Mowshowitz, $I$ is not a measure of entropy in the sense in which it is used in information theory, nor does it express the average uncertainty of a structure consisting of $N$ elements in the ensemble of all possible structures having the same number of elements. $I$ is rather the information content of the structure under consideration with respect to the system of transformations that leave the system invariant. According to Reshr, $I$ from equation (2.4) measures the amount of information after the experiment, whereas before it $H(X)$ is a measure of the entropy associated with the uncertainty of the experiment. In our opinion, the "experiment" that reduces the uncertainty of chemical structures (atoms, molecules, etc.) is the process of forming these structures from their unbound elements. Here the information is in bound form: it is contained in the structure, and therefore the term "information content" of the structure is often used.

The concept of structural information based on the above interpretation of equations (2.19) and (2.20) fits well with Ashby's ideas about the amount of information as the amount of variety. When a system consists of identical elements, there is no diversity in it. In this case, in equations (2.19) and (2.20), $\bar{I} = I = 0$.

With the maximum variety of elements in the structure, $N_i = 1$, and the information content of the structure is maximal:

$$I_{\max} = N\, \mathrm{lb}\, N, \qquad \bar{I}_{\max} = \mathrm{lb}\, N$$
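The mean and total information content of a structure can be computed directly from the sizes of its equivalence classes. The following minimal sketch assumes the standard forms of the two quantities, restated in the docstring; the function name and the example class sizes are hypothetical.

```python
import math

def structural_information(class_sizes):
    """Return (mean, total) information content in bits for a non-empty structure.

    `class_sizes` lists the numbers N_i of elements in each equivalence class.
      mean  = -sum (N_i / N) lb (N_i / N)     bits per element
      total = N lb N - sum N_i lb N_i         bits
    """
    n = sum(class_sizes)
    mean = -sum((k / n) * math.log2(k / n) for k in class_sizes if k > 0)
    total = n * math.log2(n) - sum(k * math.log2(k) for k in class_sizes if k > 0)
    return mean, total

# Eight elements distributed in three different ways.
for sizes in ([8], [4, 4], [1] * 8):
    mean, total = structural_information(sizes)
    print(f"classes {sizes}: mean = {mean:.3f} bits/element, total = {total:.3f} bits")
```

A single class of identical elements gives zero information, while eight one-element classes give the maximum values lb 8 = 3 bits per element and 8 lb 8 = 24 bits in total.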

2.1.3. Information-theoretic indices for the characteristics of the electronic structure of atoms of chemical elements
