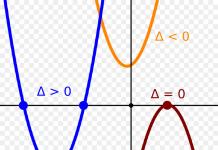

If we choose any two mutually perpendicular vectors $\mathbf{e}_1$ and $\mathbf{e}_2$ of unit length in a plane (Fig. 7), then an arbitrary vector $\mathbf{a}$ in the same plane can be expanded in the directions of these two vectors, i.e., represented in the form

$$\mathbf{a} = a_1\mathbf{e}_1 + a_2\mathbf{e}_2,$$

where $a_1$ and $a_2$ are numbers equal to the projections of the vector $\mathbf{a}$ onto the directions of the axes $\mathbf{e}_1$ and $\mathbf{e}_2$. The projection onto an axis equals the length of the vector times the cosine of its angle with the axis. Recalling the definition of the scalar product, we can therefore write

$$a_1 = (\mathbf{a}, \mathbf{e}_1), \qquad a_2 = (\mathbf{a}, \mathbf{e}_2).$$

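Similarly, if in three-dimensional space we choose any three mutually perpendicular vectors $\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3$ of unit length, then an arbitrary vector $\mathbf{a}$ in this space can be represented as

$$\mathbf{a} = a_1\mathbf{e}_1 + a_2\mathbf{e}_2 + a_3\mathbf{e}_3, \qquad a_k = (\mathbf{a}, \mathbf{e}_k).$$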

In a Hilbert space one can likewise consider systems of pairwise orthogonal vectors of this space, i.e., functions $\varphi_1(t), \varphi_2(t), \ldots, \varphi_n(t), \ldots$

Such systems of functions are called orthogonal systems of functions and play an important role in analysis. They arise in a wide variety of problems: mathematical physics, integral equations, approximate computation, the theory of functions of a real variable, etc. The ordering and unification of concepts related to such systems was one of the incentives that led, at the beginning of the 20th century, to the creation of the general concept of Hilbert space.

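Let us give precise definitions. A system of functions

$$\varphi_1(t), \varphi_2(t), \ldots, \varphi_n(t), \ldots$$

is called orthogonal if any two functions of this system are orthogonal to each other, i.e., if

$$\int_a^b \varphi_i(t)\,\varphi_k(t)\,dt = 0 \quad (i \neq k). \tag{13}$$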

In three-dimensional space we required the vectors of the system to have length one. Recalling the definition of vector length, we see that in a Hilbert space this requirement is written as follows:

$$\int_a^b \varphi_k^2(t)\,dt = 1. \tag{14}$$

A system of functions that satisfies requirements (13) and (14) is called orthogonal and normalized.

Let us give examples of such systems of functions.

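1. On the interval $[-\pi, \pi]$, consider the sequence of functions

$$1, \ \cos t, \ \sin t, \ \cos 2t, \ \sin 2t, \ \ldots, \ \cos nt, \ \sin nt, \ \ldots$$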

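Every two functions from this sequence are orthogonal to each other, as can be verified by simply computing the corresponding integrals. The square of the length of a vector in a Hilbert space is the integral of the square of the function. Thus, the squared lengths of the vectors of the sequence are the integrals

$$\int_{-\pi}^{\pi} dt = 2\pi, \qquad \int_{-\pi}^{\pi} \cos^2 nt\,dt = \pi, \qquad \int_{-\pi}^{\pi} \sin^2 nt\,dt = \pi,$$

i.e., our sequence of vectors is orthogonal but not normalized. The first vector of the sequence has length $\sqrt{2\pi}$, and all the rest have length $\sqrt{\pi}$. Dividing each vector by its length, we obtain an orthogonal and normalized system of trigonometric functions

$$\frac{1}{\sqrt{2\pi}}, \ \frac{\cos t}{\sqrt{\pi}}, \ \frac{\sin t}{\sqrt{\pi}}, \ \ldots, \ \frac{\cos nt}{\sqrt{\pi}}, \ \frac{\sin nt}{\sqrt{\pi}}, \ \ldots$$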
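The orthonormality relations (13) and (14) for this system can be spot-checked numerically. The sketch below is an illustration only. It approximates the scalar product $\int_{-\pi}^{\pi} f(t)\,g(t)\,dt$ with a midpoint rule, an assumed discretization that is very accurate here because the integrands are trigonometric polynomials over a full period. It then builds the matrix of pairwise scalar products for the first seven functions, which should come out as the identity matrix up to rounding.

```python
import math

def inner(f, g, a=-math.pi, b=math.pi, n=4000):
    """Approximate the scalar product (f, g) = integral of f(t) g(t) over [a, b]
    with the composite midpoint rule on n subintervals."""
    h = (b - a) / n
    total = 0.0
    for i in range(n):
        t = a + (i + 0.5) * h
        total += f(t) * g(t)
    return h * total

def normalized_trig_system(N):
    """1/sqrt(2*pi), then cos(kt)/sqrt(pi), sin(kt)/sqrt(pi) for k = 1..N."""
    funcs = [lambda t: 1.0 / math.sqrt(2.0 * math.pi)]
    for k in range(1, N + 1):
        # k=k binds the current k; a plain closure would see only the last value
        funcs.append(lambda t, k=k: math.cos(k * t) / math.sqrt(math.pi))
        funcs.append(lambda t, k=k: math.sin(k * t) / math.sqrt(math.pi))
    return funcs

phi = normalized_trig_system(3)
gram = [[inner(f, g) for g in phi] for f in phi]
for row in gram:
    print(" ".join(f"{x:7.4f}" for x in row))
```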

This system is historically one of the first and most important examples of orthogonal systems. It arose in the works of Euler, D. Bernoulli, and d'Alembert in connection with the problem of string vibrations. Its study played a significant role in the development of all of analysis.

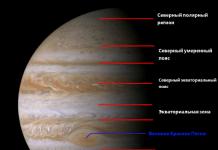

The appearance of an orthogonal system of trigonometric functions in connection with the problem of string vibrations is not accidental. Each problem about small oscillations of a medium leads to a certain system of orthogonal functions that describe the so-called natural oscillations of a given system (see § 4). For example, in connection with the problem of oscillations of a sphere, so-called spherical functions appear, in connection with the problem of oscillations of a round membrane or cylinder, so-called cylindrical functions appear, etc.

2. One can also give an example of an orthogonal system of functions each of which is a polynomial. Such an example is the sequence of Legendre polynomials

$$P_0(x) = 1, \qquad P_n(x) = \frac{1}{2^n n!}\,\frac{d^n}{dx^n}(x^2 - 1)^n \quad (n = 1, 2, \ldots),$$

i.e., $P_n(x)$ is (up to a constant factor) the derivative of order $n$ of $(x^2 - 1)^n$. Let us write down the first few polynomials of this sequence:

$$P_0(x) = 1, \quad P_1(x) = x, \quad P_2(x) = \tfrac{1}{2}(3x^2 - 1), \quad P_3(x) = \tfrac{1}{2}(5x^3 - 3x).$$

Clearly, $P_n(x)$ is in general a polynomial of degree $n$. We leave it to the reader to verify that these polynomials form an orthogonal sequence on the interval $[-1, 1]$.
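The reader's exercise can be checked by exact computation. The sketch below uses pure Python and represents polynomials as coefficient lists, an illustrative choice. It builds $P_n$ from the formula above with exact rational arithmetic and integrates products of polynomials over $[-1, 1]$ exactly. Off-diagonal integrals come out as exactly zero. Diagonal ones equal $2/(2n+1)$, the standard value for this normalization.

```python
from fractions import Fraction
from math import comb, factorial

def legendre(n):
    """Coefficients (constant term first) of P_n(x) = 1/(2^n n!) * d^n/dx^n (x^2 - 1)^n."""
    # Expand (x^2 - 1)^n = sum_k C(n, k) x^(2k) (-1)^(n-k)
    coeffs = [Fraction(0)] * (2 * n + 1)
    for k in range(n + 1):
        coeffs[2 * k] = Fraction(comb(n, k) * (-1) ** (n - k))
    # Differentiate n times: d/dx sum c_i x^i = sum i c_i x^(i-1)
    for _ in range(n):
        coeffs = [i * coeffs[i] for i in range(1, len(coeffs))]
    scale = Fraction(1, 2 ** n * factorial(n))
    return [c * scale for c in coeffs]

def multiply(p, q):
    """Product of two polynomials given as coefficient lists."""
    r = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            r[i + j] += a * b
    return r

def integrate_sym(p):
    """Exact integral over [-1, 1]: odd powers vanish, x^k gives 2/(k+1) for even k."""
    return sum((c * Fraction(2, k + 1) for k, c in enumerate(p) if k % 2 == 0), Fraction(0))

P = [legendre(n) for n in range(6)]
print([str(c) for c in P[2]], [str(c) for c in P[3]])
for m in range(6):
    print([str(integrate_sym(multiply(P[m], P[n]))) for n in range(6)])
```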

The general theory of orthogonal polynomials (the so-called orthogonal polynomials with weight) was developed by the remarkable Russian mathematician P. L. Chebyshev in the second half of the 19th century.

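Expansion in orthogonal systems of functions. In three-dimensional space, every vector $\mathbf{a}$ can be represented

$$\mathbf{a} = a_1\mathbf{e}_1 + a_2\mathbf{e}_2 + a_3\mathbf{e}_3$$

as a linear combination of three pairwise orthogonal vectors $\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3$ of unit length. Likewise, in a function space there arises the problem of expanding an arbitrary function $f(t)$ into a series in an orthogonal and normalized system of functions, i.e., of representing the function in the form

$$f(t) = a_1\varphi_1(t) + a_2\varphi_2(t) + \cdots + a_n\varphi_n(t) + \cdots. \tag{15}$$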

Here, the convergence of series (15) to the function $f(t)$ is understood in the sense of the distance between elements of the Hilbert space. This means that the root mean square deviation of the partial sum of the series from the function $f(t)$ tends to zero as $n \to \infty$, i.e.,

$$\lim_{n\to\infty} \int_a^b \Big[f(t) - \sum_{k=1}^{n} a_k\varphi_k(t)\Big]^2 dt = 0. \tag{16}$$

This convergence is usually called "convergence in the mean."

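Expansions in certain systems of orthogonal functions are common in analysis and are an important method for solving problems of mathematical physics. For example, suppose the orthogonal system is the system of trigonometric functions on the interval $[-\pi, \pi]$:

$$\frac{1}{\sqrt{2\pi}}, \ \frac{\cos t}{\sqrt{\pi}}, \ \frac{\sin t}{\sqrt{\pi}}, \ \ldots, \ \frac{\cos nt}{\sqrt{\pi}}, \ \frac{\sin nt}{\sqrt{\pi}}, \ \ldots$$

Then such an expansion is the classical expansion of a function in a trigonometric series

$$f(t) = \frac{a_0}{2} + \sum_{n=1}^{\infty} (a_n \cos nt + b_n \sin nt).$$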

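Let us assume that expansion (15) is possible for any function $f(t)$ of the Hilbert space, and find the coefficients of this expansion. To do this, we take the scalar product of both sides of the equality with the same function $\varphi_k(t)$ of our system. We obtain

$$(f, \varphi_k) = a_1(\varphi_1, \varphi_k) + a_2(\varphi_2, \varphi_k) + \cdots + a_n(\varphi_n, \varphi_k) + \cdots$$

Since $(\varphi_i, \varphi_k) = 0$ for $i \neq k$ and $(\varphi_k, \varphi_k) = 1$, this determines the coefficient $a_k$:

$$a_k = (f, \varphi_k). \tag{17}$$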

We see that, as in ordinary three-dimensional space (see the beginning of this section), the coefficients $a_k$ are equal to the projections of the vector $f$ onto the directions of the vectors $\varphi_k$.

Recalling the definition of the scalar product, we find that the coefficients of the expansion of a function $f(t)$ in an orthogonal and normalized system of functions $\varphi_1(t), \varphi_2(t), \ldots$ are given by

$$a_k = \int_a^b f(t)\,\varphi_k(t)\,dt. \tag{18}$$

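As an example, consider the orthogonal normalized trigonometric system of functions given above. By (18), the coefficients of $f(t)$ with respect to its functions are

$$c_0 = \frac{1}{\sqrt{2\pi}}\int_{-\pi}^{\pi} f(t)\,dt, \qquad c_n' = \frac{1}{\sqrt{\pi}}\int_{-\pi}^{\pi} f(t)\cos nt\,dt, \qquad c_n'' = \frac{1}{\sqrt{\pi}}\int_{-\pi}^{\pi} f(t)\sin nt\,dt.$$

In the notation of the classical trigonometric series, this gives

$$a_n = \frac{1}{\pi}\int_{-\pi}^{\pi} f(t)\cos nt\,dt \quad (n = 0, 1, 2, \ldots), \qquad b_n = \frac{1}{\pi}\int_{-\pi}^{\pi} f(t)\sin nt\,dt \quad (n = 1, 2, \ldots).$$

We have obtained formulas for the coefficients of the expansion of a function into a trigonometric series, assuming, of course, that this expansion is possible.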
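As a numerical illustration of these formulas, the sketch below computes $a_n$ and $b_n$ for the test function $f(t) = t$, an arbitrary choice, using a midpoint rule. For this odd function all $a_n$ vanish. Integration by parts gives $b_n = 2(-1)^{n+1}/n$, which the numerical values should reproduce.

```python
import math

def midpoint(g, a, b, n=20000):
    """Composite midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))

def fourier_coefficients(f, N):
    """Classical trigonometric-series coefficients a_0..a_N and b_1..b_N of f on [-pi, pi]."""
    a = [midpoint(lambda t: f(t) * math.cos(n * t), -math.pi, math.pi) / math.pi
         for n in range(N + 1)]
    b = [midpoint(lambda t: f(t) * math.sin(n * t), -math.pi, math.pi) / math.pi
         for n in range(1, N + 1)]
    return a, b

a, b = fourier_coefficients(lambda t: t, 5)
for n in range(1, 6):
    # numerical a_n, numerical b_n, closed-form b_n
    print(n, round(a[n], 8), round(b[n - 1], 6), 2 * (-1) ** (n + 1) / n)
```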

We have established the form (18) of the coefficients of the expansion of a function in an orthogonal system, assuming that such an expansion exists. However, an infinite orthogonal system of functions may not be rich enough for every function of the Hilbert space to be expandable in it. For such an expansion to be possible, the orthogonal system must satisfy an additional condition, the so-called completeness condition.

An orthogonal system of functions is called complete if no function that is not identically zero can be added to it while being orthogonal to all functions of the system.

It is easy to give an example of an incomplete orthogonal system. Take some orthogonal system, for example the same system of trigonometric functions, and remove one of its functions, say $\frac{\cos t}{\sqrt{\pi}}$. The remaining infinite system of functions

$$\frac{1}{\sqrt{2\pi}}, \ \frac{\sin t}{\sqrt{\pi}}, \ \frac{\cos 2t}{\sqrt{\pi}}, \ \frac{\sin 2t}{\sqrt{\pi}}, \ \ldots$$

is still orthogonal. Of course, it is not complete, since the function we removed is orthogonal to all functions of the system.

If a system of functions is not complete, then not every function of the Hilbert space can be expanded in it. Indeed, suppose we try to expand in such a system a nonzero function that is orthogonal to all functions of the system. By formulas (18), all the coefficients will be zero, while the function itself is not zero.

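The following theorem holds: if a complete orthogonal and normalized system of functions $\varphi_1(t), \varphi_2(t), \ldots$ is given in a Hilbert space, then any function $f(t)$ can be expanded into a series in the functions of this system:

$$f(t) = a_1\varphi_1(t) + a_2\varphi_2(t) + \cdots + a_n\varphi_n(t) + \cdots$$

The expansion coefficients $a_k$ are equal to the projections of the vector $f$ onto the elements of the orthogonal normalized system:

$$a_k = (f, \varphi_k) = \int_a^b f(t)\,\varphi_k(t)\,dt.$$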

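The Pythagorean theorem in Hilbert space (§ 2) yields an interesting relationship between the coefficients $a_k$ and the function $f(t)$. Let us denote by $R_n(t)$ the difference between $f(t)$ and the sum of the first $n$ terms of its series, i.e.,

$$R_n(t) = f(t) - \big[a_1\varphi_1(t) + a_2\varphi_2(t) + \cdots + a_n\varphi_n(t)\big].$$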
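By (17), the scalar product of $R_n$ with each $\varphi_k$, $k \le n$, is $a_k - a_k = 0$, so $R_n$ is orthogonal to the partial sum. The Pythagorean theorem then gives $\|f\|^2 = a_1^2 + \cdots + a_n^2 + \|R_n\|^2$. The sketch below checks this identity numerically for an assumed test function $f(t) = t$ and the first nine functions of the normalized trigonometric system. The scalar product is again approximated by a midpoint rule.

```python
import math

def inner(f, g, n=20000):
    """Midpoint-rule approximation of the scalar product on [-pi, pi]."""
    a, b = -math.pi, math.pi
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) * g(a + (i + 0.5) * h) for i in range(n))

# First 2N + 1 functions of the normalized trigonometric system
N = 4
phi = [lambda t: 1.0 / math.sqrt(2.0 * math.pi)]
for k in range(1, N + 1):
    phi.append(lambda t, k=k: math.cos(k * t) / math.sqrt(math.pi))
    phi.append(lambda t, k=k: math.sin(k * t) / math.sqrt(math.pi))

def f(t):
    return t

coeffs = [inner(f, p) for p in phi]              # a_k = (f, phi_k)

def remainder(t):
    """R_n(t) = f(t) - (a_1 phi_1(t) + ... + a_n phi_n(t))."""
    return f(t) - sum(c * p(t) for c, p in zip(coeffs, phi))

norm_f2 = inner(f, f)
norm_R2 = inner(remainder, remainder)
sum_a2 = sum(c * c for c in coeffs)
print(f"||f||^2 = {norm_f2:.6f}, sum a_k^2 + ||R_n||^2 = {sum_a2 + norm_R2:.6f}")
```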

What are we talking about?

The appearance of a post on Habr about the Madgwick filter was, in its own way, a symbolic event. Apparently, the general fascination with drones has revived interest in estimating body orientation from inertial measurements. At the same time, traditional Kalman-filter methods no longer satisfy the public. Either their computational requirements are too high for drones, or their parameter tuning is complex and unintuitive.

The post was accompanied by a very compact and efficient implementation of the filter in C. However, judging by the comments, the physical meaning of this code, and of the whole article, remained vague for some readers. Well, let's face it: the Madgwick filter is the most intricate member of a group of filters built on generally very simple and elegant principles. I will discuss these principles in my post. There will be no code here. My post is not about a specific implementation of an orientation estimation algorithm. It is an invitation to invent your own variations on a given theme, and there can be many of them.

Representing orientation

Let's recall the basics. To estimate the orientation of a body in space, you first choose parameters that together uniquely determine this orientation. Essentially, they describe the orientation of the body-fixed coordinate system relative to a conventionally fixed system, for example the NED (North, East, Down) geographic system. Next, you write kinematic equations, i.e., express the rate of change of these parameters through the angular velocity from the gyroscopes. Finally, vector measurements from accelerometers, magnetometers, etc. must be incorporated into the calculation. Here are the most common ways to represent orientation:

Euler angles: roll ($\phi$), pitch ($\theta$), heading ($\psi$). This is the most intuitive and most concise set of orientation parameters: the number of parameters exactly equals the number of rotational degrees of freedom. Euler's kinematic equations can be written for these angles. They are very popular in theoretical mechanics but of little use in navigation. Firstly, knowing the angles does not let you directly convert the components of a vector from the body coordinate system to the geographic one or vice versa. Secondly, at a pitch of ±90 degrees the kinematic equations degenerate, and roll and heading become undefined.

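Rotation matrix: a 3×3 matrix $C$ by which any vector in the body coordinate system, $\mathbf{v}^b$, must be multiplied to obtain the same vector in the geographic (NED) system: $\mathbf{v}^n = C\,\mathbf{v}^b$. The matrix is always orthogonal, i.e., $C^{-1} = C^{\mathsf T}$. Its kinematic equation has the form $\dot C = C\,\Omega$.

Here $\Omega$ is the matrix of the angular velocity components $\omega_x, \omega_y, \omega_z$ measured by the gyroscopes in the body coordinate system:

$$\Omega = \begin{pmatrix} 0 & -\omega_z & \omega_y \\ \omega_z & 0 & -\omega_x \\ -\omega_y & \omega_x & 0 \end{pmatrix}.$$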

The rotation matrix is a little less intuitive than Euler angles. Unlike them, however, it transforms vectors directly and remains meaningful in every angular position. Computationally, its main drawback is redundancy: three degrees of freedom require nine parameters, all of which must be updated through the kinematic equation. Exploiting the orthogonality of the matrix eases the problem somewhat.

Rotation quaternion: a radical but very unintuitive remedy for redundancy and degeneracy. It is a four-component object: not a number, not a vector, not a matrix. A quaternion can be viewed in two ways. Firstly, as a formal sum of a scalar and a vector, $q = q_0 + q_1\mathbf{i} + q_2\mathbf{j} + q_3\mathbf{k}$, where $\mathbf{i}, \mathbf{j}, \mathbf{k}$ are the unit vectors of the axes (which, of course, sounds absurd). Secondly, as a generalization of complex numbers with three different imaginary units $i$, $j$, $k$ instead of one (which sounds no less absurd).

How is a quaternion related to rotation? Through Euler's theorem: a body can always be brought from one given orientation to another by a single finite rotation through some angle $\vartheta$ about some axis with unit direction vector $\mathbf{u}$. This angle and axis can be combined into a quaternion: $q = \cos\frac{\vartheta}{2} + \mathbf{u}\sin\frac{\vartheta}{2}$. Like a matrix, a quaternion directly transforms any vector from one coordinate system to another: $\mathbf{v}^n = q \circ \mathbf{v}^b \circ \tilde q$, where $\tilde q$ is the conjugate quaternion. The quaternion representation also suffers from redundancy, but much less than the matrix one: there is only one extra parameter.

A detailed review of quaternions has already been published on Habr; it focused on geometry and 3D graphics. We also need kinematics, because the rate of change of the quaternion must be related to the measured angular velocity. The corresponding kinematic equation is $\dot q = \frac{1}{2}\,q \circ \boldsymbol{\omega}$, where the vector $\boldsymbol{\omega}$ is treated as a quaternion with zero scalar part.
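To make the quaternion formulas concrete, here is a minimal sketch with quaternions stored as plain `(w, x, y, z)` tuples, a representation chosen for illustration. It builds $q = \cos\frac{\vartheta}{2} + \mathbf{u}\sin\frac{\vartheta}{2}$ and rotates a vector as $q \circ \mathbf{v} \circ \tilde q$. It also integrates the kinematic equation $\dot q = \frac{1}{2} q \circ \boldsymbol{\omega}$ with simple Euler steps followed by renormalization, for an assumed constant angular velocity about the body $z$ axis. The result should match the single finite rotation predicted by Euler's theorem.

```python
import math

def qmul(p, q):
    """Quaternion (Hamilton) product p o q; quaternions are (w, x, y, z) tuples."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw * qw - px * qx - py * qy - pz * qz,
            pw * qx + px * qw + py * qz - pz * qy,
            pw * qy - px * qz + py * qw + pz * qx,
            pw * qz + px * qy - py * qx + pz * qw)

def qconj(q):
    """Conjugate quaternion q~ (the inverse of a unit quaternion)."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def normalize(q):
    n = math.sqrt(sum(c * c for c in q))
    return tuple(c / n for c in q)

def from_axis_angle(axis, angle):
    """q = cos(angle/2) + u sin(angle/2) for a unit axis u."""
    s = math.sin(angle / 2.0)
    return (math.cos(angle / 2.0), axis[0] * s, axis[1] * s, axis[2] * s)

def rotate(q, v):
    """v_n = q o v_b o q~, with v treated as a quaternion with zero scalar part."""
    return qmul(qmul(q, (0.0, *v)), qconj(q))[1:]

# A 90-degree rotation about z takes the x axis into the y axis
q90 = from_axis_angle((0.0, 0.0, 1.0), math.pi / 2.0)
print(rotate(q90, (1.0, 0.0, 0.0)))

# Euler integration of dq/dt = 1/2 q o omega for an assumed constant body rate
omega = (0.0, 0.0, 0.5)            # rad/s about the body z axis
q, dt = (1.0, 0.0, 0.0, 0.0), 0.001
for _ in range(2000):              # 2 s, so the total rotation angle is 1 rad
    dq = qmul(q, (0.0, *omega))
    q = normalize(tuple(qi + 0.5 * dqi * dt for qi, dqi in zip(q, dq)))
print(q, from_axis_angle((0.0, 0.0, 1.0), 1.0))
```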

Filter schemes

The most naive approach to computing orientation is to take a kinematic equation and update whatever set of parameters we like according to it. For example, with a rotation matrix we can write a loop with something like `C += C * Omega * dt`. The result will be disappointing. Gyroscopes, especially MEMS ones, have large and unstable zero offsets. As a result, even at complete rest, the computed orientation accumulates an ever-growing error (drift). All the tricks invented by Mahony, Madgwick and many others, including myself, compensate for this drift using measurements from accelerometers, magnetometers, GNSS receivers, speed logs, etc. This is how a whole family of orientation filters was born, all based on one simple principle.
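The naive loop `C += C * Omega * dt` can be sketched in a few lines. The numbers are assumptions for illustration. The body is at rest, level and facing north. The gyroscope has a constant zero offset of 0.01 rad/s about the body $x$ axis, so its output is pure offset. After 100 s the computed roll error reaches about 1 rad (57°) and keeps growing linearly. The explicit Euler step also slowly breaks the orthogonality of $C$.

```python
import math

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def skew(w):
    """Skew-symmetric matrix Omega with Omega v = w x v."""
    x, y, z = w
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]

bias = (0.01, 0.0, 0.0)    # assumed gyro zero offset, rad/s; at rest it is all the gyro reads
dt, steps = 0.01, 10000    # 100 s at 100 Hz
C = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]   # true orientation: identity
for _ in range(steps):
    dC = matmul(C, skew(bias))                                          # C' = C Omega
    C = [[C[i][j] + dC[i][j] * dt for j in range(3)] for i in range(3)]  # C += C*Omega*dt

roll_error = math.atan2(C[2][1], C[2][2])        # roll extracted from C (ZYX convention)
column_norm = math.sqrt(C[0][1] ** 2 + C[1][1] ** 2 + C[2][1] ** 2)
print(f"roll error after {steps * dt:.0f} s: {math.degrees(roll_error):.1f} deg")
print(f"norm of a column of C (should be 1): {column_norm:.6f}")
```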

Basic principle. To compensate for orientation drift, add to the angular velocity measured by the gyroscopes an additional control angular velocity built from the vector measurements of other sensors. The control angular velocity vector must tend to align the directions of the measured vectors with their known true directions.

This approach is completely different from constructing the correction term of a Kalman filter. The main difference is that the control angular velocity enters not as an additive term but as a factor multiplying the estimated quantity (matrix or quaternion). This brings important advantages:

- The estimating filter can be built for the orientation itself, and not for small deviations of the orientation from the one given by the gyroscopes. In this case, the estimated quantities will automatically satisfy all the requirements imposed by the problem: the matrix will be orthogonal, the quaternion will be normalized.

- The physical meaning of the control angular velocity is much clearer than the correction term in the Kalman filter. All manipulations are done with vectors and matrices in ordinary three-dimensional physical space, and not in abstract multi-dimensional state space. This significantly simplifies the refinement and configuration of the filter, and as a bonus, it allows you to get rid of high-dimensional matrices and heavy matrix libraries.

Now let's see how this idea is implemented in specific filter options.

Mahony filter. All the mind-boggling mathematics of Mahony's original paper serves to justify the simple equations (32). Let us rewrite them in our notation. If we ignore the estimation of gyroscope zero offsets, two key equations remain. The first is the kinematic equation for the rotation matrix, with the control angular velocity in matrix form $\Omega_c$. The second is the law that generates this angular velocity in vector form $\boldsymbol{\omega}_c$. For simplicity, assume there are no accelerations or magnetic disturbances. The accelerometers then measure the gravity vector $\mathbf{g}$, and the magnetometers measure the Earth's magnetic field vector $\mathbf{m}$. The sensors measure both vectors in the body coordinate system ($\mathbf{g}^b$, $\mathbf{m}^b$). Their directions in the geographic system are known: $\mathbf{g}^n$ points upward (this is how accelerometers sense gravity), and $\mathbf{m}^n$ points towards magnetic north. The Mahony filter equations then look like this:

$$\dot C = C\,(\Omega + \Omega_c),$$

$$\boldsymbol{\omega}_c = k_g\,\mathbf{g}^b \times \big(C^{\mathsf T}\mathbf{g}^n\big) + k_m\,\mathbf{m}^b \times \big(C^{\mathsf T}\mathbf{m}^n\big).$$

Let's look closely at the second equation. The first term on the right-hand side is a cross product. Its first factor is the measured gravity vector, and its second factor is the true one. Both factors must be in the same coordinate system, so the second is converted to the body system by multiplying by $C^{\mathsf T}$. An angular velocity built as a cross product is perpendicular to the plane of the two factor vectors. It rotates the estimated body coordinate system until the factor vectors coincide in direction. At that point the cross product vanishes and the rotation stops. The coefficient $k_g$ sets the stiffness of this feedback, and the second term does the same with the magnetic vector.

In essence, the Mahony filter embodies a well-known thesis: two non-collinear vectors known in two different coordinate systems uniquely determine the mutual orientation of these systems. More than two vectors provide useful measurement redundancy. With only one vector, one rotational degree of freedom (rotation about this vector) cannot be fixed. For example, if only $\mathbf{g}$ is available, roll and pitch drift can be corrected, but heading drift cannot.
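Below is a minimal discrete-time sketch of these two equations. It is illustrative and is not Mahony's reference implementation. Assumptions:

- the body is at rest, level and facing north, so the true $C$ is the identity;
- the gyroscopes are ideal;
- the reference vectors are unit vectors in NED axes, with an assumed 60° magnetic dip;
- the gains $k_g = k_m = 1\ \text{s}^{-1}$ are arbitrary;
- the matrix is propagated with the exact exponential of the rotation increment (Rodrigues' formula) rather than a plain Euler step, which keeps $C$ orthogonal.

The estimate starts off by about 29° in roll and 20° in heading. Its error should shrink to a small fraction of a degree within a minute.

```python
import math

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def transpose(A):
    return [list(row) for row in zip(*A)]

def matvec(A, v):
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]

def skew(w):
    """Skew-symmetric matrix [w]x with [w]x v = w x v."""
    return [[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]]

def expm_so3(phi):
    """exp([phi]x) by Rodrigues' formula: an exactly orthogonal rotation increment."""
    th = math.sqrt(sum(p * p for p in phi))
    K = skew(phi)
    K2 = matmul(K, K)
    a = math.sin(th) / th if th > 1e-12 else 1.0
    b = (1.0 - math.cos(th)) / th ** 2 if th > 1e-12 else 0.5
    return [[(1.0 if i == j else 0.0) + a * K[i][j] + b * K2[i][j] for j in range(3)]
            for i in range(3)]

def rot_x(t):
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_z(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rotation_angle(C):
    """Angle of the rotation C; here it is the estimation error, since the true C = I."""
    c = (C[0][0] + C[1][1] + C[2][2] - 1.0) / 2.0
    return math.acos(max(-1.0, min(1.0, c)))

g_n = [0.0, 0.0, -1.0]                      # "up" in NED, as accelerometers sense gravity
m_n = [0.5, 0.0, math.sqrt(3.0) / 2.0]      # assumed unit field: north, 60 deg dip
g_b, m_b = g_n[:], m_n[:]                   # body at rest, level, facing north
gyro = [0.0, 0.0, 0.0]                      # ideal gyroscopes at rest
k_g, k_m, dt = 1.0, 1.0, 0.01               # assumed gains (1/s) and time step (s)

C = matmul(rot_z(0.35), rot_x(0.5))         # initial estimate: ~20 deg heading, ~29 deg roll error
print(f"initial error: {math.degrees(rotation_angle(C)):.2f} deg")
for _ in range(6000):                       # 60 s
    Ct = transpose(C)
    wc = [k_g * x + k_m * y
          for x, y in zip(cross(g_b, matvec(Ct, g_n)), cross(m_b, matvec(Ct, m_n)))]
    C = matmul(C, expm_so3([(w + c) * dt for w, c in zip(gyro, wc)]))   # C' = C (Omega + Omega_c)
print(f"error after 60 s: {math.degrees(rotation_angle(C)):.4f} deg")
```

Convergence is slowest for rotations about an axis lying between $\mathbf{g}$ and $\mathbf{m}$. Both vectors are nearly parallel to such an axis, so they "see" that rotation only weakly.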

Of course, the Mahony filter does not require a rotation matrix; there are also non-canonical quaternion variants.

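Virtual gyroplatform. In the Mahony filter, we applied the control angular velocity to the body coordinate system. It can also be applied to the computed position of the geographic coordinate system. The kinematic equation then takes the form

$$\dot C = C\,\Omega - \Omega_p\,C,$$

where $\Omega_p$ is built from the control angular velocity $\boldsymbol{\omega}_p$ in geographic axes, in the same way as $\Omega$ is built from $\boldsymbol{\omega}$.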

It turns out that this approach opens the way to very fruitful physical analogies. It is enough to remember where gyroscopic technology began - heading and inertial navigation systems based on a gyro-stabilized platform in a gimbal suspension.

www.theairlinepilots.com

There, the platform's task was to physically embody the geographic coordinate system. Angle sensors on the gimbal frames measured the orientation of the carrier relative to this platform. If the gyroscopes drifted, the platform drifted with them, and errors accumulated in the readings of the angle sensors. To eliminate these errors, feedback from accelerometers mounted on the platform was introduced. For example, a tilt of the platform about the north axis was sensed by the east-axis accelerometer. This signal set a control angular velocity that returned the platform to the horizontal.

We can use the same intuitive concepts in our problem. The kinematic equation above should then be read as follows. The rate of change of orientation is the difference between two rotational motions: the absolute motion of the carrier (the first term) and the absolute motion of the virtual gyroplatform (the second term). The analogy extends to the law that generates the control angular velocity. The vector $\mathbf{a}^n = C\,\mathbf{a}^b$ represents the readings of accelerometers supposedly mounted on the gyroplatform. From physical considerations we can then write (in NED axes):

$$\omega_{pN} = k\,a^n_E, \qquad \omega_{pE} = -k\,a^n_N.$$

One could arrive at exactly the same result formally, with a cross product in the spirit of the Mahony filter, but taken in the geographic rather than the body coordinate system. Is that really necessary?
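The platform form of the kinematic equation and the horizontal correction law can be sketched in the same spirit. This is a hedged illustration under assumptions not stated in the text:

- NED axes and a non-rotating, flat Earth;
- a carrier at rest and level, with ideal gyroscopes;
- an arbitrary gain $k$;
- only the two horizontal control rates, so the heading channel is left uncorrected.

In discrete time, $\dot C = C\Omega - \Omega_p C$ becomes a right multiplication by the carrier's rotation increment and a left multiplication by the inverse of the platform's increment. The tilt should die out, while the initial 0.2 rad heading error stays almost untouched. This shows how the horizontal and heading channels separate.

```python
import math

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def matvec(A, v):
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]

def skew(w):
    return [[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]]

def expm_so3(phi):
    """exp([phi]x) by Rodrigues' formula."""
    th = math.sqrt(sum(p * p for p in phi))
    K = skew(phi)
    K2 = matmul(K, K)
    a = math.sin(th) / th if th > 1e-12 else 1.0
    b = (1.0 - math.cos(th)) / th ** 2 if th > 1e-12 else 0.5
    return [[(1.0 if i == j else 0.0) + a * K[i][j] + b * K2[i][j] for j in range(3)]
            for i in range(3)]

def rot_x(t):
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_y(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def rot_z(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

G = 9.81
a_b = [0.0, 0.0, -G]            # apparent acceleration of a level body at rest: "up" in NED
gyro = [0.0, 0.0, 0.0]          # ideal gyroscopes, carrier at rest
k, dt = 0.1, 0.01               # assumed gain (rad/s per m/s^2) and time step (s)

# C maps body axes to virtual-platform axes; start with tilt errors and a 0.2 rad heading error
C = matmul(rot_z(0.2), matmul(rot_y(0.05), rot_x(0.1)))
for _ in range(2000):           # 20 s
    a_n = matvec(C, a_b)                               # readings of the "platform accelerometers"
    w_p = [k * a_n[1], -k * a_n[0], 0.0]               # omega_pN, omega_pE; heading left alone
    carrier_step = expm_so3([w * dt for w in gyro])
    platform_step = expm_so3([-w * dt for w in w_p])   # inverse of the platform's rotation
    C = matmul(platform_step, matmul(C, carrier_step)) # C' = C Omega - Omega_p C

a_n = matvec(C, a_b)
tilt = math.hypot(a_n[0], a_n[1]) / G
heading = math.atan2(C[1][0], C[0][0])
print(f"residual tilt: {tilt:.1e} rad, heading error: {heading:.4f} rad")
```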

The first hint of a useful analogy between platform and strapdown inertial navigation seems to have appeared in an old Boeing patent. Salychev later developed the idea actively, and more recently so have I. The obvious advantages of this approach:

- The control angular velocity can be formed on the basis of understandable physical principles.

- The horizontal and heading channels, which differ greatly in their properties and correction methods, are naturally separated. In the Mahony filter they are mixed.

- It is convenient to compensate for the effect of accelerations using GNSS data, which are provided in geographic rather than body axes.

- The algorithm is easy to generalize to high-precision inertial navigation, where the shape and rotation of the Earth must be taken into account. I have no idea how to do this in Mahony's scheme.

Madgwick filter. Madgwick chose the hard path. Mahony apparently arrived at his solution intuitively and justified it mathematically afterwards. Madgwick, by contrast, was a formalist from the very start. He posed an optimization problem and reasoned as follows. Let the orientation be given by the rotation quaternion $q$. Ideally, the computed direction of some measured vector (say, $\mathbf{v}$) coincides with the true one, so that $\tilde q \circ \mathbf{v}^n \circ q = \mathbf{v}^b$. In reality this is not always achievable, especially with more than two vectors, but one can try to minimize the deviation from exact equality. To do this, we introduce the minimization criterion

$$F(q) = \tfrac{1}{2}\,\lVert \mathbf{f}(q) \rVert^2, \qquad \mathbf{f}(q) = \tilde q \circ \mathbf{v}^n \circ q - \mathbf{v}^b.$$

The minimization uses gradient descent: moving in small steps opposite to the gradient $\nabla F$, i.e., opposite to the direction of fastest increase of the function. Incidentally, Madgwick makes a slip here. In all his works he never introduces $F$ at all and persistently writes $\nabla \mathbf{f}$ instead of $\nabla F$, although what he actually computes is exactly $\nabla F$.

Gradient descent ultimately leads to the following recipe. To compensate for orientation drift, add to the quaternion rate from the kinematic equation a new negative term proportional to the normalized gradient:

$$\dot q = \tfrac{1}{2}\,q \circ \boldsymbol{\omega} - \beta\,\frac{\nabla F}{\lVert \nabla F \rVert}.$$

Here Madgwick deviates a little from our "basic principle": he adds the correction term not to the angular velocity but to the rate of change of the quaternion, which is not quite the same thing. As a result, the updated quaternion may no longer have unit norm and may lose the ability to represent orientation. Artificial normalization of the quaternion is therefore vital for the Madgwick filter, whereas for the other filters it is desirable but optional.
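The gradient-descent correction can be sketched as follows. This is not Madgwick's published code: his implementation uses closed-form Jacobians, whereas this sketch takes the gradient of $F(q)$ by central finite differences for brevity (a swapped-in technique). Assumptions:

- quaternions are `(w, x, y, z)` tuples, with the convention $\mathbf{v}^n = q \circ \mathbf{v}^b \circ \tilde q$;
- there is a single reference vector, the normalized accelerometer "up" direction in NED;
- the body is at rest and level, and the gyroscopes are ideal;
- $\beta$ is arbitrary.

Note the explicit normalization after every step.

```python
import math

def qmul(p, q):
    """Quaternion product p o q; quaternions are (w, x, y, z) tuples."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw * qw - px * qx - py * qy - pz * qz,
            pw * qx + px * qw + py * qz - pz * qy,
            pw * qy - px * qz + py * qw + pz * qx,
            pw * qz + px * qy - py * qx + pz * qw)

def qconj(q):
    return (q[0], -q[1], -q[2], -q[3])

def normalize(q):
    n = math.sqrt(sum(c * c for c in q))
    return tuple(c / n for c in q)

def to_body(q, v_n):
    """Predicted body-frame vector q~ o v_n o q."""
    return qmul(qmul(qconj(q), (0.0, *v_n)), q)[1:]

def criterion(q, v_n, v_b):
    """F(q) = 1/2 |q~ o v_n o q - v_b|^2."""
    return 0.5 * sum((p - m) ** 2 for p, m in zip(to_body(q, v_n), v_b))

def numerical_gradient(F, q, h=1e-6):
    """Central-difference gradient of F with respect to the 4 quaternion components."""
    g = []
    for i in range(4):
        qp = list(q)
        qm = list(q)
        qp[i] += h
        qm[i] -= h
        g.append((F(tuple(qp)) - F(tuple(qm))) / (2.0 * h))
    return g

v_n = (0.0, 0.0, -1.0)        # assumed reference: normalized "up" as sensed by accelerometers
v_b = (0.0, 0.0, -1.0)        # normalized accelerometer reading of a level body at rest
omega = (0.0, 0.0, 0.0)       # ideal gyroscopes, body at rest
beta, dt = 0.1, 0.01          # assumed gain and time step (s)

q = (math.cos(0.25), math.sin(0.25), 0.0, 0.0)    # initial estimate with a 0.5 rad roll error
for _ in range(1000):                             # 10 s
    q_dot = [0.5 * c for c in qmul(q, (0.0, *omega))]             # kinematic term
    g = numerical_gradient(lambda x: criterion(x, v_n, v_b), q)
    norm_g = math.sqrt(sum(c * c for c in g))
    if norm_g > 1e-12:
        q_dot = [d - beta * c / norm_g for d, c in zip(q_dot, g)]  # - beta grad F / |grad F|
    q = normalize(tuple(c + d * dt for c, d in zip(q, q_dot)))     # the vital normalization

cos_tilt = sum(p * m for p, m in zip(to_body(q, v_n), v_b))
tilt = math.acos(max(-1.0, min(1.0, cos_tilt)))
print(f"residual tilt: {tilt:.4f} rad")
```

The normalized gradient makes every correction step the same size, $\beta\,dt$. The estimate therefore approaches the vertical at a constant rate and then jitters around it with an amplitude set by that step.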

Impact of accelerations

Until now, we assumed there were no true accelerations, so the accelerometers measured only gravity. This gave a vertical reference for compensating roll and pitch drift. In general, however, accelerometers measure apparent acceleration, whatever their operating principle. Apparent acceleration is the vector difference between the true acceleration and the acceleration of gravity (free fall). Its direction does not coincide with the vertical, so acceleration-induced errors appear in the roll and pitch estimates.

The virtual gyroplatform analogy illustrates this easily. Its correction system brings the platform to rest where the signals of the accelerometers supposedly mounted on it vanish, i.e., where the measured vector becomes perpendicular to the accelerometers' sensitive axes. Without accelerations, this position coincides with the horizontal. When horizontal accelerations occur, the gyroplatform deflects. The gyroplatform thus behaves like a heavily damped pendulum or plumb line.

In the comments to the post about the Madgwick filter, someone asked whether this filter could be expected to be less sensitive to accelerations than, for example, the Mahony filter. Unfortunately, all the filters described here exploit the same physical principles and therefore suffer from the same problems. You cannot fool physics with mathematics. What can be done then?

The simplest and crudest method was invented back in the middle of the last century for aviation gyro verticals. It reduces the control angular velocity, or zeroes it completely, when accelerations or a heading rate (indicating entry into a turn) are present. The same method carries over to modern strapdown systems. Here, accelerations must be judged by the horizontal accelerometer signals in the gyroplatform axes, not by the body-axis signals, which themselves vanish in a turn. By magnitude alone, however, these signals cannot always distinguish true accelerations from projections of gravity. Such projections arise from the very tilt of the gyroplatform that needs to be eliminated. The method is therefore not fully reliable, but it requires no additional sensors.

A more accurate method uses external velocity measurements from a GNSS receiver. If the velocity $\mathbf{V}$ is known, numerical differentiation gives the true acceleration $\dot{\mathbf{V}}$. The difference $\mathbf{a}^n - \dot{\mathbf{V}}$ then equals exactly $-\mathbf{g}$, regardless of the motion of the carrier, and can serve as the vertical reference. For example, the control angular velocities of the gyroplatform can be set as

$$\omega_{pN} = k\,\big(a^n_E - \dot V_E\big), \qquad \omega_{pE} = -k\,\big(a^n_N - \dot V_N\big).$$
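Here is a small sketch of this GNSS-aided reference. It uses the same assumed NED sign convention as the horizontal control law of the virtual gyroplatform and ignores the Earth's rotation. The scenario is hypothetical: a correctly leveled platform on a carrier accelerating north at 2 m/s², with GNSS velocity samples 0.1 s either side of the current instant. Fed the raw accelerometer readings, the control law commands a spurious correction. With the GNSS-derived acceleration subtracted, the command vanishes.

```python
G = 9.81

def accel_from_gnss(v_prev, v_next, dt):
    """Central-difference estimate of the true acceleration from GNSS velocities at t - dt and t + dt."""
    return [(b - a) / (2.0 * dt) for a, b in zip(v_prev, v_next)]

def horizontal_rates(a_n, accel_true, k):
    """(omega_pN, omega_pE) driven by the vertical reference a_n - dV/dt (NED axes)."""
    ref = [a - w for a, w in zip(a_n, accel_true)]
    return k * ref[1], -k * ref[0]

k, dt = 0.1, 0.1                                     # assumed gain and sampling interval (s)
a_n = [2.0, 0.0, -G]                                 # level platform, accelerating north at 2 m/s^2
v_prev, v_next = [10.0, 0.0, 0.0], [10.4, 0.0, 0.0]  # GNSS velocity at t - dt and t + dt (m/s)

raw = horizontal_rates(a_n, [0.0, 0.0, 0.0], k)
aided = horizontal_rates(a_n, accel_from_gnss(v_prev, v_next, dt), k)
print("without GNSS:", raw)
print("with GNSS:   ", aided)
```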

Sensor zero offsets

A sad feature of consumer-grade gyroscopes and accelerometers is the large instability of their zero offsets over time and temperature. Factory or laboratory calibration alone cannot eliminate them; the offsets must also be estimated during operation.

Gyroscopes. Let's deal with the zero offsets of the gyroscopes first. The computed body coordinate system departs from its true position with an angular velocity determined by two opposing factors: the gyroscope zero offset $\Delta\boldsymbol{\omega}$ and the control angular velocity $\boldsymbol{\omega}_c$. That drift rate is $\Delta\boldsymbol{\omega} + \boldsymbol{\omega}_c$. If the correction system (for example, in the Mahony filter) has managed to stop the drift, then in the steady state $\boldsymbol{\omega}_c = -\Delta\boldsymbol{\omega}$. In other words, the control angular velocity contains information about the unknown disturbance. Compensatory estimation can therefore be applied: we do not know the disturbance directly, but we know what corrective action balances it. This is the basis for estimating gyroscope zero offsets. For example, Mahony's estimate $\hat{\mathbf{b}}$ is updated according to the law

$$\dot{\hat{\mathbf{b}}} = -k_I\,\boldsymbol{\omega}_c,$$

with the gyroscope readings corrected as $\boldsymbol{\omega} - \hat{\mathbf{b}}$ in the kinematic equation.

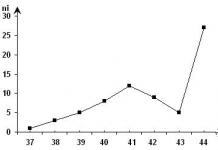

However, his results are strange: the estimates reach 0.04 rad/s. Such zero-offset instability does not occur even with the worst gyroscopes. I suspect the reason is that Mahony does not use GNSS or other external sensors and so suffers the full effect of accelerations. Only on the vertical axis, where accelerations do no harm, does the estimate look more or less reasonable:

Mahony et al., 2008
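The compensatory estimation law can be added to a Mahony-type loop in a few lines. This is again a hedged illustration under assumed conditions:

- the body is at rest, level and facing north, with no accelerations or magnetic disturbances;
- the gyroscopes have constant zero offsets of a few tenths of a degree per second (assumed values);
- the gains are arbitrary;
- the gyroscope readings are corrected by the current estimate $\hat{\mathbf{b}}$ before integration.

The estimate should settle on the true offsets while the control angular velocity returns to zero.

```python
import math

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def transpose(A):
    return [list(row) for row in zip(*A)]

def matvec(A, v):
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]

def skew(w):
    return [[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]]

def expm_so3(phi):
    """exp([phi]x) by Rodrigues' formula."""
    th = math.sqrt(sum(p * p for p in phi))
    K = skew(phi)
    K2 = matmul(K, K)
    a = math.sin(th) / th if th > 1e-12 else 1.0
    b = (1.0 - math.cos(th)) / th ** 2 if th > 1e-12 else 0.5
    return [[(1.0 if i == j else 0.0) + a * K[i][j] + b * K2[i][j] for j in range(3)]
            for i in range(3)]

g_n = [0.0, 0.0, -1.0]                    # "up" in NED, as accelerometers sense gravity (unit)
m_n = [0.5, 0.0, math.sqrt(3.0) / 2.0]    # assumed unit magnetic field: north, 60 deg dip
g_b, m_b = g_n[:], m_n[:]                 # body at rest, level, facing north
true_bias = [0.005, -0.003, 0.002]        # assumed gyro zero offsets, rad/s
k_p, k_i, dt = 5.0, 0.2, 0.01             # assumed gains (1/s) and time step (s)

C = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
b_hat = [0.0, 0.0, 0.0]
for _ in range(9000):                     # 90 s
    Ct = transpose(C)
    wc = [k_p * (x + y)
          for x, y in zip(cross(g_b, matvec(Ct, g_n)), cross(m_b, matvec(Ct, m_n)))]
    gyro = true_bias                      # at rest the gyroscopes output only their offsets
    C = matmul(C, expm_so3([(w - bh + c) * dt for w, bh, c in zip(gyro, b_hat, wc)]))
    b_hat = [bh - k_i * c * dt for bh, c in zip(b_hat, wc)]   # d(b_hat)/dt = -k_I omega_c

print("estimated offsets:", [round(bh, 6) for bh in b_hat])
print("true offsets:     ", true_bias)
```

Without the integral term, the loop would still stop the drift, but only by holding $\boldsymbol{\omega}_c \approx -\Delta\boldsymbol{\omega}$, i.e., at the cost of a constant attitude error. The estimator turns that balancing signal into an offset estimate.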

Accelerometers. Estimating accelerometer zero offsets is much more difficult. Information about them must be extracted from the same control angular velocity. In straight-line motion, however, the effect of accelerometer zero offsets is indistinguishable from a tilt of the carrier or a misalignment of the sensor unit on it. In that case, nothing in the control angular velocity singles out the accelerometer offsets. A distinguishing signal appears only during turns, which makes it possible to separate and independently estimate the errors of the gyroscopes and the accelerometers. My article gives an example of how this can be done. Here are the figures from it:

Instead of a conclusion: what about the Kalman filter?

I have no doubt that the filters described here will almost always beat the traditional Kalman filter in speed, code compactness and ease of tuning; that is what they were created for. Estimation accuracy is less clear-cut. I have come across poorly designed Kalman filters that were noticeably less accurate than a filter with a virtual gyroplatform. Madgwick also demonstrated the advantages of his filter over some Kalman estimators. However, the same orientation estimation problem admits at least a dozen different Kalman filter schemes, each with endless tuning options. I have no reason to think that the Mahony or Madgwick filter will be more accurate than the best possible Kalman filters. And of course, the Kalman approach will always have the advantage of universality: it imposes no strict restrictions on the specific dynamic properties of the system being estimated.

Definition. Vectors $\mathbf{a}$ and $\mathbf{b}$ are called orthogonal (perpendicular) to each other if their scalar product is equal to zero, i.e., $(\mathbf{a}, \mathbf{b}) = 0$.

For nonzero vectors $\mathbf{a}$ and $\mathbf{b}$, a zero scalar product means that $\cos\varphi = 0$, where $\varphi$ is the angle between them, i.e., $\varphi = \pi/2$. The zero vector is orthogonal to any vector, because $(\mathbf{a}, \mathbf{0}) = 0$.

Exercise. Let $\mathbf{a}$ and $\mathbf{b}$ be orthogonal vectors. It is then natural to regard $\mathbf{a} + \mathbf{b}$ as the diagonal of a rectangle with sides $\mathbf{a}$ and $\mathbf{b}$. Prove that

$$|\mathbf{a} + \mathbf{b}|^2 = |\mathbf{a}|^2 + |\mathbf{b}|^2,$$

i.e., the square of the length of the diagonal of a rectangle equals the sum of the squares of the lengths of its two non-parallel sides (Pythagorean theorem).

Definition. A system of vectors $\mathbf{a}_1, \ldots, \mathbf{a}_m$ is called orthogonal if any two vectors of this system are orthogonal.

Thus, for an orthogonal system of vectors $\mathbf{a}_1, \ldots, \mathbf{a}_m$ we have $(\mathbf{a}_i, \mathbf{a}_j) = 0$ for $i \neq j$, $i, j = 1, \ldots, m$.

Theorem 1.5. An orthogonal system consisting of nonzero vectors is linearly independent.

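□ We prove this by contradiction. Suppose that the orthogonal system of nonzero vectors $\mathbf{a}_1, \ldots, \mathbf{a}_m$ is linearly dependent. Then

$$\lambda_1\mathbf{a}_1 + \cdots + \lambda_m\mathbf{a}_m = \mathbf{0}, \quad \text{where not all } \lambda_i \text{ are zero}. \tag{1.15}$$

Let, for example, $\lambda_1 \neq 0$. Take the scalar product of both sides of equality (1.15) with $\mathbf{a}_1$:

$$\lambda_1(\mathbf{a}_1, \mathbf{a}_1) + \cdots + \lambda_m(\mathbf{a}_m, \mathbf{a}_1) = 0.$$

All terms except the first vanish because the system $\mathbf{a}_1, \ldots, \mathbf{a}_m$ is orthogonal. Then $\lambda_1(\mathbf{a}_1, \mathbf{a}_1) = 0$, and since $\lambda_1 \neq 0$, we get $(\mathbf{a}_1, \mathbf{a}_1) = 0$. Hence $\mathbf{a}_1 = \mathbf{0}$, which contradicts the hypothesis. Our assumption was wrong, so the orthogonal system of nonzero vectors is linearly independent. ■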

The following theorem holds.

Theorem 1.6. The space $\mathbb{R}^n$ always has a basis consisting of orthogonal vectors (an orthogonal basis)

(no proof).

Orthogonal bases are convenient primarily because the expansion coefficients of an arbitrary vector over such bases are simply determined.

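Suppose we need to find the expansion of an arbitrary vector $\mathbf{b}$ in an orthogonal basis $\mathbf{e}_1, \ldots, \mathbf{e}_n$. Let us write the expansion of this vector in this basis with as yet unknown coefficients $x_1, \ldots, x_n$:

$$\mathbf{b} = x_1\mathbf{e}_1 + x_2\mathbf{e}_2 + \cdots + x_n\mathbf{e}_n. \tag{1.16}$$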

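Let us take the scalar product of both sides of this equality with the vector $\mathbf{e}_1$. By axioms 2° and 3° of the scalar product, we obtain

$$(\mathbf{b}, \mathbf{e}_1) = x_1(\mathbf{e}_1, \mathbf{e}_1) + x_2(\mathbf{e}_2, \mathbf{e}_1) + \cdots + x_n(\mathbf{e}_n, \mathbf{e}_1).$$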

Since the basis vectors $\mathbf{e}_1, \ldots, \mathbf{e}_n$ are mutually orthogonal, all the scalar products of basis vectors except the first are zero. The coefficient $x_1$ is therefore given by

$$x_1 = \frac{(\mathbf{b}, \mathbf{e}_1)}{(\mathbf{e}_1, \mathbf{e}_1)}.$$

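Taking the scalar product of equality (1.16) with each of the other basis vectors in turn, we obtain simple formulas for the expansion coefficients of the vector $\mathbf{b}$:

$$x_k = \frac{(\mathbf{b}, \mathbf{e}_k)}{(\mathbf{e}_k, \mathbf{e}_k)}, \qquad k = 1, \ldots, n. \tag{1.17}$$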

Formulas (1.17) make sense because $(\mathbf{e}_k, \mathbf{e}_k) \neq 0$: basis vectors are nonzero.
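Formulas (1.17) are easy to try on a concrete example. In the sketch below, the orthogonal (but not normalized) basis of $\mathbb{R}^3$ and the vector $\mathbf{b}$ are arbitrary illustrative choices. The standard scalar product is assumed, and exact fractions avoid rounding.

```python
from fractions import Fraction

def dot(u, v):
    """Standard scalar product in R^n."""
    return sum(x * y for x, y in zip(u, v))

def expansion_coefficients(b, basis):
    """x_k = (b, e_k) / (e_k, e_k) for an orthogonal basis e_1, ..., e_n (formulas (1.17))."""
    for i, e in enumerate(basis):
        for f in basis[i + 1:]:
            if dot(e, f) != 0:
                raise ValueError("basis vectors must be mutually orthogonal")
    return [Fraction(dot(b, e), dot(e, e)) for e in basis]

basis = [(1, 1, 0), (1, -1, 0), (0, 0, 2)]
b = (3, 1, 4)
x = expansion_coefficients(b, basis)
reconstructed = tuple(sum(xk * e[i] for xk, e in zip(x, basis)) for i in range(3))
print([str(c) for c in x], reconstructed == b)
```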

Definition. A vector $\mathbf{a}$ is called normalized (or unit) if its length is equal to 1, i.e., $(\mathbf{a}, \mathbf{a}) = 1$.

Any nonzero vector can be normalized. Let $\mathbf{a} \neq \mathbf{0}$. Then $|\mathbf{a}| = \sqrt{(\mathbf{a}, \mathbf{a})} > 0$, and the vector $\mathbf{a}/|\mathbf{a}|$ is normalized.

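Definition. A system of vectors $\mathbf{e}_1, \ldots, \mathbf{e}_n$ is called orthonormal if it is orthogonal and each vector of the system has length 1, i.e.,

$$(\mathbf{e}_i, \mathbf{e}_j) = \begin{cases} 1, & i = j, \\ 0, & i \neq j. \end{cases} \tag{1.18}$$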

The space $\mathbb{R}^n$ always has an orthogonal basis, and the vectors of this basis can be normalized. Therefore $\mathbb{R}^n$ always has an orthonormal basis.

An example of an orthonormal basis of the space $\mathbb{R}^n$ is the system of vectors $\mathbf{e}_1 = (1, 0, \ldots, 0), \ldots, \mathbf{e}_n = (0, 0, \ldots, 1)$ with the scalar product defined by equality (1.9). In an orthonormal basis, formulas (1.17) for the expansion coefficients of a vector $\mathbf{b}$ take the simplest form:

$$x_k = (\mathbf{b}, \mathbf{e}_k), \qquad k = 1, \ldots, n.$$

Let $\mathbf{a}$ and $\mathbf{b}$ be two arbitrary vectors of the space $\mathbb{R}^n$ with an orthonormal basis $\mathbf{e}_1, \ldots, \mathbf{e}_n$. Denote their coordinates in this basis by $a_1, \ldots, a_n$ and $b_1, \ldots, b_n$, respectively. Let us express the scalar product of these vectors through these coordinates, i.e., suppose that

$$\mathbf{a} = a_1\mathbf{e}_1 + \cdots + a_n\mathbf{e}_n, \qquad \mathbf{b} = b_1\mathbf{e}_1 + \cdots + b_n\mathbf{e}_n.$$

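From the last equalities, by the scalar product axioms and relations (1.18), we obtain

$$(\mathbf{a}, \mathbf{b}) = \sum_{i=1}^{n}\sum_{j=1}^{n} a_i b_j (\mathbf{e}_i, \mathbf{e}_j) = \sum_{i=1}^{n} a_i b_i (\mathbf{e}_i, \mathbf{e}_i).$$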

Finally, we have

$$(\mathbf{a}, \mathbf{b}) = a_1 b_1 + a_2 b_2 + \cdots + a_n b_n. \tag{1.19}$$

Thus, in an orthonormal basis, the scalar product of any two vectors is equal to the sum of the products of the corresponding coordinates of these vectors.

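Let us now consider a completely arbitrary (generally speaking, not orthonormal) basis $\mathbf{f}_1, \ldots, \mathbf{f}_n$ in the $n$-dimensional Euclidean space $\mathbb{R}^n$. We express the scalar product of two arbitrary vectors $\mathbf{a}$ and $\mathbf{b}$ through their coordinates in this basis. If $\mathbf{a} = a_1\mathbf{f}_1 + \cdots + a_n\mathbf{f}_n$ and $\mathbf{b} = b_1\mathbf{f}_1 + \cdots + b_n\mathbf{f}_n$, then by the scalar product axioms

$$(\mathbf{a}, \mathbf{b}) = \sum_{i=1}^{n}\sum_{j=1}^{n} (\mathbf{f}_i, \mathbf{f}_j)\, a_i b_j. \tag{1.20}$$

Thus, consider the requirement that the scalar product of any two vectors of the Euclidean space $\mathbb{R}^n$ equal the sum of the products of their corresponding coordinates in the basis $\mathbf{f}_1, \ldots, \mathbf{f}_n$. This holds if and only if the basis is orthonormal.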

Indeed, expression (1.20) turns into (1.19) for all a and b if and only if $(f_i, f_j) = 0$ for $i \neq j$ and $(f_i, f_i) = 1$, i.e., if and only if the basis $f_1, \dots, f_n$ is orthonormal.

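1) An orthogonal system of vectors is a set $\{x_\alpha\}$ of nonzero vectors of a Euclidean (Hilbert) space with scalar product $(\cdot, \cdot)$ such that $(x_\alpha, x_\beta) = 0$ for $\alpha \neq \beta$. If the norm of each vector equals one, the system $\{x_\alpha\}$ is called orthonormal. A complete orthogonal (orthonormal) system $\{x_\alpha\}$ is called an orthogonal (orthonormal) basis. M. I. Voitsekhovsky.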

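2) An orthogonal coordinate system is a coordinate system in which the coordinate lines (or surfaces) intersect at right angles. Orthogonal coordinate systems exist in any Euclidean space but, generally speaking, do not exist in an arbitrary space. In a two-dimensional smooth affine space an orthogonal system can always be introduced, at least in a sufficiently small neighborhood of each point; sometimes it can be introduced in the large. In an orthogonal coordinate system the metric tensor $g_{ij}$ is diagonal; the square roots $H_i = \sqrt{g_{ii}}$ of its diagonal components are called the Lamé coefficients. The Lamé coefficients of an orthogonal system $q_1, q_2, q_3$ in space are given by

$$H_i = \sqrt{\left(\frac{\partial x}{\partial q_i}\right)^2 + \left(\frac{\partial y}{\partial q_i}\right)^2 + \left(\frac{\partial z}{\partial q_i}\right)^2}, \qquad i = 1, 2, 3,$$

where x, y and z are Cartesian rectangular coordinates. The element of length is expressed through the Lamé coefficients as

$$ds^2 = H_1^2\,dq_1^2 + H_2^2\,dq_2^2 + H_3^2\,dq_3^2,$$

the surface area element on the coordinate surface $q_1 = \text{const}$ (and cyclically for the other two families) as

$$d\sigma_1 = H_2 H_3\,dq_2\,dq_3,$$

the volume element as

$$dV = H_1 H_2 H_3\,dq_1\,dq_2\,dq_3,$$

and the vector differential operations as

$$\operatorname{grad} f = \sum_{i=1}^{3} \frac{1}{H_i}\frac{\partial f}{\partial q_i}\,\mathbf{e}_i,$$

$$\operatorname{div} \mathbf{A} = \frac{1}{H_1 H_2 H_3}\left[\frac{\partial (H_2 H_3 A_1)}{\partial q_1} + \frac{\partial (H_3 H_1 A_2)}{\partial q_2} + \frac{\partial (H_1 H_2 A_3)}{\partial q_3}\right],$$

$$(\operatorname{rot} \mathbf{A})_1 = \frac{1}{H_2 H_3}\left[\frac{\partial (H_3 A_3)}{\partial q_2} - \frac{\partial (H_2 A_2)}{\partial q_3}\right] \quad \text{(the other components by cyclic permutation)},$$

$$\Delta f = \frac{1}{H_1 H_2 H_3}\left[\frac{\partial}{\partial q_1}\left(\frac{H_2 H_3}{H_1}\frac{\partial f}{\partial q_1}\right) + \frac{\partial}{\partial q_2}\left(\frac{H_3 H_1}{H_2}\frac{\partial f}{\partial q_2}\right) + \frac{\partial}{\partial q_3}\left(\frac{H_1 H_2}{H_3}\frac{\partial f}{\partial q_3}\right)\right],$$

where $\mathbf{e}_i$ are the unit vectors tangent to the coordinate lines and $A_i$ are the components of $\mathbf{A}$ along them.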

The most frequently used orthogonal coordinate systems are: on the plane, Cartesian, polar, elliptic and parabolic; in space, spherical, cylindrical, paraboloidal, bicylindrical and bipolar. D. D. Sokolov.
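To make the Lamé-coefficient formula concrete, the sketch below approximates $H_i$ for spherical coordinates $x = r\sin\theta\cos\varphi$, $y = r\sin\theta\sin\varphi$, $z = r\cos\theta$ using central finite differences. It then compares the result with the known closed forms $H_r = 1$, $H_\theta = r$, $H_\varphi = r\sin\theta$. The step size `h` and the sample point are arbitrary choices for this illustration, not values from the text.

```python
import math

def spherical_to_cartesian(r, theta, phi):
    """Cartesian x, y, z from spherical coordinates (r, polar angle theta, azimuth phi)."""
    return (r * math.sin(theta) * math.cos(phi),
            r * math.sin(theta) * math.sin(phi),
            r * math.cos(theta))

def lame_coefficients(to_cartesian, q, h=1e-6):
    """Approximate H_i = sqrt((dx/dq_i)^2 + (dy/dq_i)^2 + (dz/dq_i)^2)
    at the point q using central finite differences."""
    coeffs = []
    for i in range(len(q)):
        q_plus = list(q)
        q_minus = list(q)
        q_plus[i] += h
        q_minus[i] -= h
        p, m = to_cartesian(*q_plus), to_cartesian(*q_minus)
        derivs = [(pc - mc) / (2 * h) for pc, mc in zip(p, m)]
        coeffs.append(math.sqrt(sum(d * d for d in derivs)))
    return coeffs

r, theta, phi = 2.0, 0.8, 1.3
H = lame_coefficients(spherical_to_cartesian, (r, theta, phi))
expected = [1.0, r, r * math.sin(theta)]   # H_r, H_theta, H_phi
print(H, expected)
```

The volume element $dV = H_1 H_2 H_3\,dq_1\,dq_2\,dq_3$ then becomes the familiar $r^2 \sin\theta\,dr\,d\theta\,d\varphi$.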

3) An orthogonal system of functions is a finite or countable system $\{\varphi_i(x)\}$ of functions that belong to the space $L_2(X, S, \mu)$ and satisfy the conditions

$$\int_X \varphi_i(x)\,\overline{\varphi_j(x)}\,d\mu = \begin{cases} 0, & i \neq j, \\ \lambda_i > 0, & i = j. \end{cases}$$

If $\lambda_i = 1$ for all i, the system is called orthonormal. The measure $\mu(x)$, defined on the σ-algebra S of subsets of the set X, is assumed to be countably additive and complete and to have a countable base. This definition of an orthogonal system covers all the orthogonal systems considered in modern analysis; they arise from various concrete realizations of the measure space $(X, S, \mu)$.

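Of greatest interest are the complete orthonormal systems $\{\varphi_n(x)\}$. Such a system has the property that for every function $f \in L_2(X, S, \mu)$ there is a unique series

$$f(x) = \sum_{n=1}^{\infty} c_n \varphi_n(x)$$

converging to f(x) in the metric of the space $L_2(X, S, \mu)$. Its coefficients $c_n$ are determined by the Fourier formulas

$$c_n = \int_X f(x)\,\overline{\varphi_n(x)}\,d\mu.$$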

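As an illustration of the Fourier formulas, the sketch below takes the special case $X = [-\pi, \pi]$ with Lebesgue measure and the normalized sine functions $\sin(kx)/\sqrt{\pi}$ of the trigonometric system. It computes the coefficients of $f(x) = x$ by a midpoint rule. Because the functions are real, no conjugation is needed. Both the choice of system and the choice of f are assumptions made for this example. For this f the exact values are $c_k = 2\sqrt{\pi}\,(-1)^{k+1}/k$. The squared $L_2$ distance from f to the span of the first k functions, $\|f\|^2 - \sum c_j^2$, decreases with k.

```python
import math

def integrate(g, a, b, n=20000):
    """Composite midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))

def f(x):
    return x  # odd function, so its cosine coefficients vanish

norm_sq = integrate(lambda x: f(x) ** 2, -math.pi, math.pi)  # ||f||^2 = 2*pi^3/3

coeffs, residuals = {}, []
for k in range(1, 6):
    def phi(x, k=k):
        return math.sin(k * x) / math.sqrt(math.pi)   # normalized sin(kx) on [-pi, pi]
    coeffs[k] = integrate(lambda x: f(x) * phi(x), -math.pi, math.pi)
    # Squared distance from f to span{phi_1, ..., phi_k}: ||f||^2 - sum of c_j^2.
    residuals.append(norm_sq - sum(c ** 2 for c in coeffs.values()))

exact = {k: 2 * math.sqrt(math.pi) * (-1) ** (k + 1) / k for k in range(1, 6)}
print(coeffs, residuals)
```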

Such systems exist because the space $L_2(X, S, \mu)$ is separable. The Schmidt orthogonalization method gives a universal way to construct complete orthonormal systems: it suffices to apply it to some system of linearly independent functions that is complete in $L_2(X, S, \mu)$.
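A small instance of Schmidt orthogonalization in a function space: in $L_2[-1, 1]$ with Lebesgue measure, orthogonalizing $1, x, x^2, x^3$ produces polynomials that are multiples of the Legendre polynomials. The sketch skips the normalization step, which keeps all arithmetic rational. Polynomials are stored as coefficient lists $[a_0, a_1, \dots]$. This representation and the helper names are choices made only for this illustration.

```python
from fractions import Fraction

def inner(p, q):
    """(p, q) = integral over [-1, 1] of p(x) q(x) dx for coefficient lists,
    using integral of x^m = 2/(m+1) for even m and 0 for odd m."""
    total = Fraction(0)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            if (i + j) % 2 == 0:
                total += Fraction(a) * b * Fraction(2, i + j + 1)
    return total

def sub_scaled(p, c, q):
    """Return p - c*q for coefficient lists of possibly different lengths."""
    n = max(len(p), len(q))
    p = list(p) + [0] * (n - len(p))
    q = list(q) + [0] * (n - len(q))
    return [a - c * b for a, b in zip(p, q)]

def schmidt_orthogonalize(funcs):
    """Schmidt orthogonalization without normalization (stays exact)."""
    result = []
    for f in funcs:
        g = [Fraction(a) for a in f]
        for u in result:
            g = sub_scaled(g, inner(f, u) / inner(u, u), u)
        result.append(g)
    return result

monomials = [[1], [0, 1], [0, 0, 1], [0, 0, 0, 1]]  # 1, x, x^2, x^3
ortho = schmidt_orthogonalize(monomials)
print(ortho)  # monic Legendre polynomials: 1, x, x^2 - 1/3, x^3 - (3/5)x
```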

The theory of orthogonal series mainly considers orthogonal systems of the space $L_2[a, b]$. This is the special case where $X = [a, b]$, S is the system of Lebesgue-measurable sets and μ is the Lebesgue measure. Many theorems on the convergence or summability of series $\sum_{n} c_n \varphi_n(x)$ with respect to general orthonormal systems $\{\varphi_n(x)\}$ of $L_2[a, b]$ also hold for series with respect to orthonormal systems of $L_2(X, S, \mu)$. At the same time, in this particular case, interesting concrete orthogonal systems with certain good properties have been constructed, for example, the Haar, Rademacher, Walsh–Paley and Franklin systems.

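1) The Haar system:

$$\chi_1(t) = 1, \qquad \chi_m(t) = \begin{cases} \sqrt{2^n}, & t \in \left(\frac{2k-2}{2^{n+1}}, \frac{2k-1}{2^{n+1}}\right), \\ -\sqrt{2^n}, & t \in \left(\frac{2k-1}{2^{n+1}}, \frac{2k}{2^{n+1}}\right), \\ 0, & t \notin \left[\frac{k-1}{2^n}, \frac{k}{2^n}\right], \end{cases}$$

where $m = 2^n + k$, $n = 0, 1, \dots$, $k = 1, \dots, 2^n$, so that $m = 2, 3, \dots$. Haar series are a typical example of martingales, and the general theorems of martingale theory apply to them. In addition, the system is a basis in $L_p[0, 1]$, $1 \le p < \infty$, and the Fourier series of any integrable function with respect to the Haar system converges almost everywhere.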
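The definition above can be checked directly. The sketch implements $\chi_m$ on $[0, 1]$ and computes the Gram matrix of $\chi_1, \dots, \chi_{16}$ by a midpoint rule on 64 equal cells. Each of these functions is constant on every such cell, so for them the rule is exact up to rounding. Values at the dyadic break points are set to 0 here, which does not affect any integral. The function name `haar` is a choice made for this sketch.

```python
import math

def haar(m, t):
    """Haar function chi_m(t) on [0, 1]; m = 2**n + k with 1 <= k <= 2**n for m >= 2."""
    if m == 1:
        return 1.0
    n = (m - 1).bit_length() - 1
    k = m - 2 ** n
    left, mid, right = (k - 1) / 2 ** n, (2 * k - 1) / 2 ** (n + 1), k / 2 ** n
    if left < t < mid:
        return math.sqrt(2 ** n)
    if mid < t < right:
        return -math.sqrt(2 ** n)
    return 0.0

N = 64   # midpoint grid finer than every break point of chi_1, ..., chi_16
M = 16
gram = [[sum(haar(i, (j + 0.5) / N) * haar(l, (j + 0.5) / N) for j in range(N)) / N
         for l in range(1, M + 1)]
        for i in range(1, M + 1)]
print(all(abs(gram[i][l] - (i == l)) < 1e-12 for i in range(M) for l in range(M)))
```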

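2) The Rademacher system

$$r_n(t) = \operatorname{sign} \sin(2^n \pi t), \qquad n = 1, 2, \dots, \quad t \in [0, 1],$$

is an important example of an orthogonal system of independent functions. It has applications both in probability theory and in the theory of orthogonal and general function series.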

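3) The Walsh–Paley system $\{w_n(t)\}$ is defined through the Rademacher functions:

$$w_0(t) = 1, \qquad w_n(t) = \prod_{k=0}^{\infty} \left[r_{k+1}(t)\right]^{\theta_k}, \quad n = 1, 2, \dots,$$

where the numbers $\theta_k \in \{0, 1\}$ are determined by the binary expansion of n:

$$n = \sum_{k=0}^{\infty} \theta_k 2^k$$

(only finitely many $\theta_k$ are nonzero, so the product is finite).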
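The two definitions above translate almost literally into code. In the sketch below, the factor $r_{k+1}$ enters $w_n$ exactly when the k-th binary digit of n is 1. Orthonormality of $w_0, \dots, w_{15}$ is then checked on 32 equal cells. These functions are constant on each cell, so the check is exact in integer arithmetic. The grid size and the function names are choices made for this illustration.

```python
import math

def rademacher(n, t):
    """r_n(t) = sign(sin(2^n * pi * t)) for n >= 1, returned as -1, 0 or 1."""
    s = math.sin(2 ** n * math.pi * t)
    return (s > 0) - (s < 0)

def walsh_paley(n, t):
    """w_n(t) = product over binary digits theta_k of n of r_{k+1}(t)^theta_k."""
    value, k = 1, 0
    while n:
        if n & 1:              # theta_k = 1: include the factor r_{k+1}(t)
            value *= rademacher(k + 1, t)
        n >>= 1
        k += 1
    return value

N = 32   # grid midpoints never hit a zero of r_1, ..., r_4
points = [(j + 0.5) / N for j in range(N)]
gram = [[sum(walsh_paley(a, t) * walsh_paley(b, t) for t in points) / N
         for b in range(16)] for a in range(16)]
print([walsh_paley(3, (j + 0.5) / 4) for j in range(4)])   # w_3 = r_1 * r_2
```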

4) The Franklin system is obtained by applying the Schmidt orthogonalization method to the Faber–Schauder system of continuous piecewise-linear functions on [0, 1].

It is an example of an orthogonal basis of the space C[0, 1] of continuous functions.

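In the theory of multiple orthogonal series, one considers systems of functions of the form

$$\varphi_{n_1}(x_1)\,\varphi_{n_2}(x_2)\cdots\varphi_{n_m}(x_m), \qquad n_1, \dots, n_m = 1, 2, \dots,$$

where $\{\varphi_n(x)\}$ is an orthonormal system in $L_2[a, b]$.

Such systems are orthonormal on the m-dimensional cube $J^m = [a, b] \times \dots \times [a, b]$ and are complete if the system $\{\varphi_n(x)\}$ is complete.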

A. A. Talalyan.


Definition 1. A system of vectors $(e_1, \dots, e_k)$ of a Euclidean space is called orthogonal if all its elements are pairwise orthogonal:

$$(e_i, e_j) = 0, \qquad i \neq j.$$

Theorem 1. An orthogonal system of non-zero vectors is linearly independent.

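Proof. Assume the system is linearly dependent: $\lambda_1 e_1 + \dots + \lambda_k e_k = 0$ and, to be definite, $\lambda_1 \neq 0$. Take the scalar product of this equality with $e_1$. Because the system is orthogonal, this gives $\lambda_1 (e_1, e_1) = 0$. Since $e_1 \neq 0$, we have $(e_1, e_1) > 0$, hence $\lambda_1 = 0$, a contradiction.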

Definition 2. A system of vectors $(e_1, \dots, e_k)$ of a Euclidean space is called orthonormal if it is orthogonal and the norm of each element equals one.

It follows immediately from Theorem 1 that an orthonormal system of elements is always linearly independent. This in turn implies that in an n-dimensional Euclidean space an orthonormal system of n vectors forms a basis (for example, (i, j, k) in three-dimensional space). Such a system is called an orthonormal basis, and its vectors are called basis vectors.

The coordinates of a vector in an orthonormal basis are easily computed using the scalar product: if $x = \alpha_1 e_1 + \dots + \alpha_n e_n$, then $\alpha_i = (x, e_i)$. Indeed, taking the scalar product of this equality with $e_i$ gives the indicated formula.

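In general, all the basic quantities take their simplest form in an orthonormal basis: the scalar product of vectors, the length of a vector, the cosine of the angle between vectors, etc. Consider the scalar product. If $x = \sum_i \alpha_i e_i$ and $y = \sum_j \beta_j e_j$, then

$$(x, y) = \sum_{i=1}^{n}\sum_{j=1}^{n} \alpha_i \beta_j (e_i, e_j) = \sum_{i=1}^{n} \alpha_i \beta_i,$$

since $(e_i, e_i) = 1$ and all the other terms are equal to zero. From this we immediately get

$$|x| = \sqrt{\sum_{i=1}^{n} \alpha_i^2}, \qquad \cos \varphi = \frac{\sum_{i=1}^{n} \alpha_i \beta_i}{\sqrt{\sum_{i=1}^{n} \alpha_i^2}\,\sqrt{\sum_{i=1}^{n} \beta_i^2}}.$$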

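Now consider an arbitrary basis $(f_1, \dots, f_n)$. If $x = \sum_i \alpha_i f_i$ and $y = \sum_j \beta_j f_j$, the scalar product in this basis equals

$$(x, y) = \sum_{i=1}^{n}\sum_{j=1}^{n} \gamma_{ij}\, \alpha_i \beta_j$$

(here $\alpha_i$ and $\beta_j$ are the coordinates of the vectors in the basis (f), and $\gamma_{ij} = (f_i, f_j)$ are the scalar products of the basis vectors).

The quantities $\gamma_{ij}$ form a matrix G, called the Gram matrix. In matrix form the scalar product reads

$$(x, y) = \alpha^{T} G\, \beta,$$

where α and β are the coordinate columns of x and y.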
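A concrete check of the Gram-matrix formula, in a non-orthogonal basis $f_1 = (1, 0)$, $f_2 = (1, 1)$ of $\mathbb{R}^2$ chosen only for illustration. The sketch compares $\alpha^T G \beta$ with the scalar product computed directly. It also shows that the naive sum of coordinate products gives a different number, as expected for a basis that is not orthonormal.

```python
def dot(u, v):
    """Standard scalar product in R^n."""
    return sum(a * b for a, b in zip(u, v))

def combine(coords, basis):
    """Vector with the given coordinates in the given basis."""
    return tuple(sum(c * b[k] for c, b in zip(coords, basis)) for k in range(len(basis[0])))

f = [(1, 0), (1, 1)]                          # illustrative non-orthogonal basis
G = [[dot(fi, fj) for fj in f] for fi in f]   # Gram matrix gamma_ij = (f_i, f_j)

alpha = (2, 3)    # x = 2 f_1 + 3 f_2
beta = (-1, 4)    # y = -f_1 + 4 f_2
x, y = combine(alpha, f), combine(beta, f)

via_gram = sum(alpha[i] * G[i][j] * beta[j] for i in range(2) for j in range(2))
direct = dot(x, y)
naive = dot(alpha, beta)   # sum of products of coordinates: wrong here
print(G, via_gram, direct, naive)
```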

Theorem 2. In any n-dimensional Euclidean space there exists an orthonormal basis. The proof of the theorem is constructive; the construction is known as the Gram–Schmidt orthogonalization process.

9. Gram–Schmidt orthogonalization process.

Let $(a_1, \dots, a_n)$ be an arbitrary basis of an n-dimensional Euclidean space (such a basis exists because the space is n-dimensional). The algorithm for constructing an orthonormal basis from the given basis is as follows:

1. $b_1 = a_1$, $e_1 = b_1/|b_1|$, $|e_1| = 1$.

2. $b_2 \perp e_1$, because $(e_1, a_2)$ is the projection of $a_2$ onto $e_1$: $b_2 = a_2 - (e_1, a_2)\,e_1$, $e_2 = b_2/|b_2|$, $|e_2| = 1$.

3. $b_3 \perp a_1$, $b_3 \perp a_2$: $b_3 = a_3 - (e_1, a_3)\,e_1 - (e_2, a_3)\,e_2$, $e_3 = b_3/|b_3|$, $|e_3| = 1$.

…

k. $b_k \perp a_1, \dots, a_{k-1}$: $b_k = a_k - \sum_{i=1}^{k-1} (e_i, a_k)\,e_i$, $e_k = b_k/|b_k|$, $|e_k| = 1$.

Continuing the process, we obtain an orthonormal basis $(e_1, \dots, e_n)$. Each $b_k \neq 0$ because $a_1, \dots, a_k$ are linearly independent.
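The steps above can be sketched directly in Python, with floating-point vectors and the standard scalar product on $\mathbb{R}^n$. The sketch assumes that the input vectors are linearly independent, as in Theorem 2. The sample basis is an arbitrary illustrative choice.

```python
import math

def dot(u, v):
    """Standard scalar product in R^n."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(basis):
    """Classical Gram-Schmidt: b_k = a_k - sum_{i<k} (e_i, a_k) e_i, e_k = b_k / |b_k|.
    Assumes the input vectors are linearly independent (so |b_k| > 0)."""
    es = []
    for a in basis:
        b = list(a)
        for e in es:
            proj = dot(e, a)                     # (e_i, a_k)
            b = [bj - proj * ej for bj, ej in zip(b, e)]
        norm = math.sqrt(dot(b, b))
        es.append([bj / norm for bj in b])
    return es

E = gram_schmidt([(1, 1, 0), (1, 0, 1), (0, 1, 1)])
# (e_i, e_j) should be 1 for i == j and 0 otherwise.
print([[round(dot(ei, ej), 12) for ej in E] for ei in E])
```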

Note 1. Using this algorithm, one can construct an orthonormal basis of any linear span. For example, it gives an orthonormal basis of the linear span of a system of five-dimensional vectors of rank three.

Example. x = (3, 4, 0, 1, 2), y = (3, 0, 4, 1, 2), z = (0, 4, 3, 1, 2).
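For this example, the orthogonalization can be carried out exactly with `fractions.Fraction`. The sketch below stops before the normalization step $e_k = b_k/|b_k|$, because normalization introduces square roots. It prints the orthogonal vectors $b_1, b_2, b_3$ and their squared lengths $|b_k|^2$. A nonzero $b_3$ confirms that the system has rank three.

```python
from fractions import Fraction

def dot(u, v):
    """Standard scalar product in R^5."""
    return sum(a * b for a, b in zip(u, v))

def orthogonalize(vectors):
    """Gram-Schmidt without normalization:
    b_k = a_k - sum_i (a_k, b_i) / (b_i, b_i) * b_i, kept exact with Fractions."""
    bs = []
    for a in vectors:
        b = [Fraction(c) for c in a]
        for prev in bs:
            coef = dot(a, prev) / dot(prev, prev)
            b = [bj - coef * pj for bj, pj in zip(b, prev)]
        bs.append(b)
    return bs

x, y, z = (3, 4, 0, 1, 2), (3, 0, 4, 1, 2), (0, 4, 3, 1, 2)
b1, b2, b3 = orthogonalize([x, y, z])
norms_sq = [dot(b, b) for b in (b1, b2, b3)]   # e_k = b_k / sqrt(|b_k|^2)
print(b2, b3, norms_sq)
```

With these values, $e_1 = x/\sqrt{30}$, $e_2 = b_2/\sqrt{352/15}$ and $e_3 = b_3/\sqrt{144/11}$.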

Note 2. Special cases

The Gram-Schmidt process can also be applied to an infinite sequence of linearly independent vectors.

In addition, the Gram–Schmidt process can be applied to linearly dependent vectors. In this case it produces 0 (the zero vector) at step j if $a_j$ is a linear combination of the vectors $a_1, \dots, a_{j-1}$. If this can happen, the algorithm must check for zero vectors and discard them. This keeps the output vectors orthogonal and prevents division by zero during normalization. The number of vectors produced by the algorithm then equals the dimension of the subspace spanned by the input vectors, i.e., the maximal number of linearly independent vectors that can be chosen among the original vectors.
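In floating-point arithmetic an exact zero rarely appears, so a practical version compares the residual norm with a tolerance. The relative threshold `tol=1e-10` in the sketch below is an arbitrary assumption. The sketch projects the running residual rather than the original vector (the "modified" Gram–Schmidt ordering). In exact arithmetic this gives the same result and is usually more stable numerically.

```python
import math

def dot(u, v):
    """Standard scalar product in R^n."""
    return sum(a * b for a, b in zip(u, v))

def orthonormalize(vectors, tol=1e-10):
    """Gram-Schmidt that discards (near-)zero residuals instead of dividing by zero.
    tol is an assumed threshold relative to the norm of the input vector."""
    es = []
    for a in vectors:
        b = [float(c) for c in a]
        for e in es:
            proj = dot(e, b)       # project the running residual (modified ordering)
            b = [bj - proj * ej for bj, ej in zip(b, e)]
        norm = math.sqrt(dot(b, b))
        if norm <= tol * max(math.sqrt(dot(a, a)), 1.0):
            continue               # a_j depends linearly on the previous vectors
        es.append([bj / norm for bj in b])
    return es

vecs = [(1, 2, 0), (2, 4, 0), (0, 1, 1), (1, 3, 1)]   # 2nd = 2*1st, 4th = 1st + 3rd
E = orthonormalize(vecs)
print(len(E))   # 2, the dimension of the span
```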

10. Geometric vector spaces R1, R2, R3.

Let us emphasize that only the spaces $\mathbb{R}^1$, $\mathbb{R}^2$, $\mathbb{R}^3$ have a direct geometric meaning. The space $\mathbb{R}^n$ for n > 3 is an abstract, purely mathematical object.

1) Let a system of two vectors a and b be given. If the system is linearly dependent, then one of the vectors, say a, is expressed linearly through the other:

a = kb.

Two vectors connected by such a dependence are, as already mentioned, called collinear. Thus, a system of two vectors is linearly dependent if and only if these vectors are collinear. Note that this conclusion applies not only to $\mathbb{R}^3$ but to any linear space.

2) Let a system in $\mathbb{R}^3$ consist of three vectors a, b, c. Linear dependence means that one of the vectors, say a, is expressed linearly through the others:

a = kb + lc. (*)

Definition. Three vectors a, b, c in $\mathbb{R}^3$ that lie in one plane or are parallel to one plane are called coplanar.

(In the figure, on the left the vectors a, b, c are drawn in one plane; on the right the same vectors are drawn from different origins and are only parallel to one plane.)

So, if three vectors in R3 are linearly dependent, then they are coplanar. The converse is also true: if the vectors a, b, c from R3 are coplanar, then they are linearly dependent.
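In coordinates, three vectors of $\mathbb{R}^3$ are linearly dependent (equivalently, coplanar) exactly when the determinant of the matrix with rows a, b, c vanishes. The sketch below tests this for a vector built as in (*), with the illustrative values k = 3, l = 2. It uses exact integer arithmetic; floating-point input would need a tolerance.

```python
def det3(a, b, c):
    """Determinant of the 3x3 matrix with rows a, b, c."""
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            - a[1] * (b[0] * c[2] - b[2] * c[0])
            + a[2] * (b[0] * c[1] - b[1] * c[0]))

def coplanar(a, b, c):
    """True iff a, b, c are linearly dependent, i.e. coplanar (exact for integers)."""
    return det3(a, b, c) == 0

b, c = (1, 0, 2), (0, 1, -1)
a = tuple(3 * bi + 2 * ci for bi, ci in zip(b, c))    # a = kb + lc with k = 3, l = 2
print(coplanar(a, b, c), coplanar((1, 0, 0), (0, 1, 0), (0, 0, 1)))   # True False
```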

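The vector product of a vector a by a vector b in space is the vector c that satisfies the following requirements:

1) the length of c equals $|a|\,|b| \sin \varphi$, where φ is the angle between a and b;

2) c is orthogonal to both a and b;

3) if c ≠ 0, the triple a, b, c is right-handed.

Notation: $c = a \times b$ (also written $[a, b]$).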
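When coordinates are taken in a right-handed orthonormal basis (i, j, k), the vector product has the standard component formula used below. The sketch checks the orthogonality requirement directly. It checks the length requirement through the identity $|a \times b|^2 = |a|^2 |b|^2 - (a, b)^2$, which equals $|a|^2|b|^2\sin^2\varphi$. The sample vectors are arbitrary.

```python
def cross(a, b):
    """Vector product a x b in coordinates of a right-handed orthonormal basis."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(u, v):
    """Scalar product in an orthonormal basis."""
    return sum(x * y for x, y in zip(u, v))

a, b = (1, 2, 3), (4, 5, 6)
c = cross(a, b)
orthogonal = dot(c, a) == 0 and dot(c, b) == 0
length_ok = dot(c, c) == dot(a, a) * dot(b, b) - dot(a, b) ** 2
print(c, orthogonal, length_ok)   # (-3, 6, -3) True True
```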

Consider an ordered triple of non-coplanar vectors a, b, c in three-dimensional space. Let us bring their origins to a common point A: choose an arbitrary point A in space and translate each vector parallel to itself so that its origin coincides with A. Once the origins are placed at A, the ends of the vectors do not lie on one straight line, since the vectors are non-coplanar.

An ordered triple of non-coplanar vectors a, b, c in three-dimensional space is called right-handed if, viewed from the end of the vector c, the shortest rotation from the vector a to the vector b appears counterclockwise. If instead the shortest rotation appears clockwise, the triple is called left-handed.

Another definition uses the right hand (see the figure), which is where the name comes from.

All right-handed triples of vectors are said to have the same orientation, and so are all left-handed triples.
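In coordinates relative to a right-handed orthonormal basis (i, j, k), the orientation of a triple can be read off from the sign of the mixed product $(a \times b, c)$, i.e., the 3×3 determinant with rows a, b, c. The triple is right-handed when this number is positive, left-handed when it is negative, and coplanar when it is zero. The sketch below rests on that assumption about the basis. It also shows that a cyclic permutation keeps the orientation, while swapping two vectors reverses it.

```python
def mixed_product(a, b, c):
    """(a x b, c), computed as the determinant with rows a, b, c."""
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            - a[1] * (b[0] * c[2] - b[2] * c[0])
            + a[2] * (b[0] * c[1] - b[1] * c[0]))

def orientation(a, b, c):
    """'right', 'left' or 'coplanar', assuming coordinates in a right-handed orthonormal basis."""
    v = mixed_product(a, b, c)
    if v > 0:
        return "right"
    if v < 0:
        return "left"
    return "coplanar"

i, j, k = (1, 0, 0), (0, 1, 0), (0, 0, 1)
print(orientation(i, j, k), orientation(j, k, i), orientation(j, i, k))   # right right left
```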