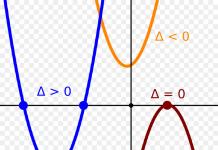

By processing independent measurements of the random variable ξ, we can construct the empirical (statistical) distribution function $F^*(x)$. Based on the form of this function, we can put forward the hypothesis that the true theoretical distribution function is $F(x)$. The independent measurements $(x_1, x_2, \dots, x_n)$ forming the sample can then be regarded as identically distributed random variables with the hypothesized distribution function $F(x)$.

Obviously, there will be some discrepancies between the functions $F^*(x)$ and $F(x)$. The question is whether these discrepancies are a consequence of the limited sample size or arise because the hypothesis is incorrect, i.e. the real distribution function is not $F(x)$ but some other one. To resolve this question, goodness-of-fit criteria are used, the essence of which is as follows. A quantity $\Delta(F, F^*)$ is chosen that characterizes the degree of discrepancy between $F^*(x)$ and $F(x)$, for example $\Delta(F, F^*) = \sup_x |F(x) - F^*(x)|$, i.e. the least upper bound over $x$ of the absolute value of the difference.

Assuming the hypothesis is correct, i.e. knowing the distribution function $F(x)$, one can find the distribution law of the random variable $\Delta(F, F^*)$ (we will not touch on how to do this). Let us choose a number $p_0$ so small that the event $\Delta(F, F^*) > \Delta_0$, which occurs with this probability, can be considered practically impossible. From the condition

$$P\bigl(\Delta(F, F^*) > \Delta_0\bigr) = \int_{\Delta_0}^{\infty} f(x)\,dx = p_0$$

we find the value $\Delta_0$. Here $f(x)$ is the probability density of $\Delta(F, F^*)$.

Let us now calculate the value $\Delta(F, F^*) = \Delta_1$ from the sample, i.e. find one of the possible values of the random variable $\Delta(F, F^*)$. If $\Delta_1 \ge \Delta_0$, a practically impossible event has occurred, which can be explained by the hypothesis being incorrect. So if $\Delta_1 \ge \Delta_0$, the hypothesis is rejected; if $\Delta_1 < \Delta_0$, the hypothesis is not rejected: it may still turn out to be incorrect, but the probability of this is small.

Various quantities can be taken as the measure of discrepancy $\Delta(F, F^*)$, and different goodness-of-fit criteria are obtained accordingly: for example, the Kolmogorov test, the Mises test, or the Pearson (chi-square) test.

Let the results of n measurements be presented as a grouped statistical series with k class intervals.

… $(x_0, x_1)$ (in effect, we assume that the measurement errors are distributed uniformly over a certain segment). Then the probabilities of falling into each of the seven class intervals are equal. Using the grouped series from §11, we calculate $\Delta(F, F^*) = \Delta_1$ using formula (1).

Since the hypothetical distribution law includes two unknown parameters, α and β (the beginning and end of the segment), the number of degrees of freedom is 7 − 1 − 2 = 4. Using the chi-square distribution table with the selected probability $p_0 = 10^{-3}$, we find $\Delta_0 \approx 18$. Since $\Delta_1 > \Delta_0$, the hypothesis of a uniform distribution of the measurement error has to be rejected.
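The table lookup above can be checked numerically. The sketch below is only an illustration: it uses the closed-form upper tail of the chi-square distribution that holds for an even number of degrees of freedom, and the function names `chi2_sf_even` and `chi2_critical_even` are hypothetical helpers, not part of the original text.

```python
import math

def chi2_sf_even(x, df):
    """Upper-tail probability P(X > x) of a chi-square law with even df.

    For df = 2m the tail is exp(-x/2) * sum_{j<m} (x/2)^j / j!.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form needs a positive even df")
    half = x / 2.0
    term, total = 1.0, 1.0
    for j in range(1, df // 2):
        term *= half / j
        total += term
    return math.exp(-half) * total

def chi2_critical_even(p0, df, hi=200.0):
    """Solve P(X > x) = p0 for x by bisection (the tail decreases in x)."""
    lo = 0.0
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if chi2_sf_even(mid, df) > p0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

df = 7 - 1 - 2                      # seven intervals, two estimated parameters
delta0 = chi2_critical_even(1e-3, df)
print(round(delta0, 2))
```

Under these assumptions the computed threshold comes out slightly above 18, which agrees with the rounded table value quoted in the text.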

Introduction

This topic is relevant because, in studying the basics of biostatistics, we assumed that the distribution law of the population was known. If the distribution law is unknown but there is reason to assume that it has a certain form (call it A), the null hypothesis is tested that the general population is distributed according to law A. This hypothesis is tested using a specially selected random variable, the goodness-of-fit criterion.

Goodness-of-fit criteria are criteria for testing hypotheses about the correspondence of the empirical distribution to the theoretical probability distribution. Such criteria are divided into two classes:

- General goodness-of-fit tests apply to the most general formulation of the hypothesis, namely the hypothesis that the observed results agree with any a priori assumed probability distribution.

- Special goodness-of-fit tests involve special null hypotheses that state agreement with a particular form of probability distribution.

Goodness-of-fit criteria

The most common goodness-of-fit tests are omega-square, chi-square, Kolmogorov, and Kolmogorov-Smirnov.

The nonparametric Kolmogorov, Smirnov, and omega-square goodness-of-fit tests are widely used. However, they are also associated with widespread errors in the application of statistical methods.

The point is that these criteria were developed to test agreement with a fully known theoretical distribution. Calculation formulas, distribution tables, and critical values are widely available. The main idea of the Kolmogorov, omega-square, and similar tests is to measure the distance between the empirical and theoretical distribution functions. The criteria differ in the type of distance used in the space of distribution functions.

Pearson goodness-of-fit tests for a simple hypothesis

K. Pearson's theorem applies to independent trials with a finite number of outcomes, i.e. to Bernoulli trials (in a slightly extended sense). It allows us to judge whether the outcome frequencies observed over a large number of trials are consistent with their hypothesized probabilities.

In many practical problems, the exact distribution law is unknown. Therefore, a hypothesis is put forward that the empirical law constructed from observations corresponds to some theoretical one. This hypothesis requires statistical testing, which will either confirm or refute it.

Let X be the random variable under study. We need to test the hypothesis $H_0$ that this random variable obeys the distribution law $F(x)$. To do this, we take a sample of n independent observations and use it to construct the empirical distribution law $F^*(x)$. To compare the empirical and hypothetical laws, a rule called a goodness-of-fit criterion is used. One of the most popular is K. Pearson's chi-square goodness-of-fit test, in which the chi-square statistic is calculated:

$$\chi^2 = n \sum_{i=1}^{N} \frac{\left(p^{e}_i - p^{t}_i\right)^2}{p^{t}_i},$$

where $n$ is the sample size, $N$ is the number of intervals from which the empirical distribution law was constructed (the number of columns of the corresponding histogram), $i$ is the interval index, $p^{t}_i$ is the probability that the random variable falls into the i-th interval under the theoretical distribution law, and $p^{e}_i$ is the corresponding probability under the empirical distribution law. If $H_0$ is true, this statistic should approximately follow the chi-square distribution.

If the calculated value of the statistic exceeds the quantile of the chi-square distribution with $k - p - 1$ degrees of freedom for the given significance level, the hypothesis $H_0$ is rejected; otherwise it is accepted at that significance level. Here $k$ is the number of intervals (denoted $N$ above) and $p$ is the number of estimated parameters of the distribution law.
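As an illustration of the statistic described above, the following sketch computes $n \sum_i (p^e_i - p^t_i)^2 / p^t_i$ from interval counts and theoretical probabilities, together with the degrees of freedom $k - p - 1$. The function names and the sample counts are hypothetical and chosen only for the example.

```python
def pearson_chi2(counts, theoretical_probs):
    """Chi-square statistic n * sum((p_emp - p_theo)^2 / p_theo)."""
    if len(counts) != len(theoretical_probs):
        raise ValueError("one theoretical probability is needed per interval")
    n = sum(counts)
    total = 0.0
    for m, p_theo in zip(counts, theoretical_probs):
        p_emp = m / n                      # empirical probability of the interval
        total += (p_emp - p_theo) ** 2 / p_theo
    return n * total

def degrees_of_freedom(num_intervals, num_estimated_params=0):
    """k - p - 1, where k counts intervals and p counts estimated parameters."""
    return num_intervals - num_estimated_params - 1

# Hypothetical data: 40 observations in 4 equally likely intervals.
counts = [8, 12, 10, 10]
probs = [0.25, 0.25, 0.25, 0.25]
chi2_value = pearson_chi2(counts, probs)
dof = degrees_of_freedom(len(counts))
print(chi2_value, dof)
```

The resulting value would then be compared with the chi-square quantile for `dof` degrees of freedom at the chosen significance level.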

Consider the statistic

$$\chi^2 = \sum_{i=1}^{r} \frac{(m_i - n p_i)^2}{n p_i} = n \sum_{i=1}^{r} \frac{1}{p_i}\left(\frac{m_i}{n} - p_i\right)^2, \qquad (2.2)$$

where $m_i$ is the number of trials that ended in the i-th outcome, $n$ is the total number of trials, and $p_i$ is the hypothesized probability of the i-th outcome.

The $\chi^2$ statistic is called the Pearson chi-square statistic for a simple hypothesis.

It is clear that $\chi^2$ is the square of a certain distance between two r-dimensional vectors: the vector of relative frequencies $(m_1/n, \dots, m_r/n)$ and the vector of probabilities $(p_1, \dots, p_r)$. This distance differs from the Euclidean distance only in that the coordinates enter it with different weights.

Let us discuss the behavior of the $\chi^2$ statistic when the hypothesis H is true and when H is false. If H is true, the asymptotic behavior of $\chi^2$ as $n \to \infty$ is given by K. Pearson's theorem. To understand what happens to (2.2) when H is false, note that by the law of large numbers $m_i/n \to p_i'$ as $n \to \infty$ for $i = 1, \dots, r$, where $p_i'$ are the true outcome probabilities. Therefore, as $n \to \infty$:

$$\frac{\chi^2}{n} \to \sum_{i=1}^{r} \frac{(p_i' - p_i)^2}{p_i}.$$

This quantity is strictly positive when H is false, since then $p_i' \ne p_i$ for at least one $i$. Therefore, if H is incorrect, $\chi^2 \to \infty$ as $n \to \infty$.

From the above it follows that H should be rejected if the value of $\chi^2$ obtained in the experiment is too large. Here, as always, "too large" means that the observed value of $\chi^2$ exceeds the critical value, which in this case can be taken from chi-square distribution tables. In other words, the probability $P(\chi^2_{r-1} \ge \chi^2)$ is small, so it is unlikely that a discrepancy between the frequency vector and the probability vector as large as, or larger than, the one observed would arise by chance.

The asymptotic nature of K. Pearson's theorem, which underlies this rule, calls for caution in practical use. The rule can be relied upon only for large n, and whether n is large enough must be judged taking into account the probabilities $p_1, \dots, p_r$. One cannot say, for example, that one hundred observations will suffice, because not only must n be large, but the products $np_1, \dots, np_r$ (the expected frequencies) must not be small either. The problem of approximating the discrete distribution of the $\chi^2$ statistic by the continuous chi-square distribution has therefore proved difficult. A combination of theoretical and experimental arguments has led to the belief that the approximation is applicable if all expected frequencies satisfy $np_i > 10$. As the number r of different outcomes increases, the lower bound on $np_i$ can be reduced (to 5, or even to 3 if r is of the order of several tens). To meet these requirements, in practice it is sometimes necessary to combine several outcomes, i.e. to switch to a Bernoulli scheme with smaller r.
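Combining outcomes so that every expected frequency is large enough can be sketched as follows. This is one possible greedy strategy (merging neighbouring outcomes from left to right), given here as an assumption rather than a prescribed procedure; the function name, the threshold default, and the data are hypothetical.

```python
def merge_small_outcomes(counts, probs, min_expected=5.0):
    """Merge adjacent outcomes until each expected count n*p >= min_expected."""
    n = sum(counts)
    merged_counts, merged_probs = [], []
    acc_count, acc_prob, pending = 0, 0.0, False
    for m, p in zip(counts, probs):
        acc_count += m
        acc_prob += p
        pending = True
        if n * acc_prob >= min_expected:
            merged_counts.append(acc_count)
            merged_probs.append(acc_prob)
            acc_count, acc_prob, pending = 0, 0.0, False
    if pending:
        # A trailing group that is still too small joins the previous group.
        if merged_counts:
            merged_counts[-1] += acc_count
            merged_probs[-1] += acc_prob
        else:
            merged_counts.append(acc_count)
            merged_probs.append(acc_prob)
    return merged_counts, merged_probs

# Hypothetical data: 50 observations over 7 outcomes with small tails.
counts = [1, 2, 10, 20, 12, 4, 1]
probs = [0.02, 0.04, 0.20, 0.40, 0.26, 0.06, 0.02]
new_counts, new_probs = merge_small_outcomes(counts, probs)
print(new_counts)
```

After merging, the number of outcomes r (and hence the degrees of freedom) is smaller, which is the price paid for a more trustworthy chi-square approximation.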

The described method of checking agreement can be applied not only to Bernoulli trials but also to random samples. The observations must first be turned into Bernoulli trials by grouping: the observation space is divided into a finite number of non-overlapping regions, and the observed frequency and the hypothetical probability are then calculated for each region.

In this case, one more difficulty is added to the approximation difficulties listed above: the choice of a reasonable partition of the original space. Care must be taken that the resulting rule for testing the hypothesis about the distribution of the sample is sufficiently sensitive to possible alternatives. Finally, note that statistical criteria based on reduction to the Bernoulli scheme are, as a rule, not consistent against all alternatives, so this method of checking agreement is of limited value.

The Kolmogorov-Smirnov goodness-of-fit test in its classical form is more powerful than the $\chi^2$ test and can be used to test the hypothesis that the empirical distribution corresponds to any theoretical continuous distribution F(x) with parameters known in advance. The latter condition restricts the wide practical use of this test in analyzing the results of mechanical tests, since the parameters of the distribution function of mechanical-property characteristics are, as a rule, estimated from the sample itself.

The Kolmogorov-Smirnov test is used for ungrouped data, or for grouped data when the interval width is small (for example, equal to the scale division of a force meter, a load-cycle counter, etc.). Let the result of testing a series of n specimens be a variation series of mechanical-property characteristics

$$x_1 \le x_2 \le \dots \le x_i \le \dots \le x_n. \qquad (3.93)$$

It is required to test the null hypothesis that the sample (3.93) follows the theoretical distribution law $F(x)$.

The Kolmogorov-Smirnov test is based on the distribution of the maximum deviation of the cumulative relative frequency from the value of the distribution function. To apply it, the following statistics are calculated:

$$D_n^+ = \max_{1 \le i \le n}\left(\frac{i}{n} - F(x_i)\right), \qquad D_n^- = \max_{1 \le i \le n}\left(F(x_i) - \frac{i-1}{n}\right), \qquad D_n = \max\left(D_n^+, D_n^-\right),$$

the last of which is the statistic of the Kolmogorov test. If the inequality

$$D_n \sqrt{n} \le \lambda_\alpha \qquad (3.97)$$

holds for large sample sizes ($n > 35$), or

$$D_n\left(\sqrt{n} + 0.12 + \frac{0.11}{\sqrt{n}}\right) \le \lambda_\alpha \qquad (3.98)$$

holds for $n \le 35$, the null hypothesis is not rejected.

If inequalities (3.97) and (3.98) are not satisfied, the alternative hypothesis is accepted that the sample (3.93) belongs to an unknown distribution.

The critical values $\lambda_\alpha$ are: $\lambda_{0.1} = 1.22$; $\lambda_{0.05} = 1.36$; $\lambda_{0.01} = 1.63$.
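The decision rule with inequalities (3.97) and (3.98) can be sketched as below for a fully specified continuous distribution. The statistic is computed in the usual way, as the largest gap between the empirical step function and the hypothesized distribution function; the function names and the two samples are hypothetical and serve only as an illustration.

```python
import math

# Critical values quoted in the text for a fully specified F(x).
LAMBDA_CRIT = {0.10: 1.22, 0.05: 1.36, 0.01: 1.63}

def ks_statistic(sample, cdf):
    """D_n = max over the ordered sample of the gaps on both sides of each step."""
    xs = sorted(sample)
    n = len(xs)
    d_plus = max((i + 1) / n - cdf(x) for i, x in enumerate(xs))
    d_minus = max(cdf(x) - i / n for i, x in enumerate(xs))
    return max(d_plus, d_minus)

def ks_not_rejected(sample, cdf, alpha=0.05):
    """Apply (3.97) for n > 35 and (3.98) otherwise; True means 'not rejected'."""
    n = len(sample)
    d_n = ks_statistic(sample, cdf)
    root = math.sqrt(n)
    if n > 35:
        scaled = d_n * root
    else:
        scaled = d_n * (root + 0.12 + 0.11 / root)
    return scaled <= LAMBDA_CRIT[alpha], d_n

def uniform_cdf(x):
    """Distribution function of the uniform law on [0, 1]."""
    return min(max(x, 0.0), 1.0)

# Hypothetical samples: one evenly spread, one crowded near 1.
even_sample = [0.05, 0.15, 0.25, 0.35, 0.45, 0.55, 0.65, 0.75, 0.85, 0.95]
skewed_sample = [0.90, 0.91, 0.92, 0.93, 0.94, 0.95, 0.96, 0.97, 0.98, 0.99]
ok_even, d_even = ks_not_rejected(even_sample, uniform_cdf)
ok_skewed, d_skewed = ks_not_rejected(skewed_sample, uniform_cdf)
print(ok_even, ok_skewed)
```

Note that this sketch is valid only when the parameters of F(x) are known in advance; the case of estimated parameters needs different critical values.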

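If the parameters of the function F(x) are not known in advance but are estimated from the sample data, the Kolmogorov-Smirnov test loses its universality and can be used only to check whether the experimental data conform to certain specific distribution functions.

When the null hypothesis is that the experimental data follow a normal or lognormal distribution, the following statistics are calculated:

$$D_n^+ = \max_{1 \le i \le n}\left(\frac{i}{n} - \Phi(z_i)\right), \qquad D_n^- = \max_{1 \le i \le n}\left(\Phi(z_i) - \frac{i-1}{n}\right), \qquad D_n = \max\left(D_n^+, D_n^-\right),$$

where $\Phi(z_i)$ is the value of the Laplace function for

$$z_i = \frac{x_i - \bar{x}}{s}.$$

The Kolmogorov-Smirnov criterion for any sample size n is written in the form

$$D_n\left(\sqrt{n} - 0.01 + \frac{0.85}{\sqrt{n}}\right) \le \lambda_\alpha.$$

The critical values $\lambda_\alpha$ in this case are: $\lambda_{0.1} = 0.82$; $\lambda_{0.05} = 0.89$; $\lambda_{0.01} = 1.04$.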

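If the hypothesis being tested is that the sample follows an exponential distribution whose parameter is estimated from the experimental data, similar statistics are calculated:

$$D_n^+ = \max_{1 \le i \le n}\left(\frac{i}{n} - \left(1 - e^{-x_i/\bar{x}}\right)\right), \qquad D_n^- = \max_{1 \le i \le n}\left(\left(1 - e^{-x_i/\bar{x}}\right) - \frac{i-1}{n}\right), \qquad D_n = \max\left(D_n^+, D_n^-\right),$$

and the Kolmogorov-Smirnov criterion takes the form

$$\left(D_n - \frac{0.2}{n}\right)\left(\sqrt{n} + 0.26 + \frac{0.5}{\sqrt{n}}\right) \le \lambda_\alpha.$$

The critical values $\lambda_\alpha$ for this case are: $\lambda_{0.1} = 0.99$; $\lambda_{0.05} = 1.09$; $\lambda_{0.01} = 1.31$.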

MINISTRY OF EDUCATION AND SCIENCE OF UKRAINE

AZOV REGIONAL INSTITUTE OF MANAGEMENT

ZAPORIZHIE NATIONAL TECHNICAL UNIVERSITY

Department of Mathematics

COURSE WORK

in the discipline "STATISTICS"

On the topic: "GOODNESS-OF-FIT CRITERIA"

2nd-year student

Group 207 Faculty of Management

Batura Tatyana Olegovna

Scientific supervisor

Associate Professor Kosenkov O.I.

Berdyansk - 2009

INTRODUCTION

1.2 Pearson's χ² goodness-of-fit test for a simple hypothesis

1.3 Goodness-of-fit criteria for a complex hypothesis

1.4 Fisher's χ² goodness-of-fit test for a complex hypothesis

1.5 Other goodness-of-fit criteria. Goodness-of-fit tests for the Poisson distribution

SECTION II. PRACTICAL APPLICATION OF THE GOODNESS-OF-FIT CRITERION

APPENDICES

LIST OF REFERENCES USED

INTRODUCTION

This course work describes the most common goodness-of-fit tests - omega-square, chi-square, Kolmogorov and Kolmogorov-Smirnov. Particular attention is paid to the case when it is necessary to check whether the data distribution belongs to a certain parametric family, for example, normal. Due to its complexity, this situation, which is very common in practice, has not been fully studied and is not fully reflected in educational and reference literature.

Goodness-of-fit criteria are statistical criteria designed to test the agreement between experimental data and a theoretical model. This question is best developed if the observations represent a random sample. The theoretical model in this case describes the distribution law.

The theoretical distribution is the probability distribution that governs random selection. Not only theory can give ideas about it. The sources of knowledge here can be tradition, past experience, and previous observations. We just need to emphasize that this distribution should be chosen regardless of the data against which we are going to check it. In other words, it is unacceptable to first “fit” a certain distribution law using a sample, and then try to check agreement with the obtained law using the same sample.

Simple and complex hypotheses. When speaking about the theoretical distribution law that the elements of a given sample should hypothetically follow, we must distinguish between simple and complex hypotheses about this law:

· a simple hypothesis directly specifies a particular probability law (probability distribution) according to which the sample values arose;

· a complex hypothesis specifies not a single distribution but some set of them (for example, a parametric family).

Goodness-of-fit criteria are based on the use of various measures of distances between the analyzed empirical distribution and the distribution function of the characteristic in the population.


In starting this course work, I set myself the goal of finding out which goodness-of-fit criteria exist and why they are needed. To achieve this goal, the following tasks must be completed:

1. Reveal the essence of the concept of "goodness-of-fit criteria";

2. Determine which goodness-of-fit criteria exist and study each of them separately;

3. Draw conclusions from the work done.

SECTION I. THEORETICAL BACKGROUND OF THE GOODNESS-OF-FIT CRITERION

1.1 Kolmogorov and omega-square goodness-of-fit tests in the case of a simple hypothesis

A simple hypothesis. Let's consider a situation where the measured data are numbers, in other words, one-dimensional random variables. The distribution of one-dimensional random variables can be fully described by specifying their distribution functions. And many goodness-of-fit tests are based on checking the closeness of theoretical and empirical (sample) distribution functions.

Suppose we have a sample of size n. Denote the true distribution function of the observations by $G(x)$, the empirical (sample) distribution function by $F_n(x)$, and the hypothetical distribution function by $F(x)$. Then the hypothesis H that the true distribution function is $F(x)$ is written as H: G(·) = F(·).

How can hypothesis H be tested? If H is true, $F_n$ and $F$ should be similar, and the difference between them should decrease as n increases. By Bernoulli's theorem, $F_n(x) \to F(x)$ as $n \to \infty$. Various methods are used to express the similarity of $F_n$ and $F$ quantitatively.

To express the similarity of functions, one or another distance between them can be used. For example, $F_n$ and $F$ can be compared in the uniform metric, i.e. by considering the quantity

$$D_n = \sup_{-\infty < x < \infty} \left|F_n(x) - F(x)\right|. \qquad (1.1)$$

The $D_n$ statistic is called the Kolmogorov statistic.

Obviously, $D_n$ is a random variable, since its value depends on the random object $F_n$. If the hypothesis $H_0$ is true and $n \to \infty$, then $F_n(x) \to F(x)$ for any x, so it is natural that under these conditions $D_n \to 0$. If $H_0$ is false, then $F_n \to G$ with $G \ne F$, and therefore $\sup_{-\infty < x < \infty} |G(x) - F(x)| > 0$, so $D_n$ does not tend to zero.

As always when testing a hypothesis, we reason as if the hypothesis were true. Clearly, $H_0$ should be rejected if the experimentally obtained value of $D_n$ seems implausibly large. To decide this, we need to know how $D_n$ is distributed under the hypothesis H: F = G for given n and G.

A remarkable property of $D_n$ is that if G = F, i.e. the hypothetical distribution is specified correctly, the distribution law of $D_n$ is the same for all continuous functions G and depends only on the sample size n. The proof rests on the fact that the statistic does not change under monotonic transformations of the x-axis. Such a transformation turns any continuous distribution G into the uniform distribution on the interval [0, 1], and $F_n(x)$ becomes the empirical distribution function of a sample from this uniform distribution.

For small n, tables of percentage points of $D_n$ under $H_0$ have been compiled. For large n, the distribution of $D_n$ under $H_0$ is given by the limit theorem found in 1933 by A.N. Kolmogorov. It concerns the statistic $\sqrt{n}\,D_n$:

$$\lim_{n \to \infty} P\left(\sqrt{n}\,D_n \le z\right) = K(z) = \sum_{k=-\infty}^{\infty} (-1)^k e^{-2k^2 z^2}, \qquad z > 0.$$

This sum is very easy to calculate in Maple.

To test a simple hypothesis $H_0$: G = F, the value of $D_n$ must be calculated from the original sample. A simple formula works for this:

$$D_n = \max_{1 \le k \le n} \max\left\{\frac{k}{n} - F(x_k),\; F(x_k) - \frac{k-1}{n}\right\}.$$

Here $x_k$ are the elements of the variation series constructed from the original sample. The resulting value of $D_n$ must then be compared with critical values taken from tables or calculated using the asymptotic formula.
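The limiting distribution in Kolmogorov's theorem is the series $K(z) = \sum_{k=-\infty}^{\infty} (-1)^k e^{-2k^2z^2}$, and it is just as easy to evaluate outside Maple. The sketch below sums the series in plain Python and turns it into an approximate p-value for large n; the function names are hypothetical, and the stopping tolerance is an arbitrary choice.

```python
import math

def kolmogorov_cdf(z):
    """K(z) = 1 + 2 * sum_{k>=1} (-1)^k * exp(-2 k^2 z^2) for z > 0."""
    if z <= 0.0:
        return 0.0
    total, k = 1.0, 1
    while True:
        term = math.exp(-2.0 * k * k * z * z)
        total += -2.0 * term if k % 2 == 1 else 2.0 * term
        if term < 1e-16:          # remaining terms are negligible
            break
        k += 1
    return min(1.0, max(0.0, total))

def asymptotic_p_value(d_n, n):
    """Approximate P(D_n >= observed) for large n, using the limit law."""
    return 1.0 - kolmogorov_cdf(math.sqrt(n) * d_n)

for z in (1.22, 1.36, 1.63):
    print(z, round(kolmogorov_cdf(z), 4))
```

Evaluating K at 1.22, 1.36 and 1.63 gives values close to 0.90, 0.95 and 0.99, which is why these numbers commonly appear as critical values at the 10%, 5% and 1% levels.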
Hypothesis $H_0$ must be rejected (at the selected significance level) if the experimentally obtained value of $D_n$ exceeds the critical value corresponding to that significance level.

Another popular goodness-of-fit criterion is obtained by measuring the distance between $F_n$ and $F$ in the integral metric. It is based on the so-called omega-square statistic:

$$\omega_n^2 = \int_{-\infty}^{\infty} \bigl(F_n(x) - F(x)\bigr)^2 \, dF(x).$$

To calculate it from real data, one can use the formula

$$n\,\omega_n^2 = \frac{1}{12n} + \sum_{k=1}^{n} \left(F(x_k) - \frac{2k-1}{2n}\right)^2.$$

If the hypothesis $H_0$ is true and the function G is continuous, the distribution of the omega-square statistic, like that of $D_n$, depends only on n and does not depend on G. As with $D_n$, for …

When analyzing variation series, it is of great importance how well the empirical distribution of a characteristic corresponds to the normal one. To determine this, the frequencies of the actual distribution must be compared with the theoretical frequencies characteristic of a normal distribution. This means that, from the actual data, one must calculate the theoretical frequencies of the normal distribution curve, which are a function of the normalized deviations. In other words, the empirical distribution curve needs to be aligned with the normal distribution curve. An objective measure of the correspondence between theoretical and empirical frequencies can be obtained using special statistical indicators called goodness-of-fit criteria.

A goodness-of-fit criterion is a criterion that makes it possible to determine whether the discrepancy between the empirical and theoretical distributions is random or significant, i.e. whether or not the observational data agree with the statistical hypothesis put forward. The distribution that the population has under the hypothesis put forward is called theoretical.

A criterion (rule) is needed that allows one to judge whether the discrepancy between the empirical and theoretical distributions is random or significant. If the discrepancy turns out to be random, the observational data (sample) are considered consistent with the hypothesis about the distribution law of the general population, and the hypothesis is accepted; if the discrepancy turns out to be significant, the observational data do not agree with the hypothesis and it is rejected.

Empirical and theoretical frequencies typically differ either because the discrepancy is random, owing to the limited number of observations, or because it is non-random, owing to the hypothesis being wrong. Thus, goodness-of-fit criteria make it possible to reject or confirm the hypothesis put forward, when fitting the series, about the nature of the distribution in the empirical series.

Empirical frequencies are obtained from observation. Theoretical frequencies are calculated using formulas. For the normal distribution law they can be found as follows:

$$f_T = \frac{N h}{\sigma}\,\varphi(t), \qquad t = \frac{x_i - \bar{x}}{\sigma},$$

where $N$ is the sum of the empirical frequencies, $h$ is the interval width, $\sigma$ is the standard deviation, $t$ is the normalized deviation, and $\varphi(t)$ is the probability density of the standard normal distribution.

There are several goodness-of-fit tests, the most common being the chi-square (Pearson) test, the Kolmogorov test, and the Romanovsky test.

Pearson's goodness-of-fit test χ² is one of the main ones. It can be represented as the sum of the ratios of the squared differences between the theoretical ($f_T$) and empirical ($f$) frequencies to the theoretical frequencies:

$$\chi^2 = \sum \frac{(f - f_T)^2}{f_T}.$$

For the χ² distribution, tables have been compiled that give the critical value of the χ² goodness-of-fit criterion for the selected significance level α and number of degrees of freedom df (or ν).

The significance level α is the probability that a correct hypothesis will be rejected:

- α = 0.10, then P = 0.90 (in 10 cases out of 100);
- α = 0.05, then P = 0.95 (in 5 cases out of 100);
- α = 0.01, then P = 0.99 (in 1 case out of 100).

The number of degrees of freedom df is defined as the number of groups in the distribution series minus the number of constraints: df = k − z. The number of constraints is the number of indicators of the empirical series used in calculating the theoretical frequencies, i.e. indicators linking the empirical and theoretical frequencies. For example, when fitting a bell curve (normal distribution) there are three constraints, so the number of degrees of freedom is df = k − 3.

To assess significance, the calculated value $\chi^2_{calc}$ is compared with the tabulated value $\chi^2_{tab}$. With complete coincidence of the theoretical and empirical distributions χ² = 0; otherwise χ² > 0. If $\chi^2_{calc} > \chi^2_{tab}$, then for the given significance level and number of degrees of freedom we reject the hypothesis that the discrepancies are insignificant (random). If $\chi^2_{calc} < \chi^2_{tab}$, we accept the hypothesis and with probability P = 1 − α can claim that the discrepancy between the theoretical and empirical frequencies is random. There is therefore reason to assert that the empirical distribution obeys the normal distribution.
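The fitting procedure described above can be sketched in a few lines. The sketch assumes the common textbook formula for theoretical frequencies of a grouped series, $f_T = (N h / \sigma)\,\varphi(t)$, with the mean and standard deviation estimated from the grouped data (population form, dividing by N); these choices, the function names, and the small data set are assumptions made for illustration only.

```python
import math

def normal_density(t):
    """Standard normal density phi(t)."""
    return math.exp(-t * t / 2.0) / math.sqrt(2.0 * math.pi)

def theoretical_frequencies(midpoints, freqs, width):
    """f_T = N * h / sigma * phi(t) for each interval midpoint."""
    n = sum(freqs)
    mean = sum(x * f for x, f in zip(midpoints, freqs)) / n
    var = sum(f * (x - mean) ** 2 for x, f in zip(midpoints, freqs)) / n
    sd = math.sqrt(var)
    return [n * width / sd * normal_density((x - mean) / sd) for x in midpoints]

def chi2_from_frequencies(freqs, theo):
    """Sum of (f - f_T)^2 / f_T over the groups."""
    return sum((f - ft) ** 2 / ft for f, ft in zip(freqs, theo))

# Hypothetical grouped series: interval midpoints and empirical frequencies.
midpoints = [1.0, 2.0, 3.0, 4.0, 5.0]
freqs = [1, 4, 6, 4, 1]
theo = theoretical_frequencies(midpoints, freqs, width=1.0)
chi2_calc = chi2_from_frequencies(freqs, theo)
df = len(freqs) - 3          # three constraints when fitting a normal curve
print(round(chi2_calc, 4), df)
```

The example is deliberately tiny and does not satisfy the usual requirement that each group contain at least 5 observations; with real data, small groups would first be combined.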

Pearson's goodness-of-fit test is used if the population size is large enough (N > 50) and the frequency of each group is at least 5.

The Kolmogorov goodness-of-fit test is based on determining the maximum discrepancy between the cumulative empirical and theoretical frequencies:

$$\lambda = \frac{D}{\sqrt{N}} \qquad \text{or} \qquad \lambda = d\sqrt{N},$$

where D and d are, respectively, the maximum difference between the cumulative frequencies and between the cumulative relative frequencies of the empirical and theoretical distributions, and N is the number of units in the population.

Let us consider how the Kolmogorov criterion (λ) is applied when testing the hypothesis that the general population is normally distributed. Fitting the actual distribution to the bell curve consists of several steps:

For column IV of the table:

In MS Excel, the normalized deviation (t) is calculated using the STANDARDIZE function. Select a range of free cells equal to the number of variants (spreadsheet rows). Without removing the selection, call the STANDARDIZE function. In the dialog box that appears, specify the cells containing, respectively, the observed values ($X_i$), the mean ($\bar{X}$), and the standard deviation σ. The operation must be completed by pressing Ctrl+Shift+Enter simultaneously.

For column V of the table:

The probability density of the normal distribution φ(t) is found from the table of values of the local Laplace function for the corresponding value of the normalized deviation (t).

For column VI of the table:

To test the hypothesis that an empirical distribution corresponds to a theoretical distribution law, special statistical indicators are used: goodness-of-fit criteria (or compliance criteria). These include the criteria of Pearson, Kolmogorov, Romanovsky, Yastremsky, and others. Most goodness-of-fit criteria are based on the deviations of empirical frequencies from theoretical ones. Obviously, the smaller these deviations, the better the theoretical distribution corresponds to (describes) the empirical one.

Goodness-of-fit criteria are criteria for testing hypotheses about the correspondence of the empirical distribution to the theoretical probability distribution. They are divided into two classes: general and special. General goodness-of-fit tests apply to the most general formulation of a hypothesis, namely the hypothesis that the observed results agree with any a priori assumed probability distribution. Special goodness-of-fit tests involve special null hypotheses that state agreement with a particular form of probability distribution.

Goodness-of-fit criteria, based on the established distribution law, make it possible to determine when discrepancies between theoretical and empirical frequencies should be considered insignificant (random) and when significant (non-random). It follows that they make it possible to reject or confirm the hypothesis put forward, when fitting the series, about the nature of the distribution in the empirical series, and to answer whether a model expressed by some theoretical distribution law can be accepted for a given empirical distribution.

Pearson's goodness-of-fit test χ² (chi-square) is one of the main goodness-of-fit criteria. It was proposed by the English mathematician Karl Pearson (1857-1936) to assess the randomness (significance) of discrepancies between the frequencies of the empirical and theoretical distributions:

$$\chi^2 = \sum_{i=1}^{k} \frac{(f_i - f_i')^2}{f_i'},$$

where $f_i$ and $f_i'$ are the empirical and theoretical frequencies and $k$ is the number of groups.

The scheme for applying the χ² criterion to assess the agreement of theoretical and empirical distributions is as follows:

1. The calculated measure of discrepancy $\chi^2_{calc}$ is determined.
2. The number of degrees of freedom is determined.
3. From the number of degrees of freedom ν, the tabulated value $\chi^2_{tab}$ is found using a special table.
4. If $\chi^2_{calc} > \chi^2_{tab}$, then for the given significance level α and number of degrees of freedom ν the hypothesis that the discrepancies are insignificant (random) is rejected. Otherwise, the hypothesis can be regarded as not contradicting the experimental data, and with probability (1 − α) it can be asserted that the discrepancies between theoretical and empirical frequencies are random.

The significance level is the probability of erroneously rejecting the hypothesis put forward, i.e. the probability that a correct hypothesis will be rejected. In statistical studies, depending on the importance and responsibility of the problems being solved, the following three significance levels are used:

1) α = 0.1, then P = 0.9;
2) α = 0.05, then P = 0.95;
3) α = 0.01, then P = 0.99.

When using the χ² goodness-of-fit criterion, the following conditions must be met:

1. The size of the population under study must be large enough (N ≥ 50), and the frequency (size) of each group must be at least 5. If this condition is violated, small frequencies (less than 5) must first be combined.
2. The empirical distribution must consist of data obtained by random sampling, i.e. the observations must be independent.

The disadvantage of the Pearson goodness-of-fit criterion is the loss of part of the original information caused by the need to group the observations into intervals and to combine intervals with a small number of observations. For this reason, it is recommended to supplement the χ² check of the distribution with other criteria. This is especially necessary when the sample size is relatively small (n ≈ 100).

In statistics, the Kolmogorov goodness-of-fit test (also known as the Kolmogorov-Smirnov goodness-of-fit test) is used to determine whether two empirical distributions obey the same law, or whether a resulting distribution obeys an assumed model. The Kolmogorov criterion is based on determining the maximum discrepancy between the cumulative frequencies or cumulative relative frequencies of the empirical and theoretical distributions. It is calculated using the following formulas:

$$\lambda = \frac{D}{\sqrt{N}} \qquad \text{or} \qquad \lambda = d\sqrt{N},$$

where D and d are, respectively, the maximum difference between the cumulative frequencies ($f - f'$) and between the cumulative relative frequencies ($p - p'$) of the empirical and theoretical distribution series, and N is the number of units in the population.

Having calculated λ, a special table is used to determine the probability with which it can be stated that the deviations of the empirical frequencies from the theoretical ones are random. If λ takes values up to 0.3, this means complete coincidence of the frequencies. With a large number of observations, the Kolmogorov test is able to detect any deviation from the hypothesis: any difference between the sample distribution and the theoretical one will be detected if there are sufficiently many observations. This property is of little practical significance, since in most cases it is difficult to count on obtaining a large number of observations under constant conditions, the theoretical idea of the distribution law that the sample should follow is always approximate, and the accuracy of statistical tests should not exceed the accuracy of the chosen model.

Romanovsky's goodness-of-fit test is based on the Pearson criterion, i.e. on the already found value of χ² and the number of degrees of freedom:

$$\frac{\left|\chi^2 - \nu\right|}{\sqrt{2\nu}},$$

where ν is the number of degrees of freedom. The Romanovsky criterion is convenient when tables for χ² are not available. If this ratio is less than 3, the discrepancies between the distributions are random; if it is greater than 3, they are not random and the theoretical distribution cannot serve as a model for the empirical distribution being studied.

B. S. Yastremsky used in his goodness-of-fit criterion not the number of degrees of freedom but the number of groups (k), a special value q depending on the number of groups, and the chi-square value. Yastremsky's goodness-of-fit test has the same meaning as the Romanovsky criterion and is expressed by the formula

$$L = \frac{\left|\chi^2 - k\right|}{\sqrt{2k + 4q}},$$

where χ² is Pearson's goodness-of-fit criterion, k is the number of groups, and q is a coefficient equal to 0.6 when the number of groups is less than 20. If $L_{fact} > 3$, the discrepancies between the theoretical and empirical distributions are not random, i.e. the empirical distribution does not meet the requirements of a normal distribution. If $L_{fact} < 3$, the discrepancies between the empirical and theoretical distributions are considered random.
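Both table-free criteria reduce to a single ratio compared with 3. The sketch below assumes the commonly cited forms $|\chi^2 - \nu| / \sqrt{2\nu}$ for Romanovsky and $|\chi^2 - k| / \sqrt{2k + 4q}$ for Yastremsky, with q = 0.6 for fewer than 20 groups; the function names and the input numbers are hypothetical.

```python
import math

def romanovsky_ratio(chi2, dof):
    """|chi2 - nu| / sqrt(2 * nu); values below 3 suggest random discrepancies."""
    return abs(chi2 - dof) / math.sqrt(2.0 * dof)

def yastremsky_ratio(chi2, num_groups, q=0.6):
    """|chi2 - k| / sqrt(2k + 4q); q = 0.6 is quoted for fewer than 20 groups."""
    return abs(chi2 - num_groups) / math.sqrt(2.0 * num_groups + 4.0 * q)

# Hypothetical case: chi-square of 10 from 7 groups fitted to a normal curve.
chi2_value = 10.0
num_groups = 7
dof = num_groups - 3
r = romanovsky_ratio(chi2_value, dof)
l_fact = yastremsky_ratio(chi2_value, num_groups)
print(round(r, 3), round(l_fact, 3), r < 3 and l_fact < 3)
```

In this made-up case both ratios fall below 3, so neither criterion would treat the discrepancies as non-random.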

The significance level α is the probability of erroneously rejecting the proposed hypothesis, i.e. the probability that a correct hypothesis will be rejected. P is the probability of accepting a correct hypothesis. In statistics, three significance levels are most often used: α = 0.10, α = 0.05, and α = 0.01.

Using the distribution table of the Kolmogorov statistic, the probability P(λ) is determined, which can vary from 0 to 1. When P(λ) = 1 the frequencies coincide completely; when P(λ) = 0 they diverge completely. If the probability P(λ) for the found value of λ is sufficiently high, we can assume that the discrepancies between the theoretical and empirical distributions are insignificant, that is, random.
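For grouped data, λ and the tabulated probability P(λ) can be approximated as in the sketch below. It assumes λ = D/√N with D the largest gap between cumulative frequencies, and takes P(λ) to be the upper tail of Kolmogorov's limiting distribution, $2\sum_{k \ge 1} (-1)^{k-1} e^{-2k^2\lambda^2}$, which is what such tables usually list; the function names and frequencies are hypothetical.

```python
import math

def kolmogorov_lambda(empirical_freqs, theoretical_freqs):
    """lambda = D / sqrt(N), D = max gap between cumulative frequencies."""
    n = sum(empirical_freqs)
    cum_emp = cum_theo = 0.0
    d_max = 0.0
    for f_emp, f_theo in zip(empirical_freqs, theoretical_freqs):
        cum_emp += f_emp
        cum_theo += f_theo
        d_max = max(d_max, abs(cum_emp - cum_theo))
    return d_max / math.sqrt(n)

def p_lambda(lam):
    """Upper tail of the Kolmogorov limit law, clamped to [0, 1]."""
    if lam <= 0.0:
        return 1.0
    total, k = 0.0, 1
    while True:
        term = math.exp(-2.0 * k * k * lam * lam)
        total += term if k % 2 == 1 else -term
        if term < 1e-16:          # remaining terms are negligible
            break
        k += 1
    return min(1.0, max(0.0, 2.0 * total))

# Hypothetical empirical and theoretical frequencies for five groups.
empirical = [5, 10, 20, 10, 5]
theoretical = [4, 12, 18, 12, 4]
lam = kolmogorov_lambda(empirical, theoretical)
print(round(lam, 4), round(p_lambda(lam), 4))
```

Here λ is well below 0.3 and P(λ) is practically 1, matching the statement that such small values of λ correspond to coinciding frequencies.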

The main condition for using the Kolmogorov criterion is a sufficiently large number of observations.

Kolmogorov goodness-of-fit test