Statement of the problem

Root separation

Root refinement

1.2.3.2. Iteration method

1.2.3.4. Chord method

Statement of the problem

Algebraic equations

( 1.2.1-1)

transcendental equation

(1.2.1-2)

Iterative refinement of roots.

At the stage of root separation, the problem of finding the narrowest possible segments is solved, which contain one and only one root of the equation.

The root refinement stage aims to calculate the approximate value of the root with a given accuracy. In this case, iterative methods are used for calculating successive approximations to the root: x 0, x 1, ..., x n, ..., in which each subsequent approximation x n+1 is calculated based on the previous x n. Each step is called an iteration. If the sequence x 0 , x 1 , ..., x n , …as n ® ¥ has a limit equal to the value of the root , then the iterative process is said to converge.

There are various ways to separate and refine roots, which we will look at below.

Root separation

The root of the equation f(x)=0 is considered separated (localized) on the segment if this equation has no other roots on this segment. To separate the roots of the equation, it is necessary to divide the range of permissible values of the function f(x) into fairly narrow segments, each of which contains only one root. There are graphic And analytical methods for separating roots.

Root refinement

The task of refining the root of the equation with an accuracy separated on the segment consists of finding such an approximate value of the root for which the inequality is true . If the equation has not one, but several roots, then the refinement stage is carried out for each separated root.

Half division method

Let the root of the equation f(x)=0 be separated on the segment, that is, there is a single root on this segment, and the function on this segment is continuous.

The half division method allows us to obtain a sequence of segments nested within each other , , …,,…, , such that f(a i).f(b i)< 0 , where i=1,2,…,n, and the length of each subsequent segment is half the length of the previous one:

Consecutive narrowing of the segment around unknown value root ensures execution at some step n inequalities |b n - a n |< e. Поскольку при этом для любого хÎ будет выполняться неравенство | - х| <, то с точностью любое

Can be taken as an approximate value of the root, for example its midpoint

In the half division method, from iteration to iteration, the length of the initial segment is sequentially reduced by half (Fig. 1.2.3-1). Therefore, at the nth step the following estimate of the error of the result is valid:

![]() ( 1.2.3-1)

( 1.2.3-1)

where is the exact value of the root, x n О is the approximate value of the root at the nth step.

By comparing the resulting error estimate with the given accuracy, we can estimate the required number of steps:

![]() (1.2.3-2)

(1.2.3-2)

From the formula it is clear that a decrease in the value e(increased accuracy) leads to a significant increase in the amount of calculations, therefore, in practice, the method of halves is used to find the root relatively roughly, and its further refinement is carried out using other, more effective methods.

|

Rice. 1.2.3-2. Scheme of the halves method algorithm

The algorithm diagram of the halves division method is shown in Fig. 1.2.3-2. In the above algorithm, it is assumed that the left side of the equation f(x) is written in the form of a software module.

Example 1.2.3-1. Refine the root of the equation x 3 +x-1=0 with an accuracy of =0.1, which is localized on the segment .

It is convenient to present the results using Table 1.2.3-3.

Table 1.2.3-3

| k | a | b | f(a) | f(b) | (a+b)/2 | f((a+b)/2) | a k | b k |

| -1 | 0.5 | -0.375 | 0.5 | |||||

| 0.5 | -0.375 | 0.75 | 0.172 | 0.5 | 0.75 | |||

| 0.5 | 0.75 | -0.375 | 0.172 | 0.625 | -0.131 | 0.625 | 0.75 | |

| 0.625 | 0.75 | -0.131 | 0.172 | 0.688 | 0.0136 | 0.625 | 0.688 |

After the fourth iteration, the length of the segment |b 4 -a 4 | = |0.688-0.625| = 0.063 became less than the value e therefore, the value of the middle of this segment can be taken as an approximate value of the root: x = (a 4 +b 4)/2 = 0.656 .

The value of the function f(x) at point x = 0.656 is equal to f(0.656) = -0.062 .

Iteration method

The iteration method involves replacing the equation f(x)=0 with an equivalent equation x=j(x). If the root of the equation is separated on the segment , then based on the initial approximation x 0 О, one can obtain a sequence of approximations to the root

x 1 = j(x 0), x 2 = j(x 1), …, , ( 1.2.3-3)

where the function j(x) is called an iterating function.

The convergence condition for the simple iteration method is determined by the following theorem.

Let the root X* equations x=j(x) separated by a segmentand a sequence of approximations was constructed according to the rule x n =j(x n -1) .

Then, if all members of the sequence x n =j(x n -1) О and there is such a thing q(0

Thus, if the convergence condition of the iteration method is satisfied, then the sequence x 0 , x 1 , x 2 , ..., x n , ... obtained using the formula x n +1 = j(x n ), converges to the exact value of the root:

The condition j(x)О for xО means that all approximations x 1 , x 2 , …, x n , … obtained by the iterative formula must belong to the segment on which the root is separated.

To estimate the error of the iteration method, the following condition holds true:

Per number q can take the largest value |j"(x)| , and the iteration process should continue until the inequality is satisfied

![]() (1.2.3-5)

(1.2.3-5)

In practice, a simplified error estimation formula is often used. For example, if 0 |x n -1 - x n | £. Using the iterative formula x n +1 = j(x n) allows you to obtain the value of the root of the equation f(x)=0 with any degree of accuracy .

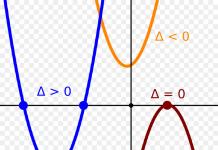

Geometric illustration of the iteration method. Let us construct graphs of the functions y=x and y=j(x) on the X0Y plane ).

The root of the equation x=j(x) is the abscissa of the intersection point of the graphs of the function y = j(x )

and straight line y=x. Let's take some initial approximation x 0 О . On the curve y = j(x) it corresponds to point A 0 = j(x 0). To find the next approximation, draw a straight horizontal line through point A 0 to the intersection with the straight line y = x (point B 1) and lower the perpendicular to the intersection with the curve (point A 1), that is, x 1 = j (x 0) .

Continuing the construction in a similar way, we have a broken line A 0, B 1, A 1, B 2, A 2 ..., for which the common abscissas of the points represent a successive approximation x 1, x 2, ..., x n (“staircase”) to the root X*. From Fig. 1.2.3-3a it is clear that the process converges to the root of the equation. Let us now consider another type of curve y = j(x) (Fig. 1.2.6b). In this case, the broken line A 0, B 1, A 1, B 2, A 2 ... looks like a “spiral”. However, in this case, convergence is observed. It is easy to see that in the first case the condition 0 is satisfied for the derivative< j’(x)< 1, а во втором случае производная j’(x)<0иj’(x)>-1. Thus, it is obvious that if |j’(x)|<1, то процесс итераций сходится к корню. Now consider the cases when |j’(x) |> 1. In Fig. 1.2.3-4a shows the case when j’(x)>1, and in Fig. 1.2.3-4b – when j’(x)< -1. В обоих случаях процесс итерации расходится, то есть, полученное на очередной итерации значение х все дальше удаляется от истинного значения корня. Ways to improve the convergence of the iteration process. Let's consider two options for representing the function j(x) when moving from the equation f(x) to x=j(x). 1.

Let the function j(x) be differentiable and monotonic in the vicinity of the root and there be a number k £ |j‘(x)|, where k ³ 1 (i.e. the process diverges). Let us replace the equation x=j(x) with the equivalent equation x=Y(x )

, Where Y(x) = 1/j(x)(let's move on to the inverse function). Then 2.

Let's represent the function j(x) as j(x) = x - lf(x), where l is the coefficient ,

not equal zero. In order for the process to converge, it is necessary that and the process will be convergent, the recurrent formula has the form If f¢(x)< 0, то в рекуррентной формуле f(x) следует умножить на -1

. The parameter λ can also be determined by the rule: If, then, and if, then, where The algorithm diagram of the iteration method is shown in Fig. 1.2.3-5. The original equation f(x)=0 has been transformed to a form convenient for iterations: The left side of the original equation f(x) and the iterating function fi(x) in the algorithm are designed as separate software modules. Rice. 1.2.3-5. Algorithm diagram of the iteration method Example 1.2.3-2. Refine the root of the equation 5x – 8∙ln(x) – 8 =0 with an accuracy of 0.1, which is localized on the segment . Let us reduce the equation to a form convenient for iterations: Consequently, we take the value x 3 =3.6892 as the approximate value of the root of the equation, which ensures the required accuracy of calculations. At this point f(x 3)=0.0027. Chord method Geometric interpretation of the chord method is as follows Let's draw a line segment through points A and B. The next approximation x 1 is the abscissa of the point of intersection of the chord with the 0x axis. Let's construct the equation of a straight line segment: Let's put y = 0 and find the value x = x 1 (another approximation): Let's repeat the calculation process to obtain the next approximation to the root - x 2 :

In our case (Fig. 1.2.11) the calculation formula of the chord method will have the form This formula is valid when point b is taken as a fixed point, and point a acts as an initial approximation. Let's consider another case (Fig. 1.2.3-9), when . The straight line equation for this case has the form Next approximation x 1 at y = 0 Then the recurrent formula of the chord method for this case has the form It should be noted that the fixed point in the chord method is chosen to be the end of the segment for which the condition f (x)∙ f¢¢ (x)>0 is satisfied. Thus, if point a is taken as a fixed point ,

then x 0 = b acts as the initial approximation, and vice versa. The sufficient conditions that ensure the calculation of the root of the equation f(x) = 0 using the chord formula will be the same as for the tangent method (Newton's method), only instead of the initial approximation, a fixed point is chosen. The chord method is a modification of Newton's method. The difference is that the next approximation in Newton's method is the point of intersection of the tangent with the 0X axis, and in the chord method - the point of intersection of the chord with the 0X axis - the approximations converge to the root from different sides. The error estimate for the chord method is given by the expression Condition for ending the iteration process using the chord method In case M 1<2m 1 , то для оценки погрешности метода может быть использована формула | x n - x n -1 | £ e. Example 1.2.3-4. Clarify the root of the equation e x – 3x = 0, separated on the segment with an accuracy of 10 -4. Let's check the convergence condition: Consequently, a=0 should be chosen as the fixed point, and x 0 =1 should be taken as the initial approximation, since f(0)=1>0 and f(0)*f"(0)>0. Calculation results obtained using the formula Table 1.2.3-4 Rice. 1.2.3-10. Scheme of the chord method algorithm The nonlinear equation is 1)

algebraic or transcendental equation 3)

trigonometric equation 4)

transcendental equation Topic 1.2. Methods for solving nonlinear equations Statement of the problem Root separation 1.2.2.1. Graphic separation of roots 1.2.2.2. Analytical root separation Root refinement 1.2.3.1. Half division method 1.2.3.2. Iteration method 1.2.3.3. Newton's method (tangent method) 1.2.3.4. Chord method 1.2.3.5. Comparison of methods for solving nonlinear equations 1.2.4. Test tasks on the topic “Methods for solving nonlinear equations” Statement of the problem One of the most important and most common tasks mathematical analysis is the task of determining the roots of an equation with one unknown, which in general view can be represented as f(x) = 0. Depending on the type of function f(x), algebraic and transcendental equations are distinguished. Algebraic equations are called equations in which the value of the function f(x) is a polynomial nth degree: f(x) = P(x) = a n x n + a 2 x 2 + …+ a 1 x + a 0 = 0. ( 1.2.1-1) Any non-algebraic equation is called transcendental equation. The function f(x) in such equations is at least one of the following functions: exponential, logarithmic, trigonometric, or inverse trigonometric. The solution to the equation f(x)=0 is the set of roots, that is, those values of the independent variable at which the equation becomes an identity. However, exact values of the roots can be found analytically only for certain types of equations. In particular, formulas expressing the solution of an algebraic equation can be obtained only for equations of no higher than the fourth degree. There are even fewer possibilities for obtaining an exact solution of transcendental equations. It should be noted that the problem of finding the exact values of the roots is not always correct. Thus, if the coefficients of the equation are approximate numbers, the accuracy of the calculated values of the roots certainly cannot exceed the accuracy of the original data. These circumstances force us to consider the possibility of finding the roots of the equation with limited accuracy (approximate roots). The problem of finding the root of an equation with a given accuracy (>0) is considered solved if an approximate value is calculated that differs from the exact value of the root by no more than the value e (1.2.1-2) The process of finding the approximate root of an equation consists of two stages: 1) separation of roots (localization of roots); Mathematics as a science arose in connection with the need to solve practical problems: field measurements, navigation, etc. Because of this, mathematics was numerical mathematics and its goal was to obtain a solution in the form of a number. The numerical solution of applied problems has always interested mathematicians. The largest representatives of the past combined in their research the study of natural phenomena, obtaining their mathematical description, i.e. his mathematical model and his research. The analysis of complicated models required the creation of special, usually numerical, methods for solving problems. The names of some of these methods indicate that the greatest scientists of their time were involved in their development. These are the methods of Newton, Euler, Lobachevsky, Gauss, Chebyshev, Hermite. The present time is characterized by a sharp expansion of applications of mathematics, largely related to the creation and development of means computer technology. As a result of the advent of computers, in less than 40 years, the speed of operations has increased from 0.1 operations per second with manual calculation to 10 operations per second on modern computers. The widespread opinion about the omnipotence of modern computers gives rise to the impression that mathematicians have gotten rid of all the hassle associated with the numerical solution of problems, and the development of new methods for solving them is no longer so important. In reality, the situation is different, since the needs of evolution, as a rule, pose tasks for science that are on the verge of its capabilities. The expansion of the possibilities of applying mathematics led to the mathematization of various branches of science: chemistry, economics, biology, geology, geography, psychology, medicine, technology, etc. Two circumstances can be identified that initially determined the desire for mathematization of sciences: firstly, only the use of mathematical methods makes it possible to give a quantitative character to the study of one or another phenomenon of the material world; secondly, and this is the main thing, only mathematical method thinking makes an object. This research method is called a computational experiment, a completely objective study. Recently, another factor has emerged that has a strong impact on the processes of mathematization of knowledge. This rapid development computer facilities. The use of computers for solving scientific, engineering and generally applied problems is entirely based on their mathematization. Modern technology for studying complex problems is based on the construction and analysis, usually with the help of a computer, of mathematical models of what is being studied. Typically, a computational experiment, as we have already seen, consists of a number of stages: statement of the problem, construction of a mathematical model (mathematical formulation of the problem), development of a numerical method, development of an algorithm for implementing the numerical method, development of a program, debugging of the program, carrying out calculations, analyzing the results. So, the use of a computer to solve any scientific or engineering problem is inevitably associated with the transition from a real process or phenomenon to its mathematical model. Thus, the use of models in scientific research and engineering practice there is the art of mathematical modeling. A model is usually called a imaginable or materially realizable system that reproduces the main, most essential features of a given phenomenon. The main requirements for a mathematical model are adequacy to the phenomenon under consideration, i.e. it should sufficiently reflect characteristic features phenomena. At the same time, it should be relatively simple and accessible to research. The mathematical model reflects the relationship between the conditions for the occurrence of the phenomenon being studied and its results in certain mathematical structures. Most often, the following mathematical concepts are used as such constructions: function, functional, operator, numerical equation, ordinary differential equation, partial differential equation. Mathematical models can be classified according to different criteria: static and dynamic, concentrated and distributed; deterministic and probabilistic. Consider the problem of finding the roots nonlinear equation The roots of equation (1) are those values of x that, when substituted, turn it into an identity. Only for the simplest equations it is possible to find a solution in the form of formulas, i.e. analytical form. More often it is necessary to solve equations using approximate methods, the most widespread among which, due to the advent of computers, are numerical methods. The algorithm for finding roots using approximate methods can be divided into two stages. At the first stage, the location of the roots is studied and their separation is carried out. The region is found in which the root of the equation or the initial approximation to the root x 0 exists. The simplest way The solution to this problem is to study the graph of the function f(x). In the general case, to solve it it is necessary to use all the means of mathematical analysis. The existence of at least one root of equation (1) on the found segment follows from Bolzano’s condition: f(a)*f(b)<0 (2) This implies that the function f(x) is continuous on this interval. However, this condition does not answer the question about the number of roots of the equation on a given interval. If the requirement of continuity of a function is supplemented with the requirement of its monotonicity, and this follows from the constancy of sign of the first derivative, then we can assert the existence of a single root on a given segment. When localizing roots, it is also important to know the basic properties of this type of equation. For example, let us recall some properties of algebraic equations: where are the real coefficients. At the second stage of solving equation (1), using the obtained initial approximation, an iterative process is constructed that makes it possible to refine the value of the root with a certain predetermined accuracy. The iterative process consists of sequential refinement of the initial approximation. Each such step is called an iteration. As a result of the iteration process, a sequence of approximate values of the roots of the equation is found. If this sequence approaches the true value of the root x as n grows, then the iterative process converges. An iterative process is said to converge to at least order m if the following condition is met: where C>0 is some constant. If m=1, then we speak of first-order convergence; m=2 - about quadratic, m=3 - about cubic convergence. Iterative cycles end if, for a given permissible error, the criteria for absolute or relative deviations are met: or a small discrepancy: This work is devoted to the study of an algorithm for solving nonlinear equations using Newton's method. There are many different methods for solving nonlinear equations, some of them are presented below: x i+1 =x i -F(x i) F"(x i). Condition for the convergence of the tangent method F(x 0) F""(x)>0, etc. 3). Dichotomy method. The solution technique comes down to gradually dividing the initial uncertainty interval in half according to the formula C k = a k + b k /2. In order to select the required one from the two resulting segments, it is necessary to find the value of the function at the ends of the resulting segments and consider the one on which the function will change its sign, that is, the condition f (a k) * f (in k) must be satisfied<0. The process of dividing the segment is carried out until the length of the current uncertainty interval is less than the specified accuracy, that is, in k - a k< E. Тогда в качестве приближенного решения уравнения будет точка, соответствующая середине интервала неопределённости. 4). Chord method. The idea of the method is that a chord is constructed on the segment, subtending the ends of the arc of the graph of the function y=f(x), and the point c, the intersection of the chord with the x-axis, is considered an approximate value of the root c = a - (f(a)Х (a-b)) / (f(a) - f(b)), c = b - (f(b)Х (a-b)) / (f(a) - f(b)). The next approximation is sought on the interval or depending on the signs of the function values at points a, b, c x* O, if f(c)H f(a) > 0; x* O if f(c)Х f(b)< 0 . If f"(x) does not change sign to , then denoting c=x 1 and considering a or b as the initial approximation, we obtain iterative formulas of the chord method with a fixed right or left point. x 0 =a, x i+1 = x i - f(x i)(b-x i) / (f(b)-f(x i), with f "(x)Х f "(x) > 0; x 0 =b, x i+1 = x i - f(x i)(x i -a) / (f(x i)-f(a), with f "(x)Х f "(x)< 0 . Convergence of the chord method is linear Algebraic and transcendental equations. Root localization methods. The most general form of a nonlinear equation: f(x)=0 (2.1) where is the function f(x) defined and continuous on a finite or infinite interval [a, b]. Definition 2.1.

Any number that inverts a function f(x) to zero is called the root of equation (2.1). Definition 2.2.

A number is called the kth root of multiplicity if, together with the function f(x) its derivatives up to (k-1)th order inclusive are equal to zero: Definition 2.3.

A single root is called simple. Nonlinear equations with one variable are divided into algebraic and transcendental. Definition 2.4

.

Equation (2.1) is called algebraic if the function F(x) is algebraic. By means of algebraic transformations, from any algebraic equation one can obtain an equation in canonical form: where are the real coefficients of the equation, x is the unknown. From algebra it is known that every algebraic equation has at least one real or two complex conjugate roots. Definition 2.5.

Equation (2.1) is called transcendental if the function F(x) is not algebraic. Solving equation (2.1) means: Equations encountered in practice often cannot be solved analytical methods. Numerical methods are used to solve such equations. The algorithm for finding the root of an equation using a numerical method consists of two stages: Root localization methods. Theoretical basis The root separation algorithm is based on Cauchy's theorem on intermediate values of a continuous function. Theorem 2.1.

If the function y = f(x) is continuous on the segment [a,b] and f(a)=A, f(b)=B, then for any point C lying between A and B, there is a point that. Consequence.

If the function y = f(x) is continuous on the segment [a,b] and takes on values of different signs at its ends, then there is at least one root of the equation f(x) = 0 on this segment. Let the domain of definition and continuity of the function be a finite segment [a,b]. Divide the segment into n parts: , By sequentially calculating the values of the function at points, we find such segments for which the condition is satisfied: those. , or, . These segments contain at least one root. Theorem 2.2.

If the function y = f(x) is continuous on the interval [a;b], f(a)f(b)<0 и f`(х) на интервале (а;b) сохраняет знак, то внутри отрезка [а;b] существует единственный корень уравнения f(х) = 0.

To separate the roots, you can also use the graph of the function at=f (X). The roots of equation (2.1) are those values X, at which the graph of the function y=f(x) intersects the abscissa axis. Plotting a graph of a function, even with low accuracy, usually gives an idea of the location of the roots of equation (2.1). If plotting the function y=f(x) is difficult, then the original equation (2.1) should be transformed to the form ts1(x)= q2(x) so that the graphs of the functions at= ts1(x) And at= q2(x) were quite simple. The abscissas of the intersection points of these graphs will be the roots of equation (2.1). Example 1. Separate the roots of the equation x 2 -2cosx=0. Solution. Let's look at two ways to separate roots. Derivative f"(x)=2(x+sinx). On the interval (0; /2) f"(x)>0, therefore, f(x) here it increases monotonically and its graph can cross the axis X at no more than one point. Note that f(0)=- 2<0, аf(/2)=(/2)

2 >0. This means that the equation has one positive root lying on the interval (0; /2). Due to the parity of the function, the equation also has one negative root, symmetrical to the positive one. Now let's move on to clarifying the root. To use the combined method of root clarification, you must make sure that f ""(x) on (0; /2) retains the sign, and choose an initial approximation of the root to apply the tangent method. It must satisfy the condition: f(x)f ""(x)>0. Because f ""(x)=2(1+cosx) is positive on , then /2 can be taken as the initial approximation of the root in the tangent method. Therefore, we can put x=/21,570796, x 1 =0 (see algorithm diagram). In our case, the chord method will give an approximate value of the root with a deficiency, and the tangent method will give an excess. Let's consider one iterative step of refining the root. Let's calculate the values f(0), f(/2), f"(/2). New values x 1

And x we find accordingly using the formulas: |x-x 1 |=0.387680.4>10 -4 =. The specified accuracy has not been achieved and calculations must be continued. Iteration number x 1

f(x 1

)

|x-x 1

|

Consequently, the approximate value of the root with the required accuracy was found as a result of three iterations and is approximately equal to 1.0217. Due to the symmetry of the graph of the function f(x) the value of the second root is approximately equal to -1.0217. Root clarification. Statement of the problem

.

Let us assume that the desired root of equation (2.1) is separated, i.e. found segment [a; b], on which there is one and only one root of the equation. Any point on this segment can be taken as an approximate value of the root. The error of such an approximation does not exceed the length [A; b]. Consequently, the task of finding an approximate value of the root with a given accuracy is reduced to finding the segment [a; b] (b -

a<), содержащего только один корень уравнения (2.1). Эту задачу обычно называют задачей root clarification. Description of numerical methods.

Numerical methods allow you to find solutions to certain problems, knowing in advance that the results obtained will be calculated with a certain error, so for many numerical methods it is necessary to know in advance the “level of accuracy” to which the resulting solution will correspond. In this regard, the problem of finding the roots of a polynomial of the form (3.1) is of particular interest because The formulas for finding the roots of even a cubic equation are quite complex. If you need to find the roots of a polynomial whose degree is, for example, 5, then you cannot do without the help of numerical methods, especially since the probability of such a polynomial having natural roots (either integer or exact roots with a “short” fractional part) is quite small, and There are no formulas for finding the roots of an equation of degree greater than 4. De facto, all further operations will be reduced only to clarifying the roots, the intervals of which are approximately known in advance. The easiest way to find these “approximate” roots is to use graphical methods. There are several numerical methods for finding the roots of a polynomial: the iteration method, the method of chords and tangents, the method of bisection, the method of secants. Bisection method(also known as the “bisection method”) is also recursive, i.e. provides for repetition taking into account the results obtained. The essence of the halving method is as follows: 1) We calculate the value of the E coordinate, taking the middle of the segment, i.e. E= (a + b) / 2 (3.2) Method of simple iterations (method of successive approximations).

Let us replace equation (2.1) with the equivalent equation x=(x) (3.3) can be done in various ways, For example x=x+сf(x), c0. (3.4) Let us assume that some initial approximation of the root of equation (3.3) is chosen. Let's define number sequence according to formulas X n+1 =(x n ), n=0,1,2,… (3.5) This sequence is called iterative. If on a segment containing x 0 and all subsequent approximations x n, nN, the function (x) has a continuous derivative "(x) and |"(x)|q<1, то итерационная последовательность (3.5) сходится к единственному на корню уравнения (3.3). Скорость сходимости определяется неравенством From this inequality, in particular, it follows that the rate of convergence of the simple iteration method depends on the value of q: the smaller q, the faster the convergence. Consequently, in practice, when finding the roots by the simple iteration method, it is desirable to present equation (2.1) in the form (3.3) in such a way that the derivative "(x) in the vicinity of the root in absolute value is possibly smaller. For this, the parameter c from the formula is sometimes used (3.4). Newton's method (tangent method).

If a sufficiently good initial approximation is known for which the inequality holds: then you can calculate the only root of the equation using Newton's formula The boundaries of the interval can be used as an initial approximation, and: If on. At each iteration of this method, the amount of calculations is greater than in the bisection and iteration methods, since it is necessary to find not only the value of the function, but also its derivative. However, the convergence rate of Newton's method is much higher. Theorem. Let be the root of the equation, i.e. , and is continuous. Then there is a neighborhood of the root such that if the initial approximation belongs to this neighborhood, then for Newton’s method the sequence of values converges to at. The error of the th root approximation can be estimated using the formula: where is the largest value of the modulus of the second derivative on the segment, is the smallest value of the modulus of the first derivative on the segment. Stop rule: Method of chords and tangents (combined).

This method is based on constructing a schematic graph of a function, determining the intervals of its intersection with the abscissa axis and subsequent “compression” of this interval using constructed chords and tangents to the graph of this function. It should be noted that there are also separate chord methods (provides a root value with a deficiency) and a tangent method (with an excess). However, the advantage of the combined method lies in the “bilateral compression” of the segment in question. Consider the following case: The further algorithm boils down to the following actions: Thus, we obtain a new segment, which (by the definitions of a chord and a tangent) still contains a solution to equation A. Now we take the segment as a new segment and repeat steps 1-4 until the difference F(b)-F(a) becomes less than the initially established error Q. We also note that after this it is recommended to take the arithmetic mean F as the desired solution (a) and F(b). Thus, if a chord (tangent) gives the value of the root with an excess, then this root is taken as the new right boundary, and if with a deficiency, then the left one. In both cases, the exact root lies between the points of intersection of the chord and the tangent with the x-axis. A note on the method of chords and tangents. Since solving the problem requires finding the derivative of the function F(x), the method of chords and tangents is quite difficult to implement at the software level, because the rules for calculating derivatives in general form are quite cumbersome for “understanding” a computer; When directly specifying the derivative for each degree of a polynomial, the computer's memory is seriously loaded, which greatly slows down the work, and specifying a function and, accordingly, its derivative directly in the program code is unacceptable. However, using this method, the convergence of the interval to the root occurs most quickly, especially if you combine the method of chords and tangents with the bisection method, because the middle of a new segment often gives a completely satisfactory solution. Secant method.

The secant method can be obtained from Newton's method by replacing the derivative with an approximate expression - the difference formula: Formula (3.8) uses the two previous approximations and. Therefore, for a given initial value, it is necessary to calculate the next approximation, for example, by Newton’s method with an approximate replacement of the derivative according to the formula Algorithm for the secant method: 1) the initial value and error are specified. Let's calculate 2) for n= 1.2, ..... while the condition is met, we calculate it using formula (3.8). General view of the nonlinear equation f(x)=0,

(6.1) where is the function f(x)

– defined and continuous in some finite or infinite interval. By type of function f(x)

nonlinear equations can be divided into two classes: Algebraic; Transcendent. Algebraic are called equations containing only algebraic functions (integer, rational, irrational). In particular, a polynomial is an entire algebraic function. Transcendental are called equations containing other functions (trigonometric, exponential, logarithmic, etc.) Solve nonlinear equation- means to find its roots or root. Any argument value X, which inverts the function f(x)

to zero is called root of the equation(6.1) or zero function

f(x).

Methods for solving nonlinear equations are divided into: Iterative. Direct methods allow us to write the roots in the form of some finite relation (formula). From the school algebra course, such methods are known for solving quadratic equations, biquadratic equations (the so-called simplest algebraic equations), as well as trigonometric, logarithmic, and exponential equations. However, equations encountered in practice cannot be solved using such simple methods, because Function type f(x)

can be quite complex; Function coefficients f(x)

in some cases they are known only approximately, so the problem of accurately determining the roots loses its meaning. In these cases, to solve nonlinear equations, we use iterative methods, that is, methods of successive approximations. The algorithm for finding the root of the equation should be noted isolated, that is, one for which there is a neighborhood that does not contain other roots of this equation, consists of two stages: root separation, namely, determining the approximate value of a root or segment that contains one and only one root. clarification of approximate value

root, that is, bringing its value to a given degree of accuracy. At the first stage, the approximate value of the root ( initial approximation) can be found in various ways: For physical reasons; From the solution to a similar problem; From other source data; Graphic method. Let's look at the last method in more detail. Real root of the equation f(x)=0

can be approximately defined as the abscissa of the intersection point of the function graph y=f(x)

with axle 0x. If the equation does not have close roots, then they can be easily determined using this method. In practice, it is often advantageous to replace equation (6.1) with the equivalent f 1

(x)=f 2

(x) Where f 1

(x)

And f 2

(x)

- simpler than f(x)

. Then, by plotting the functions f 1

(x)

And f 2

(x),

we obtain the desired root(s) as the abscissa of the intersection point of these graphs. Note that the graphical method, despite its simplicity, is usually applicable only for a rough determination of roots. Particularly unfavorable, in terms of loss of accuracy, is the case when the lines intersect at a very acute angle and practically merge along some arc. If such a priori estimates of the initial approximation cannot be made, then two closely spaced points are found a,

b

, between which the function has one and only one root. For this step, it is useful to remember two theorems. Theorem 1. If a continuous function f(x)

takes values of different signs at the ends of the segment [ a,

b], that is f(a)

f(b)<0,

(6.2)

then inside this segment there is at least one root of the equation. Theorem 2. The root of the equation on the interval [ a,

b] will be unique if the first derivative of the function f’(x),

exists and maintains a constant sign inside the segment, that is Selection of segment [ a,

b] is running Graphically; Analytically (by examining the function f(x)

or by selection). At the second stage, a sequence of approximate root values is found X 1

, X 2

, … , X n. Each calculation step x i called iteration. If x i with increase n approach the true value of the root, then the iterative process is said to converge. A person can recognize his abilities only by trying to apply them. (Seneca)

Problems of solving equations constantly arise in practice, for example, in economics, when developing a business, you want to know when profits will reach a certain value, in medicine, when studying the effects of drugs, it is important to know when the concentration of a substance will reach a given level, etc. In optimization problems, it is often necessary to determine the points at which the derivative of a function becomes 0, which is a necessary condition local extremum. In statistics, when constructing estimates using the least squares or maximum likelihood method, you also have to solve nonlinear equations and systems of equations. So, a whole class of problems arises related to finding solutions nonlinear equations, such as equations or equations, etc. In the simplest case, we have a function defined on the interval ( a,

b ) and taking certain values. Each value x

from this segment we can compare the number, this is functional dependence, a key concept in mathematics. We need to find a value at which these are called the roots of the function Visually we need to determine the intersection point of the function graphwith the abscissa axis. The simplest method for finding the roots of an equation is the halving method, or dichotomy. This method is intuitive and everyone would act in a similar way when solving a problem. The algorithm is as follows. Suppose we find two points and , such that they have different signs, then between these points there is at least one root of the function. Let's divide the segment in half and enter average point . Then either Let us leave that half of the segment for which the values at the ends have different signs. Now we divide this segment in half again and leave that part at the boundaries of which the function has different signs, and so on, to achieve the required accuracy. Obviously, we will gradually narrow the area where the root of the function is located, and, therefore, we will determine it with a certain degree of accuracy. Note that the described algorithm is applicable for any continuous function. The advantages of the halving method include its high reliability and simplicity. The disadvantage of the method is the fact that before you start using it, you need to find two points where the function values have different signs. It is obvious that the method is not applicable for roots of even multiplicity and also cannot be generalized to the case of complex roots and to systems of equations. The order of convergence of the method is linear, at each step the accuracy doubles; the more iterations are done, the more accurately the root is determined. Newton's classical method or tangents is that if is some approximation to the root of the equation The tangent equation to a function at a point has the form: In the tangent equation we put and . Then the algorithm for sequential calculations in Newton’s method is as follows: The convergence of the tangent method is quadratic, the order of convergence is 2. Thus, the convergence of Newton's tangent method is very fast. Remember this wonderful fact! Without any changes, the method is generalized to the complex case. If the root is a root of second multiplicity or higher, then the order of convergence drops and becomes linear. Exercise 1. Using the tangent method, find a solution to the equation on the segment (0, 2). Exercise 2. Using the tangent method, find a solution to the equation on the segment (1, 3). The disadvantages of Newton's method include its locality, since it is guaranteed to converge for an arbitrary starting approximation only if the condition is satisfied everywhere The disadvantage of Newton's method is the need to calculate derivatives at each step. Newton's method (tangent method) is used if the equation f(x) = 0

has a root and the following conditions are met: 1) function y=

f(x)

defined and continuous at ; 2) f(a)·

f(b) < 0

(the function takes values of different signs at the ends of the segment [ a;

b]); 3) derivatives f"(x)

And f""(x)

preserve sign on the interval [ a;

b] (i.e. function f(x)

either increases or decreases on the segment [ a;

b], while maintaining the direction of the convexity); The main idea of the method is as follows: on the segment [ a;

b] such a number is selected x 0

,

at which f(x 0

)

has the same sign as f""

(x 0

),

i.e. the condition is satisfied f(x 0

)·

f""

(x) > 0

. Thus, the point with the abscissa is selected x 0

, in which the tangent to the curve y=

f(x)

on the segment [ a;

b] intersects the axis Ox. Per point x 0

First it is convenient to select one of the ends of the segment. Let's consider Newton's method using a specific example. Let us be given an increasing function y = f(x) =x 2 -2, continuous on the segment (0;2), and having f"(x) = 2

x >

0

And f ""

(x) = 2 > 0

. Drawing1

. f(x) =x 2 -2 The tangent equation in general form has the following representation: y-y 0 = f"

(x 0)·(x-x 0). In our case: y-y 0 =2x 0 ·(x-x 0). For the point x 0 we choose the point B 1 (b; f(b)) = (2,2). Draw a tangent to the function y = f(x) at point B 1, and denote the point of intersection of the tangent and axis Ox dot x 1. We get the equation of the first tangent: y-2=2·2(x-2), y=4x-6. Ox: x 1 = Drawing2.

Result of the first iteration y=f(x) Ox through the point x 1, we get the point B 2 =(1.5; 0.25). Draw a tangent to the function again y = f(x) at point B 2, and denote the point of intersection of the tangent and axis Ox dot x 2. Equation of the second tangent: y-0.25=2*1.5(x-1.5),

y = 3

x - 4.25.

Intersection point of tangent and axis Ox: x 2 =.

Drawing3.

Second iteration of Newton's method Then we find the intersection point of the function y=f(x) and a perpendicular drawn to the axis Ox through point x 2, we get point B 3 and so on. Drawing4.

Third step of the tangent method The first approximation of the root is determined by the formula: The second approximation of the root is determined by the formula: The third approximation of the root is determined by the formula: Thus ,

i The th approximation of the root is determined by the formula: Calculations are carried out until the decimal places that are needed in the answer match, or the specified precision e is achieved - until the inequality is satisfied |

xi-

xi-1

| <

e.

In our case, let’s compare the approximation obtained in the third step with the real answer calculated on a calculator: Figure 5. Root of 2, calculated on a calculator As you can see, already at the third step we received an error of less than 0.000002. In this way, you can calculate the value of the “square root of 2” value with any degree of accuracy. This remarkable method was invented by Newton and allows you to find the roots of very complex equations. In this article, we will automate the process of calculating the roots of equations by writing a console application in C++. We will develop it in Visual C++ 2010 Express, this is a free and very convenient C++ development environment. First, let's launch Visual C++ 2010 Express. The program start window will appear. In the left corner, click “Create a project”. Rice. 1. Home page Visual C++ 2010 Express In the menu that appears, select “Win32 Console Application” and enter the application name “Newton_Method”. Rice. 2. Create a project // Newton.cpp method: defines the entry point for the console application #include "stdafx.h" #include using namespace std; float f(double x) //returns the value of the function f(x) = x^2-2 float df(float x) //returns the derivative value float d2f(float x) // value of the second derivative int _tmain(int argc, _TCHAR* argv) int exit = 0, i=0;//variables for exit and loop double x0,xn;//calculated approximations for the root double a, b, eps; // boundaries of the segment and required accuracy cout<<"Please input \n=>"; cin>>a>>b; // enter the boundaries of the segment on which we will look for the root cout<<"\nPlease input epsilon\n=>"; cin>>eps; // enter the required accuracy calculations if (a > b) // if the user has mixed up the boundaries of the segment, swap them if (f(a)*f(b)>0) // if the signs of the function at the edges of the segment are the same, then there is no root here cout<<"\nError! No roots in this interval\n"; if (f(a)*d2f(a)>0) x0 = a; // to select the starting point, check f(x0)*d2f(x0)>0 ? xn = x0-f(x0)/df(x0); // consider the first approximation cout<<++i<<"-th iteration = "< while(fabs(x0-xn) > eps) // will continue to calculate until we reach the required accuracy xn = x0-f(x0)/df(x0); // directly Newton's formula cout<<++i<<"-th iteration = "< cout<<"\nRoot = "< cout<<"\nExit?=>"; ) while (exit!=1); // until the user enters exit = 1 Let's see how it works. Click on the green triangle in the upper left corner of the screen, or press the F5 key. If a compilation error occurs “Error error LNK1123: failure to convert to COFF: file is invalid or damaged,” then this can be cured either by installing the first Service pack 1, or in the project settings Properties -> Linker disabling incremental linking. Rice. 4. Solving the project compilation error We will look for the roots of the function f(x) =x2-2. First, let's check the application's performance on the “wrong” input data. There are no roots on the segment, our program should display an error message. We now have an application window: Rice. 5. Entering input data Let us introduce the boundaries of the segment 3 and 5, and the accuracy is 0.05. The program, as expected, produced an error message that there were no roots on this segment. Rice. 6. Error “There are no roots on this segment!” We are not going to leave yet, so what about the message “Exit?” enter “0”. Now let's check the application using the correct input data. Let's enter the segment and accuracy 0.0001. Rice. 7. Calculation of the root with the required accuracy As we can see, the required accuracy was achieved already at the 4th iteration. To exit the application, enter “Exit?” => 1. To avoid calculating the derivative, Newton's method can be simplified by replacing the derivative with an approximation calculated from the previous two points: The iterative process looks like: This is a two-step iterative process because it uses the previous two to find the next approximation. The order of convergence of the secant method is lower than that of the tangent method and is equal in the case of a single root. This remarkable quantity is called the golden ratio: Let us verify this, assuming for convenience that . Thus, up to higher order infinitesimals Discarding the remainder term, we obtain a recurrence relation, the solution of which is naturally sought in the form . After substitution we have: and For convergence it is necessary that it be positive, therefore . Since knowledge of the derivative is not required, with the same amount of calculations in the secant method (despite the lower order of convergence) one can achieve greater accuracy than in the tangent method. Note that near the root you have to divide by a small number, and this leads to a loss of accuracy (especially in the case of multiple roots), therefore, having chosen a relatively small number, perform calculations before performing As soon as growth begins, the calculations are stopped and the last iteration is not used. This procedure for determining the end of iterations is called the technique Garvika. Let's consider a three-step method in which the approximation is determined by the three previous points , and . To do this, we replace, similarly to the secant method, the function with an interpolation parabola passing through the points , and . In Newton's form it looks like: A point is defined as the one of the roots of this polynomial that is closer in absolute value to the point. The order of convergence of the parabola method is higher than that of the secant method, but lower than that of Newton's method. An important difference from the previously considered methods is the fact that even if real for real and the starting approximations are chosen to be real, the parabola method can lead to a complex root of the original problem.

This method is very convenient for finding roots of high degree polynomials. The problem of finding solutions to equations can be formulated as a problem of finding roots: , or as a problem of finding a fixed point. Let According to Banach's theorem, there is a unique fixed point It can be found as the limit of a simple iterative procedure where the initial approximation is an arbitrary point in the interval. If the function is differentiable, then a convenient compression criterion is the number . Indeed, according to Lagrange’s theorem Thus, if the derivative is less than one, then it is a compression. Condition The study of various phenomena or processes using mathematical methods is carried out using a mathematical model .

A mathematical model is a formalized description of the object under study through systems of linear, nonlinear or differential equations, systems of inequalities, a definite integral, a polynomial with unknown coefficients, etc. The mathematical model must cover the most important characteristics of the object under study and reflect the connections between them. After the mathematical model has been compiled, proceed to the formulation of the computational problem .

At the same time, it is established which characteristics of the mathematical model are the initial (input) data ,

which - model parameters ,

and which - output data. The resulting problem is analyzed from the point of view of the existence and uniqueness of a solution. At the next stage, a method for solving the problem is selected. In many specific cases, it is not possible to find a solution to the problem in explicit form, since it is not expressed through elementary functions. Such problems can only be solved approximately. Computational (numerical) methods mean approximate procedures that allow obtaining a solution in the form of specific numerical values. Computational methods are usually implemented on a computer. To solve the same problem, various computational methods can be used, so you need to be able to evaluate the quality of various methods and the effectiveness of their use for a given problem. Then, to implement the selected computational method, an algorithm and computer program are compiled .

It is important for a modern engineer to be able to transform a problem into a form convenient for implementation on a computer and construct an algorithm for solving such a problem. Currently, they are widely used as packages that implement the most general methods for solving a wide range of problems (for example, Mathcad, The calculation results are analyzed and interpreted. If necessary, the method parameters, and sometimes the mathematical model, are adjusted, and a new cycle of solving the problem begins. 1.1. Statement of the problem Let some function be given and you need to find all or some values for which . The value at which , is called root(or decision) equations. A function is often assumed to be twice continuously differentiable in a neighborhood of the root. The root of the equation is called simple, if the first derivative of the function at a point is not equal to zero, i.e. . If , then the root is called multiple root. Geometrically, the root of the equation is the point of intersection of the graph of the function with the abscissa axis. In Fig. Figure 1 shows a graph of a function that has four roots: two simple and two multiple. Most methods for solving equations are focused on finding simple roots. 1.2. Main stages of finding a solution In the process of approximately finding the roots of an equation, two stages are usually distinguished: localization(or separation) of the root And root clarification. Root localization involves defining a segment containing one and only one root. There is no universal root localization algorithm. Sometimes it is convenient to localize the root by constructing a graph or table of function values. The presence of a root on a segment is indicated by the difference in the signs of the function at the ends of the segment. The basis for this is the following theorem. Theorem .

If a function is continuous on a segment and takes values of different signs at its ends so that , then the segment contains at least one root of the equation. However, a root of even multiplicity cannot be localized in this way, since in the neighborhood of such a root the function has a constant sign. At the root refinement stage, the approximate value of the root is calculated with a given accuracy. The approximate value of the root is refined using various iterative methods. The essence of these methods is to sequentially calculate values that are approximations to the root. 1.3. Half division method The half method is the simplest and most reliable way to solve a nonlinear equation. Let it be known from preliminary analysis that the root of the equation is on the segment , i.e., so that . Let the function be continuous on a segment and take values of different signs at the ends of the segment, i.e. . Divide the segment in half. Let's get a point. Let's calculate the value of the function at this point: . If , then is the desired root, and the problem is solved. If , then - a number of a certain sign: either. Then either at the ends of the segment or at the ends of the segment the values of the function have different signs. Let's denote such a segment. Obviously, the length of the segment is two times less than the length of the segment . Let's do the same with the segment. As a result, we get either a root or a new segment, etc. (Fig. 2). The middle of the th segment. Obviously, the length of the segment will be equal to , and since , then End criterion. From relation (1) it follows that for a given approximation accuracy

the calculation ends when the inequality or inequality is satisfied. Thus, the number of iterations can be determined in advance. The value is taken as the approximate value of the root. Example. Let's find it approximately with accuracy. This problem is equivalent to solving an equation, or finding the zero of a function. Let's take the segment as the initial segment. At the ends of this segment, the function takes values with different signs: . Let us find the number of divisions of the segment required to achieve the required accuracy. We have: Consequently, no later than the 6th division we will find with the required accuracy, . The calculation results are presented in Table 1. Table 1 1.4. Simple iteration method Let the equation be replaced by its equivalent equation Let us choose the initial approximation in some way. Let's calculate the value of the function at and find the refined value. Let us now substitute into equation (1) and obtain a new approximation, etc. Continuing this process indefinitely, we obtain a sequence of approximations to the root: Formula (3) is calculation formula simple iteration method. If the sequence converges at , i.e. exists and the function is continuous, then, passing to the limit in (3) and taking into account (4), we obtain: . Thus, therefore, is the root of equation (2). Convergence of the method. The convergence of the simple iteration method is established by the following theorem. Theorem. Let the function be defined and differentiable on the interval, and let all its values be . Then, if the condition is satisfied: 1) the iteration process converges regardless of the initial value; 2) the limit value is the only root of the equation on the interval. Proof. Since and , we can write According to the mean value theorem (it states that if the derivative of a function is continuous on a certain interval, then the tangent of the angle of inclination of the chord drawn between the points and , (i.e. is equal to the derivative of the function at some intermediate point lying between and ) the quotient in the last expression will be is equal to , where is some intermediate point in the root search interval. Therefore, . If we introduce a notation for the entire search interval, then the previous equality can be rewritten as: Likewise. Then the inequality will be true for: etc. Continuing these calculations further, the result is , where is a natural number. Thus, for the method to converge, the following inequality must be satisfied: . It follows that it must be less than one. In turn, for all other values less than , we can write: . We determine the number from the relation. Then the following inequality is true (see the derivation below): . If we set the condition that the true value of the root must differ from the approximate value by the amount , i.e. , then the approximations must be calculated until the inequality is satisfied or and then . Derivation of the inequality. Consider two successive approximations: and . From here. Using the mean value theorem, we get: then, based on the condition, we can write: On the other hand, let . It's obvious that . From here, taking into account that , we get Then or. Using the previous formula, you can get: Let's move to the limit in equality (3), due to the continuity of the function we obtain , that is, the root of equation (2). There are no other roots, since if , then , then , where . Equality to zero will be achieved if . That is, there is only one root. The theorem has been proven. Reducing the equation to form In the general case, it is possible to obtain a suitable iterative form by carrying out an equivalent transformation of the original equation, for example, multiplying it by the coefficient: . By then adding to both sides of the equation and denoting, we can require the fulfillment of a sufficient condition. From here the required value is determined. Since the condition must be satisfied throughout the entire segment, the largest value on this segment should be used for selection, i.e. This relationship determines the range of coefficient values, changing the value within the limits. Usually accepted. In Fig. 3-6 show four cases of relative positions of lines and the corresponding iterative processes. Rice. 3 and 4 correspond to the case , and the iterative process converges. Moreover, if (Fig. 3), the convergence is one-sided, and if (Fig. 4), the convergence is two-sided, oscillatory. Rice. 5 and 6 correspond to the case - the iteration process diverges. In this case, there can be one-sided (Fig. 5) and two-sided (Fig. 6) divergence. Method error. The error estimate has been proven (5). End criterion. From estimate (5) it follows that calculations must be continued until the inequality is satisfied. If , then the estimate is simplified: . Example 1. We use the simple iteration method to solve the equation with an accuracy of . Let's transform the equation to the form: ,

i.e. .

It is easy to verify that the root of the equation is on the segment. Having calculated the values at the ends of the segment, we obtain: , a, i.e. the function at the ends of the segment has different signs, therefore there is a root inside the segment. The location of the root is clearly illustrated in Fig. 7. Let's calculate the first and second derivatives of the function: Since on the segment , the derivative increases monotonically on this segment and takes its maximum value at the right end of the segment, i.e. at the point .

Therefore, the following assessment is fair: Thus, the condition is satisfied, and the criterion for completing the calculations can be used. In table 2 shows the approximations obtained using the calculation formula. The value chosen as the initial approximation is . Table 2 The termination criterion is satisfied when , .

The convergence is two-way; the qualitative nature of such convergence is shown in Fig. 4. Approximate value of the root with the required accuracy. Example 2. Solve the equation on a segment using simple iteration with an accuracy of 0.025. To solve, the original equation is reduced to the form . To select a value we use the above formula. Then the calculation formula looks like . As an initial approximation, you can choose the upper boundary of a given segment. Since, then. 1.5. Newton's method (tangent method) Newton's method is the most effective method for solving nonlinear equations. Let the root , i.e. . We assume that the function is continuous on the interval and twice continuously differentiable on the interval. Let's put .

Let's draw a tangent to the graph of the function at a point (Fig. 8). The tangent equation will look like: . We obtain the first intersection by taking the abscissa of the point of intersection of this tangent with the axis, i.e., by putting: . We will do the same with the point, then with the point, etc., as a result we obtain a sequence of approximations, and Formula (6) is calculation formula of Newton's method. Newton's method can be considered as a special case of the simple iteration method, for which . Convergence of the method. The convergence of Newton's method is established by the following theorem. Theorem. Let be a simple root of the equation and in some neighborhood of this root the function is twice continuously differentiable. Then there is such a small neighborhood of the root that, with an arbitrary choice of the initial approximation from this neighborhood, the iteration sequence defined by formula (6) does not go beyond this neighborhood and the estimate is valid: The convergence of Newton's method depends on how close to the root the initial guess is chosen. Choice of initial approximation. Let be a segment containing the root. If, as an initial approximation, we choose the end of the segment for which , then iterations (6) converge, and monotonically. Rice. 8 corresponds to the case when the right end of the segment was chosen as the initial approximation: (Here). Method error. Estimate (7) is inconvenient for practical use. In practice, the following error estimates are used: End Criteria .

Estimate (8) allows us to formulate the following criterion for the end of iterations of Newton’s method. For a given accuracy, calculations must be carried out until the inequality is satisfied Example. Calculate the negative root of the equation using Newton's method with an accuracy of 0.0001. By separating the root, you can make sure that the root is localized on the interval. In this interval and . Since and , then we can take . .

That's why . So, as a result we get the following, and on , therefore . Since then

![]() which means q=1/k< 1 и процесс будет сходиться.

which means q=1/k< 1 и процесс будет сходиться.

0<|j¢(x)| = |1 - lf¢(x)| < 1. Возьмем l= 2/(m 1 +M 1 ),

where m 1 and M 1 are the minimum and maximum values of f’(x) (m 1 =min|f’(x)|, M 1 =max|f’(x)|) for xО, i.e. 0£ m 1 £ f¢(x) £ M 1 £1. Then![]()

![]() .

.

(Fig.1.2.3-8).

![]()

![]()

![]()

![]()

![]()

![]() (1.2.3-15)

(1.2.3-15) (1.2.3-16)

(1.2.3-16)

1.2.3-14 are presented in table 1.2.3-4.

![]()

![]()

![]()

![]()

![]()

![]()

![]()

![]()

![]()

![]()

6.2. Solution methods

(6.3)

(6.3)Numerical methods: solving nonlinear equations

Halving method

![]() , or

, or ![]() .

.Newton's method: theoretical foundations

![]() , then the next approximation is defined as the root of the tangent to the function drawn at the point .

, then the next approximation is defined as the root of the tangent to the function drawn at the point .![]()

![]()

![]() , in the opposite situation, convergence occurs only in a certain neighborhood of the root.

, in the opposite situation, convergence occurs only in a certain neighborhood of the root.Visualization of Newton's method

![]() = 1.5.

= 1.5.![]() =

=![]()

![]()

![]()

Newton's method: application in C++

Secant method

![]()

![]()

![]() and continue them until the modulus of the difference between neighboring approximations decreases.

and continue them until the modulus of the difference between neighboring approximations decreases.

Parabola method

Simple iteration method

![]() and - compression: (in particular, the fact that - compression, as is easy to see, means that).

and - compression: (in particular, the fact that - compression, as is easy to see, means that).![]() is essential, because if, for example, on , then there is no fixed point, although the derivative is equal to zero. The speed of convergence depends on the quantity . The smaller , the faster the convergence.

is essential, because if, for example, on , then there is no fixed point, although the derivative is equal to zero. The speed of convergence depends on the quantity . The smaller , the faster the convergence.

MatLAB), as well as packages that implement methods for solving special problems.

1,0000

1,0000

1,0000

1,1250

1,1250

1,1406

1,1406

2,0000

1,5000

1,2500

1,2500

1,1875

1,1875

1,1562

1,5000

1,2500

1,1250

1,1875

1,1406

1,1562

1,1484

Zn

-

-

-

-

-

-

-

Zn

+

+

+

+

+

+

+

5,5938

0,7585

-0,2959

0,1812

-0,0691

0,0532

-0,0078

-

1,0000

0,5000

0,2500

0,1250

0,0625

0,0312

0,0156

to ensure the fulfillment of inequality

0,8415

0,8861

0,8712

0,8774

0,8765

0,8

0,78

-11

-5183

0,6662

-10,3336

307,3

4276,8

0,0718

-10,2618

3,496

4185,9

0,0008

-10,261

0,1477

-

-